---
language:
- multilingual
license: other
license_name: kwaipilot-license
license_link: LICENSE
library_name: transformers
---

<div align="center">
  <img src="https://raw.githubusercontent.com/Anditty/OASIS/refs/heads/main/Group.svg" width="60%" alt="Kwaipilot" />
</div>

<hr>

<div align="center" style="line-height: 1;">
  <a href="https://huggingface.co/Kwaipilot/KAT-V1-40B" target="_blank">
    <img alt="Hugging Face" src="https://img.shields.io/badge/HuggingFace-fcd022?style=for-the-badge&logo=huggingface&logoColor=000&labelColor"/>
  </a>
  <a href="https://arxiv.org/pdf/2507.08297" target="_blank">
    <img alt="arXiv" src="https://img.shields.io/badge/arXiv-2507.08297-b31b1b.svg?style=for-the-badge"/>
  </a>
</div>

# News

- Kwaipilot-AutoThink ranks first among all open-source models on [LiveCodeBench Pro](https://livecodebenchpro.com/), a challenging benchmark explicitly designed to prevent data leakage, and even surpasses strong proprietary systems such as Seed and o3-mini.

***

| 31 |

+

# Introduction

|

| 32 |

+

|

| 33 |

+

**KAT (Kwaipilot-AutoThink)** is an open-source large-language model that mitigates *over-thinking* by learning **when** to produce explicit chain-of-thought and **when** to answer directly.

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

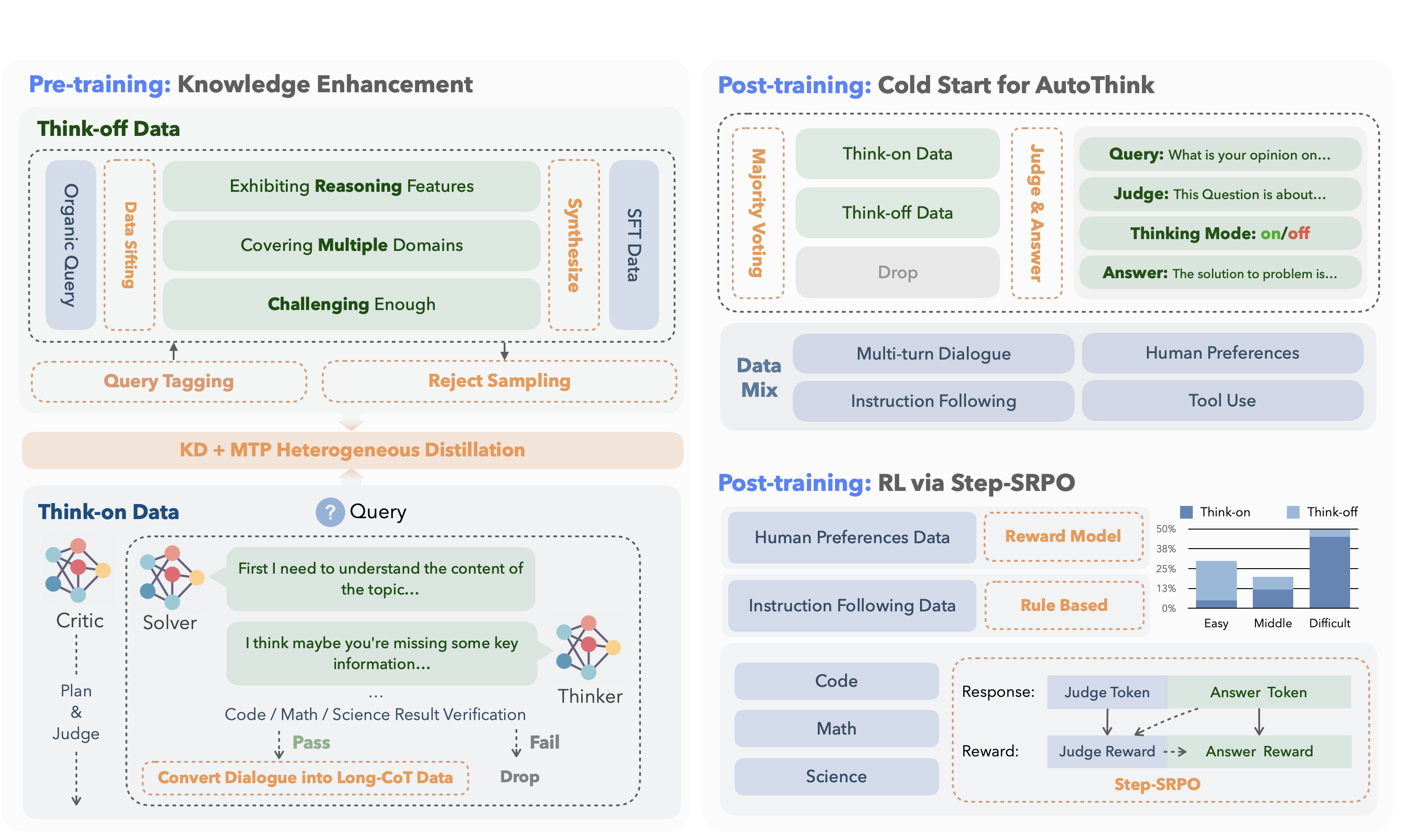

Its development follows a concise two-stage training pipeline:

|

| 38 |

+

|

| 39 |

+

<table>
  <thead>
    <tr>
      <th style="text-align:left; width:18%;">Stage</th>
      <th style="text-align:left;">Core Idea</th>
      <th style="text-align:left;">Key Techniques</th>
      <th style="text-align:left;">Outcome</th>
    </tr>
  </thead>
  <tbody>
    <tr>
      <td><strong>1. Pre-training</strong></td>
      <td>Inject knowledge while separating “reasoning” from “direct answering”.</td>
      <td>
        <em>Dual-regime data</em><br>
        • <strong>Think-off</strong> queries labeled via a custom tagging system.<br>
        • <strong>Think-on</strong> queries generated by a multi-agent solver.<br><br>
        <em>Knowledge Distillation + Multi-Token Prediction</em> for fine-grained utility.
      </td>
      <td>Base model attains strong factual and reasoning skills without full-scale pre-training costs.</td>
    </tr>
    <tr>
      <td><strong>2. Post-training</strong></td>
      <td>Make reasoning optional and efficient.</td>
      <td>
        <em>Cold-start AutoThink</em> — majority vote sets the initial thinking mode.<br>
        <em>Step-SRPO</em> — intermediate supervision rewards correct <strong>mode selection</strong> and <strong>answer accuracy</strong> under that mode.
      </td>
      <td>Model triggers CoT only when beneficial, reducing token use and speeding inference.</td>
    </tr>
  </tbody>
</table>
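
The table describes the post-training signals only at a high level. The sketch below is a rough illustration of two of the ideas it names, not the authors' implementation:

- majority voting over per-query mode labels, to pick an initial thinking mode;
- a toy two-part reward that credits mode choice and answer correctness separately.

Several details are assumptions made for this sketch. The source of the votes is not specified in this card. The tie-breaking rule and the weights (`mode_weight`, `answer_weight`) are hypothetical, and so are the helper names `majority_mode` and `toy_reward`. Step-SRPO itself is not reproduced here.

```python
from collections import Counter
from typing import List

THINK_ON = "think_on"
THINK_OFF = "think_off"


def majority_mode(votes: List[str]) -> str:
    """Pick an initial thinking mode for one query by majority vote.

    `votes` holds THINK_ON / THINK_OFF labels (where they come from is an
    assumption of this sketch). Ties fall back to THINK_ON, a hypothetical
    choice that treats explicit reasoning as the safer default.
    """
    if not votes:
        raise ValueError("need at least one vote")
    counts = Counter(votes)
    return THINK_ON if counts[THINK_ON] >= counts[THINK_OFF] else THINK_OFF


def toy_reward(chosen_mode: str, target_mode: str, answer_correct: bool,
               mode_weight: float = 0.5, answer_weight: float = 1.0) -> float:
    """Hypothetical two-part reward: one term for choosing the target mode,
    one for answering correctly under whichever mode was chosen."""
    mode_term = mode_weight if chosen_mode == target_mode else 0.0
    answer_term = answer_weight if answer_correct else 0.0
    return mode_term + answer_term


if __name__ == "__main__":
    label = majority_mode([THINK_OFF, THINK_OFF, THINK_ON])
    print(label)                               # think_off
    print(toy_reward(THINK_OFF, label, True))  # 1.5
    print(toy_reward(THINK_ON, label, True))   # 1.0
```

Keeping the two reward terms separate makes it easy to see how a correct answer in the "wrong" mode still earns partial credit. How the real training balances these terms is left to the forthcoming paper.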

|

| 71 |

+

|

| 72 |

+

|

| 73 |

+

|

| 74 |

+

|

| 75 |

+

***

|

| 76 |

+

|

| 77 |

+

# Data Format

|

| 78 |

+

|

| 79 |

+

|

| 80 |

+

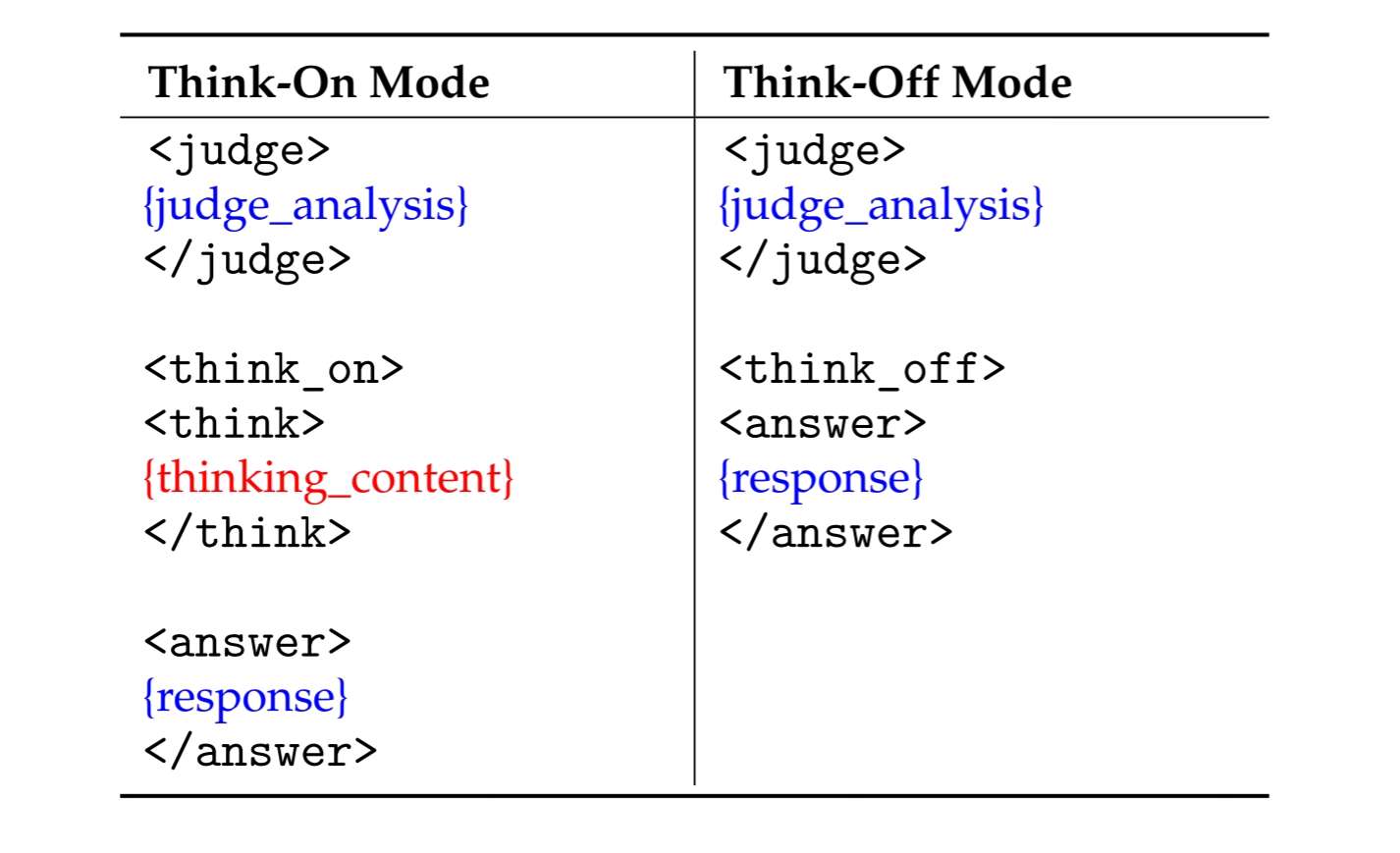

KAT produces responses in a **structured template** that makes the reasoning path explicit and machine-parsable.

|

| 81 |

+

Two modes are supported:

## Special Tokens

| Token | Description |
|-------|-------------|
| `<judge>` | Analyzes the input to decide whether explicit reasoning is needed. |
| `<think_on>` / `<think_off>` | Indicates whether reasoning is **activated** (“on”) or **skipped** (“off”). |
| `<think>` | Marks the start of the chain-of-thought segment when `think_on` is chosen. |
| `<answer>` | Marks the start of the final user-facing answer. |
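
To consume the template programmatically, a decoded response can be split into its parts with regular expressions. The sketch below is an illustration, not an official parser, and it rests on a few assumptions:

- The closing `</think>` tag is assumed; it does not appear in the Quick Start sample output.
- The mode pattern accepts both the `<think_on>` spelling from the table and the `<think off>` spelling seen in that sample.
- `parse_kat_response` and `KatResponse` are hypothetical names.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Accept both "<think_on>" (token table) and "<think on>" (sample output).
_MODE_RE = re.compile(r"<think[_ ](on|off)>")
_JUDGE_RE = re.compile(r"<judge>(.*?)</judge>", re.DOTALL)
# Assumption: the chain-of-thought segment is closed with "</think>".
_THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)
# The closing "</answer>" may be missing if generation was cut off.
_ANSWER_RE = re.compile(r"<answer>(.*?)(?:</answer>|$)", re.DOTALL)


@dataclass
class KatResponse:
    judge: Optional[str]     # the model's analysis of whether to reason
    mode: Optional[str]      # "on", "off", or None if no mode tag was found
    thinking: Optional[str]  # chain of thought (think-on only)
    answer: Optional[str]    # final user-facing answer


def _first(pattern: re.Pattern, text: str) -> Optional[str]:
    """Return the stripped first capture group of `pattern`, or None."""
    match = pattern.search(text)
    return match.group(1).strip() if match else None


def parse_kat_response(text: str) -> KatResponse:
    """Split a KAT-style response into its judge, mode, thinking and answer parts."""
    return KatResponse(
        judge=_first(_JUDGE_RE, text),
        mode=_first(_MODE_RE, text),
        thinking=_first(_THINK_RE, text),
        answer=_first(_ANSWER_RE, text),
    )


if __name__ == "__main__":
    sample = (
        "<judge>\nSimple factual request.\n</judge>\n\n"
        "<think off>\n<answer>An LLM is a model trained on text.</answer>"
    )
    parsed = parse_kat_response(sample)
    print(parsed.mode)    # off
    print(parsed.answer)  # An LLM is a model trained on text.
```

Returning `None` for absent parts, rather than raising, keeps the parser usable on think-off responses, which have no thinking segment, and on truncated generations.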


***

# 🔧 Quick Start

```python
from transformers import AutoTokenizer, AutoModelForCausalLM

model_name = "Kwaipilot/KAT-V1-40B"

# load the tokenizer and the model
tokenizer = AutoTokenizer.from_pretrained(model_name, trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    torch_dtype="auto",
    device_map="auto"
)

# prepare the model input
prompt = "Give me a short introduction to large language model."
messages = [
    {"role": "user", "content": prompt}
]
text = tokenizer.apply_chat_template(
    messages,
    tokenize=False,
    add_generation_prompt=True
)
model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

# conduct text completion
generated_ids = model.generate(
    **model_inputs,
    max_new_tokens=65536,
    do_sample=True,  # sampling must be enabled for temperature/top_p to take effect
    temperature=0.6,
    top_p=0.95,
)
# keep only the newly generated tokens (drop the prompt)
output_ids = generated_ids[0][len(model_inputs.input_ids[0]):].tolist()
content = tokenizer.decode(output_ids, skip_special_tokens=True).strip("\n")
print("prompt:\n", prompt)
print("content:\n", content)
"""
prompt:
Give me a short introduction to large language model.
content:
<judge>
The user's request is to provide a concise factual introduction to large language models, which involves retrieving and summarizing basic information. This task is straightforward as it only requires recalling and presenting well-known details without deeper analysis. No complex reasoning is needed here—just a simple explanation will suffice.
</judge>

<think off>
<answer>A **Large Language Model (LLM)** is an advanced AI system trained on vast amounts of text data to understand, generate, and process human-like language. Here’s a concise introduction:

### Key Points:
1. **Training**: Trained on diverse text sources (books, websites, etc.) using deep learning.
2. **Capabilities**:
- Answer questions, generate text, summarize content, translate languages.
- Understand context, sentiment, and nuances in language.
3. **Architecture**: Often based on **transformer models** (e.g., BERT, GPT, LLaMA).
4. **Scale**: Billions of parameters, requiring massive computational resources.
5. **Applications**: Chatbots, content creation, coding assistance, research, and more.

### Examples:
- **OpenAI’s GPT-4**: Powers ChatGPT.
- **Google’s Gemini**: Used in Bard.
- **Meta’s LLaMA**: Open-source alternative.

### Challenges:
- **Bias**: Can reflect biases in training data.
- **Accuracy**: May hallucinate "facts" not grounded in reality.
- **Ethics**: Raises concerns about misinformation and job displacement.

LLMs represent a leap forward in natural language processing, enabling machines to interact with humans in increasingly sophisticated ways. 🌐🤖</answer>
"""
```

***

# Future Releases

Looking ahead, we will publish a companion paper that fully documents the **AutoThink training framework**, covering:

* Cold-start initialization procedures
* Reinforcement-learning (Step-SRPO) strategies
* Data curation and reward design details

At the same time, we will open-source:

* **Training resources** – the curated dual-regime datasets and RL codebase
* **Model suite** – checkpoints at 1.5B, 7B, and 13B parameters, all trained with AutoThink gating