Detecting Beyond Sight: Building AI-Enabled SAR Intelligence with Synthetic Data

Written by Gandharv Mahajan, Apurva Shah, Rishikesh Jadhav, Massimo Isonni, Ayssa Cassity, and Mish Sukharev

As critical as electro-optical sensors are to physical AI, they have fundamental limitations for certain perception tasks. They require both light and a clear line of sight, which makes them vulnerable to darkness and to common obscurants such as fog, smoke, clouds, vegetation, and rain. In many operational scenarios, this severely limits their effectiveness.

Synthetic Aperture Radar (SAR) can overcome these limitations, providing critical operational data in visual conditions that defeat optical sensors.

SAR is a form of radar that generates high-resolution images of terrain, structures, and objects regardless of weather or lighting conditions. Unlike optical sensors, SAR uses radio waves, allowing it to "see" through cloud cover, smoke, darkness, rain, and, at longer wavelengths, even dense foliage. It can also detect subtle changes in surface features, making it well suited to tracking movement, deformation, or the presence of camouflaged objects.

These capabilities have made SAR a critical tool across industries such as disaster preparedness, agriculture, and defense and intelligence. For Department of Defense (DoD) applications especially, SAR plays key roles in missions ranging from battlefield surveillance to maritime domain awareness and target identification.

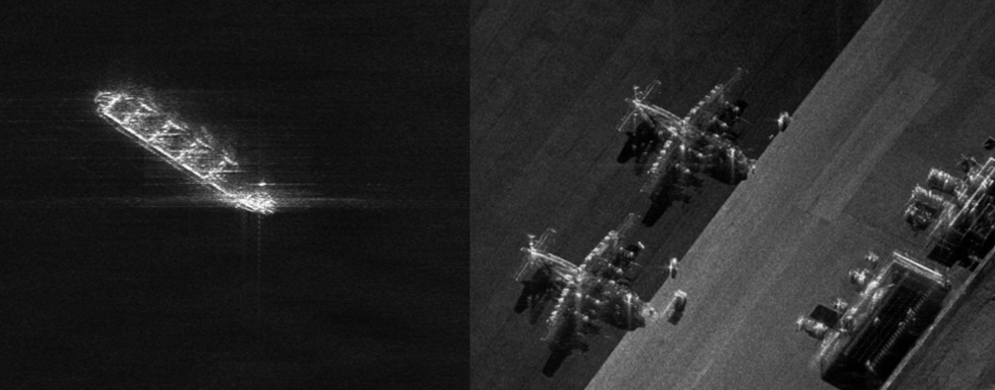

Examples of SAR imagery. Left: ship at sea. Right: aircraft at an air force base. (Source: Sandia National Laboratories)

AI-Enabled SAR Intelligence

At first glance, SAR images may look like black-and-white optical photos, but because they result from the constructive and destructive interference of returning radio waves, interpreting them is far from intuitive. Fortunately, today’s AI models can extract a wide range of valuable information from SAR imagery, which makes training AI systems to interpret SAR a rapidly growing priority.
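To see why interference makes SAR counterintuitive, consider a simplified textbook model (an idealization, not Falcon’s simulator). Each point scatterer k at slant range R_k returns an echo of amplitude a_k whose phase grows with the round-trip path, and a pixel records the complex sum s of all echoes in its resolution cell:

$$
\phi_k = \frac{4\pi R_k}{\lambda}, \qquad s = \sum_k a_k \, e^{-j\phi_k}
$$

For two equal scatterers (amplitude a) in the same cell, a range offset of a quarter wavelength shifts the relative phase by π and the echoes cancel, while an offset of half a wavelength shifts it by 2π and they reinforce:

$$
\Delta R = \tfrac{\lambda}{4} \;\Rightarrow\; \Delta\phi = \pi \;\Rightarrow\; |s| = a\,\lvert 1 + e^{-j\pi} \rvert = 0,
\qquad
\Delta R = \tfrac{\lambda}{2} \;\Rightarrow\; \Delta\phi = 2\pi \;\Rightarrow\; |s| = a\,\lvert 1 + e^{-j2\pi} \rvert = 2a
$$

At an assumed X-band wavelength of λ = 3 cm (10 GHz), λ/4 is only 7.5 mm, so millimeter-scale differences in geometry can turn a bright pixel dark.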

Training AI models for SAR is demanding: they must reliably analyze SAR data to identify threats, classify vehicles, detect changes, and interpret environmental conditions, all from imagery formed by radio waves rather than visible light.

Building a reliable AI model requires large labeled datasets, and herein lies the challenge: SAR data is scarce. Unlike more common forms of data, large volumes of SAR imagery can’t be harvested from the internet, and even typical dataset providers lack access to the necessary sources. Labeled SAR data, which requires specialized annotation such as polarity, is scarcer still, further narrowing training data options.

What are the barriers to generating labeled SAR data?

- For Satellite-Based SAR: While satellites with SAR sensors are already in orbit, they serve critical missions and can’t easily be retasked for training data collection. Launching new satellites for this purpose is generally an operational nonstarter, so both the rate of new data acquisition and the distribution of what gets collected are tightly constrained.

- For Airborne SAR: Airborne SAR can be deployed, but it’s costly, time-consuming, and often impossible to coordinate over the right scenes at the right times.

- For Relevant Data Collection: SAR is typically used to monitor targets such as ships, military vehicles, and other large-scale or adversarial activities. These are difficult (and often inherently dangerous) to stage or revisit for data collection, especially under the operational conditions of interest.

- For Accurate Annotation: SAR imagery is inherently non-intuitive, and without corresponding optical references (that is, ground truth data), accurate annotation is difficult. Teams can end up with abundant SAR data but no reliable way to label it, creating a bottleneck for training effective models.

Fortunately, high-quality synthetic data offers a powerful way to overcome this bottleneck.

Falcon’s Virtual SAR Sensor for Synthetic SAR Dataset Generation

Video: Side-by-side view of SAR data generated in Falcon (left) and the source digital twin of an aircraft carrier in open water (right).

Falcon’s new virtual SAR sensor (a beta implementation arrives with the upcoming Falcon 5.3 release) physically simulates how SAR operates, generating accurate imagery along with ground truth and automatic, pixel-perfect labels. The sensor can also be tuned to match a specific real-world SAR system, so the synthetically generated training data closely aligns with that system’s output.

All vital parameters can be easily adjusted, including:

- Angular resolution (Azimuth) AND pixel size

- Range resolution AND pixel size

- Max and min intensities (in dB)

- Noise
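One plausible reading of the min/max intensity setting is a display window in decibels. The sketch below shows a common way such a window and a noise term could be applied when turning complex SAR pixels into an 8-bit image. The function `to_display` and its parameters are hypothetical (not Falcon’s Python API), and the multiplicative exponential speckle model, standard for single-look fully developed speckle, is only an assumption about what a noise control might do.

```python
import math
import random


def to_display(pixels, min_db, max_db, speckle=True, seed=0):
    """Map complex SAR pixels to 0..255 grayscale through a dB window.

    Hypothetical helper: min_db/max_db stand in for a 'max and min
    intensities (in dB)' setting; the speckle model is an assumption.
    """
    rng = random.Random(seed)
    out = []
    for p in pixels:
        intensity = abs(p) ** 2  # power of the complex sample
        if speckle:
            # Single-look speckle: scale intensity by a unit-mean exponential draw
            intensity *= rng.expovariate(1.0)
        db = 10 * math.log10(intensity) if intensity > 0 else min_db
        db = min(max(db, min_db), max_db)  # clamp to the display window
        out.append(round(255 * (db - min_db) / (max_db - min_db)))
    return out


print(to_display([0j, 0.1 + 0j, 1 + 0j, 10 + 0j], min_db=-30, max_db=10, speckle=False))
# [0, 64, 191, 255]
```

Windowing in dB rather than linear units keeps weak clutter and strong returns visible in the same image, since SAR intensities routinely span several orders of magnitude.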

Achieving this level of customizable, high-fidelity synthetic SAR data required solving several technical challenges. Unlike an RGB pixel, which stores only intensity values, each SAR pixel carries complex-valued information, encoding both amplitude (A) and phase (θ). Because SAR is a coherent, long-wavelength modality, the standard rendering techniques used in game engines such as Unreal do not apply out of the box.
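A minimal illustration of why phase matters: two equal-amplitude echoes with opposite phase land in the same pixel. Adding their intensities, roughly the way a conventional renderer combines light contributions, yields a bright pixel; adding the complex values, as a coherent radar does, cancels them. The echo values are made up for illustration.

```python
import cmath
import math

# Two hypothetical echoes landing in the same pixel:
# equal amplitude A = 1, phases theta = 0 and theta = pi
echo_a = cmath.rect(1.0, 0.0)
echo_b = cmath.rect(1.0, math.pi)

# Recover (A, theta) from a complex sample
amp_b, phase_b = cmath.polar(echo_b)
print(f"echo_b: A = {amp_b:.3f}, theta = {phase_b:.3f} rad")  # A = 1.000, theta = 3.142 rad

# Intensity-only accumulation: phase is discarded before summing
incoherent = abs(echo_a) ** 2 + abs(echo_b) ** 2

# Complex accumulation: sum the fields first, then take the power
coherent = abs(echo_a + echo_b) ** 2

print(f"incoherent intensity: {incoherent:.3f}")  # 2.000
print(f"coherent intensity:   {coherent:.3f}")    # 0.000
```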

To overcome the limitations of standard rendering, Duality's team created a custom fork of Unreal Engine’s raytracer (made possible by an OEM agreement with Epic Games) and used it to build a Falcon-specific, GPU-accelerated raytraced SAR model, exposed via Falcon’s Python API. Pairing the engine’s raytracing with a dedicated radar model enables precise control over radar wave propagation, resulting in physically grounded SAR simulation with powerful, user-friendly customization.
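To give a feel for what a raytraced SAR model must do beyond ordinary rendering, here is a toy one-dimensional sketch: each ray hit contributes a complex echo whose phase depends on its two-way slant range, and hits are accumulated coherently into range bins sized by the pixel spacing. This is not Duality’s implementation; `bin_ray_hits` and the `(slant_range_m, amplitude)` hit format are hypothetical, and a real simulator would also resolve azimuth, model antenna patterns, and process the synthetic aperture.

```python
import cmath
import math


def bin_ray_hits(hits, wavelength_m, range_min_m, pixel_size_m, n_bins):
    """Coherently accumulate ray hits into complex slant-range bins.

    hits: iterable of (slant_range_m, amplitude) pairs (hypothetical format).
    Hits falling outside [range_min_m, range_min_m + n_bins * pixel_size_m)
    are ignored.
    """
    bins = [0j] * n_bins
    for r, a in hits:
        idx = int((r - range_min_m) // pixel_size_m)
        if 0 <= idx < n_bins:
            # Two-way propagation: phase advances by 4*pi*R/lambda
            bins[idx] += a * cmath.exp(-1j * 4 * math.pi * r / wavelength_m)
    return bins


hits = [
    (1000.2, 1.0),     # scatterer in bin 0
    (1000.2075, 1.0),  # same bin, lambda/4 farther: round-trip phase shift of pi
    (1002.5, 1.0),     # isolated scatterer in bin 2
]
bins = bin_ray_hits(hits, wavelength_m=0.03, range_min_m=1000.0, pixel_size_m=1.0, n_bins=4)
print([round(abs(b), 3) for b in bins])  # [0.0, 0.0, 1.0, 0.0]
```

Two scatterers in the same cell cancel even though each alone would be bright, which is exactly the behavior an intensity-only renderer cannot reproduce.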

Mission-Specific Synthetic SAR Data—Instant, Flexible, and Customizable

The virtual SAR sensor works seamlessly with Falcon’s digital twin catalog and scenario authoring tools, so any scenario built in Falcon can immediately be used for synthetic SAR data generation. No custom integration or external workflows are required!

Supporting platforms from low-flying drones to satellites in low Earth orbit, the new virtual sensor is also primed to take full advantage of Falcon’s simulation tools, including accurate world modeling via the GIS pipeline, an expansive digital twin library, intuitive scenario authoring, and the flexible Python API. Together, these tools enable rapid generation of mission-specific SAR datasets and open new frontiers in AI-driven perception with this powerful sensing modality.

Curious how Duality can help your team?

Contact us to chat about how your company could work with Duality, or check out our free subscription for access to the binary and learning resources you need to start using synthetic data to train AI models today!