5 Things You Need to Know About Moonshot AI and Kimi K2, the New #1 Model on the Hub

You’ve probably never heard of Moonshot AI, but their latest release, Kimi K2, became the #1 trending model on Hugging Face in less than 24 hours, climbing past thousands of competitors to claim the top spot. This Chinese startup quietly built a model that outperforms GPT-4.1 on coding benchmarks and open-sourced it on the Hub.

Here are key facts to better understand this startup and their model, including insights from two interviews in Chinese translated by Tiezhen.

What Is Moonshot AI and How Did They Go from Zero to $3.3 Billion in 18 Months?

Moonshot AI was founded in March 2023 by three Tsinghua University alumni: Yang Zhilin, Zhou Xinyu, and Wu Yuxin. After OpenAI’s ChatGPT breakthrough in November 2022, Yang traveled back to the US and became convinced it was time to "ride the wave" in generative AI. "ChatGPT’s release was thrilling. I sensed many variables would shift: capital and talent, the two core factors in AI. If those variables moved, there was a real chance to build a company from zero to one whose sole purpose was AGI," Yang said in a WeChat interview (translated here).

He raised $60 million and built a 40-person AI team in three months.

Moonshot AI took off after launching its ChatGPT-style chatbot, Kimi, in October 2023. It became popular in China for handling massive text inputs—up to 2 million characters.

In less than two years, the startup has raised approximately $1.27 billion across two funding rounds, bringing it to a $3.3 billion valuation. Major backers include Alibaba, Tencent, Meituan, and HongShan (formerly Sequoia China).

As an aside, the company’s Chinese name, 月之暗面 (Yuè Zhī Ànmiàn), translates to *"The Dark Side of the Moon"*, a Pink Floyd tribute that reflects CEO Yang Zhilin’s love of classic rock and the company’s mission to explore uncharted AI territory. The theme carries over to the names of its offices.

Who Is Yang Zhilin, CEO of Moonshot AI?

At 31, Yang, a Carnegie Mellon PhD, has co-authored foundational papers on Transformer-XL and XLNet, research that influenced many of the language models in use today. He also worked at Meta AI and Google Brain during his PhD.

Yang’s vision focuses on Artificial General Intelligence: "AGI is the only thing that matters over the next decade."

AI isn’t about finding product-market fit in one or two years; it’s about changing the world over ten to twenty. Those are two different mindsets.

— Yang Zhilin

This drives Moonshot’s focus on three key areas: developing "lossless long-context" capabilities, building toward artificial general intelligence, and creating consumer-facing applications rather than enterprise tools.

We hope to become, in the next era, a company that combines OpenAI’s technical idealism with ByteDance’s commercial philosophy.

— Yang Zhilin

His co-founders bring complementary skills that help Moonshot compete with larger players: Zhou Xinyu has deep experience deploying neural networks on resource-constrained hardware (from stints at Hulu and Tencent), while Wu Yuxin brings experience from Google Brain's foundation models team and Meta AI Research.

How Does Kimi K2's Performance Stack Up Against GPT-4.1 and Other Industry Leaders?

Kimi K2 is a 1-trillion-parameter model built on a mixture-of-experts (MoE) architecture that activates only 32 billion parameters per token. The model includes 384 specialized experts with dynamic routing, 61 layers, 64 attention heads, and a 128,000-token context window.
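
To make "activates only 32 billion parameters per token" concrete, here is a minimal sketch of top-k expert routing in plain Python. It assumes 8 of the 384 experts are selected per token (the figure listed on the public model card) and uses a plain softmax over the selected experts' scores. Kimi K2's actual gating function and its shared expert are not modeled, and `route_token` is a hypothetical helper.

```python
import math
import random

NUM_EXPERTS = 384  # routed experts, as stated above
TOP_K = 8          # assumption: experts activated per token (per the public model card)


def route_token(gate_logits, top_k=TOP_K):
    """Hypothetical top-k router: pick the top_k experts for one token.

    Returns (expert_index, weight) pairs. The weights are a softmax over the
    selected experts only, so they sum to 1. Only these experts' feed-forward
    weights run for this token, which is why active parameters << total.
    """
    if top_k > len(gate_logits):
        raise ValueError("top_k cannot exceed the number of experts")
    chosen = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:top_k]
    peak = max(gate_logits[i] for i in chosen)  # subtract the max for numerical stability
    exps = [math.exp(gate_logits[i] - peak) for i in chosen]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(chosen, exps)]


def active_fraction(active_params, total_params):
    """Share of the model's parameters used for a single token."""
    return active_params / total_params


random.seed(0)
logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]  # stand-in router scores
routing = route_token(logits)
print(len(routing), "experts, weights sum to", round(sum(w for _, w in routing), 6))
print(f"active share: {active_fraction(32e9, 1e12):.1%}")
```

With the figures above, only about 3.2% of the weights (32B of 1T) take part in processing any single token.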

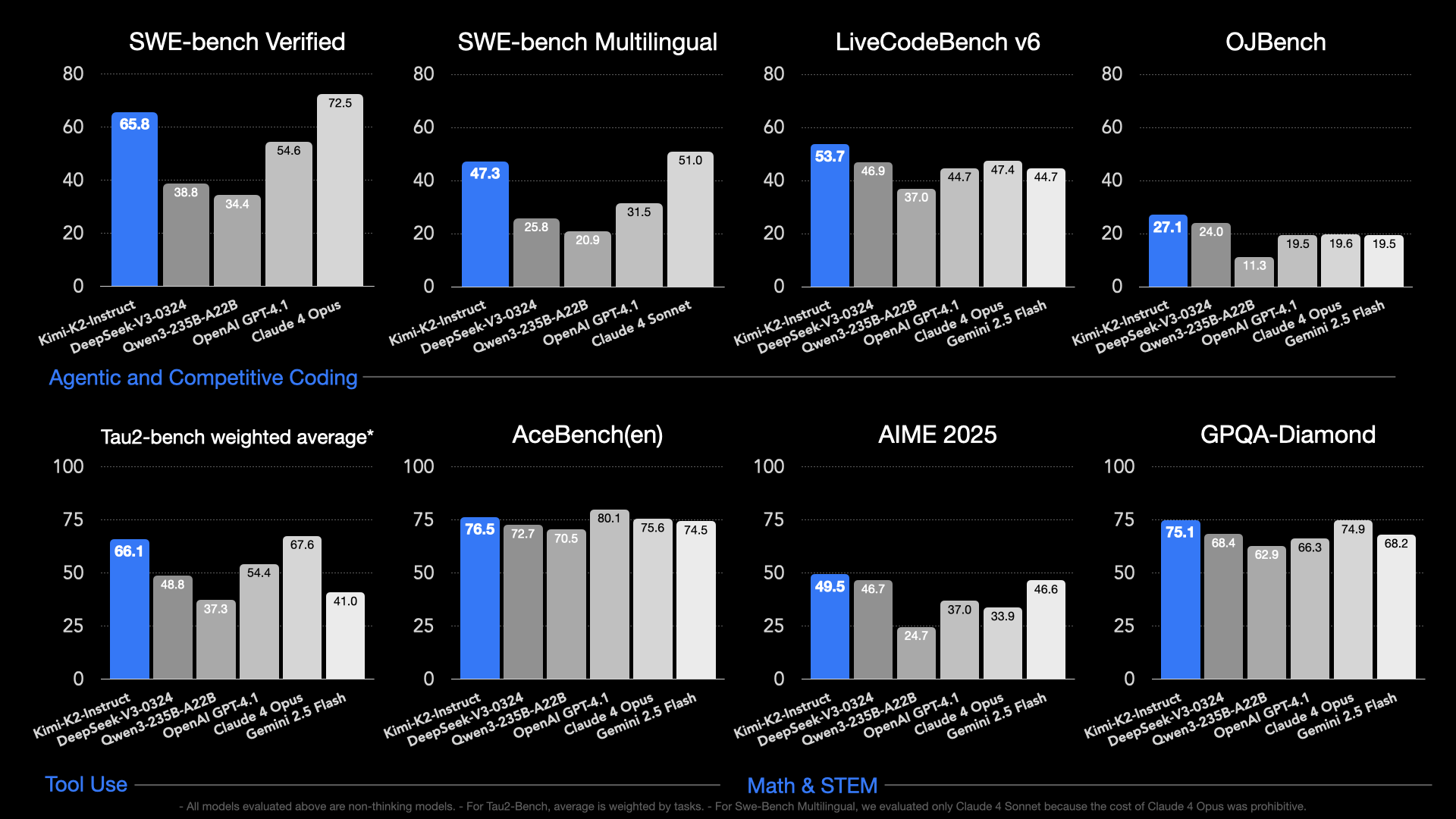

This model outperforms GPT-4.1, Claude Opus, and DeepSeek V3 on several benchmarks. For example:

- LiveCodeBench v6 (coding): Kimi K2 hits 53.7% accuracy vs GPT-4.1's 44.7%

- SWE-bench Verified (software engineering): 65.8% single-attempt accuracy, 71.6% with multiple attempts

- MATH-500 (math problems): 97.4% accuracy

Moonshot also showcased the model’s agentic capabilities in demos, including:

- A 16-step autonomous salary analysis with interactive charts

- A 17-step concert plan using multiple tool calls across search, calendar, email, and flight booking apps

What Is the Muon Optimizer and Why Does It Change Everything About AI Training?

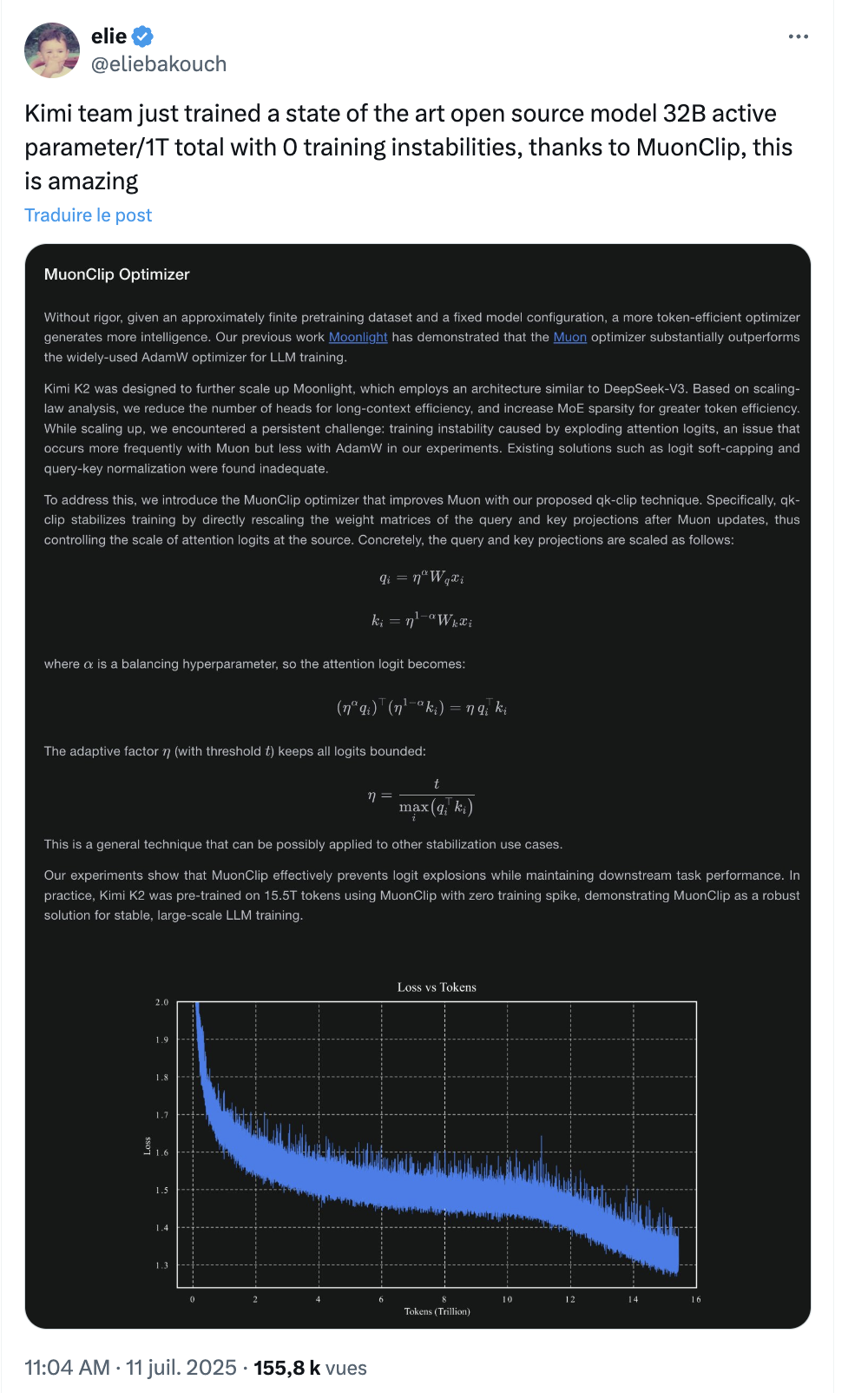

The real innovation behind Kimi K2 is its use of the Muon optimizer, developed by AI researchers including Keller Jordan. At the beginning of 2025, Moonshot AI and UCLA researchers published a paper showing that Muon scales to LLM training. Traditional optimizers such as AdamW tend to produce weight updates dominated by a few directions, like a compass that keeps pointing one way. Muon addresses this with matrix orthogonalization: it replaces each update matrix with the nearest (semi-)orthogonal matrix, so rarer directions get as much weight as dominant ones. This helps the model explore a wider solution space instead of getting stuck.
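
To illustrate what "matrix orthogonalization" means, here is a minimal pure-Python sketch. It uses the classic cubic Newton-Schulz iteration, which pushes every singular value of the normalized update toward 1 and so approximates the orthogonal factor U Vᵀ of its SVD. This is a simplification, not Moonshot's code: Keller Jordan's reference Muon implementation uses a tuned quintic polynomial for a few iterations in bfloat16, and the learning rate, momentum value, and the hypothetical `muon_step` helper below are illustrative assumptions.

```python
import math


def matmul(a, b):
    """Multiply matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def orthogonalize(g, steps=30):
    """Approximate U V^T, where g = U S V^T, with the cubic Newton-Schulz iteration.

    Dividing by the Frobenius norm puts every singular value in (0, 1], inside
    the iteration's convergence range (0, sqrt(3)). Each step maps a singular
    value s to 1.5*s - 0.5*s**3, pushing it toward 1 while keeping U and V.
    """
    norm = math.sqrt(sum(v * v for row in g for v in row))
    if norm == 0.0:
        return [row[:] for row in g]  # nothing to orthogonalize
    x = [[v / norm for v in row] for row in g]
    for _ in range(steps):
        xxtx = matmul(matmul(x, transpose(x)), x)
        x = [[1.5 * a - 0.5 * b for a, b in zip(ra, rb)] for ra, rb in zip(x, xxtx)]
    return x


def muon_step(weight, grad, momentum, lr=0.02, beta=0.95):
    """Hypothetical, simplified Muon-style update for one weight matrix.

    Accumulates momentum, orthogonalizes it, and steps along the result.
    Omits Nesterov momentum, shape-dependent scaling, and weight decay.
    """
    momentum = [[beta * m + g for m, g in zip(rm, rg)] for rm, rg in zip(momentum, grad)]
    update = orthogonalize(momentum)
    weight = [[w - lr * u for w, u in zip(rw, ru)] for rw, ru in zip(weight, update)]
    return weight, momentum


# A badly conditioned "gradient": one direction is 12x stronger than the other.
g = [[3.0, 0.0], [0.0, 0.25]]
print(orthogonalize(g))  # close to the identity: both directions now get equal weight
```

The same call on a non-diagonal matrix such as `[[1.0, 2.0], [3.0, 4.0]]` returns a matrix whose product with its own transpose is close to the identity, which is what "orthogonalized" means here.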

Key results:

- Roughly 2x the computational efficiency of AdamW

- 50% less memory usage

- No loss spikes while pre-training a trillion-parameter model on 15.5 trillion tokens
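
One plausible reading of the memory figure, which we assume here rather than take from Moonshot's paper, is optimizer state: AdamW keeps two extra values per parameter (first and second moments), while Muon keeps a single momentum buffer. Here is a back-of-the-envelope sketch for a 1-trillion-parameter model, assuming fp32 optimizer state and Muon applied to every parameter. In practice Muon is typically used only for 2D hidden weight matrices, with AdamW handling embeddings and the output layer, so real savings would be somewhat smaller.

```python
BYTES_PER_VALUE = 4  # assumption: optimizer state stored in fp32


def optimizer_state_bytes(num_params, buffers_per_param, bytes_per_value=BYTES_PER_VALUE):
    """Memory taken by an optimizer's per-parameter buffers."""
    return num_params * buffers_per_param * bytes_per_value


TOTAL_PARAMS = 10**12  # Kimi K2's total parameter count

adamw_bytes = optimizer_state_bytes(TOTAL_PARAMS, 2)  # first + second moments
muon_bytes = optimizer_state_bytes(TOTAL_PARAMS, 1)   # one momentum buffer

print(f"AdamW state: {adamw_bytes / 1e12:.0f} TB, Muon state: {muon_bytes / 1e12:.0f} TB")
print(f"saving: {1 - muon_bytes / adamw_bytes:.0%}")
```

Under these assumptions the optimizer state drops from 8 TB to 4 TB, a 50% reduction.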

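The enhanced variant, MuonClip, adds a technique called QK-Clip that rescales the query and key projection weights whenever attention logits grow too large, preventing the training instability that otherwise appears at massive scale. This made it practical to train a trillion-parameter model with Muon.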
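
MuonClip's stabilizer, as Moonshot's technical blog describes it, is QK-Clip: after an optimizer step, if the largest attention logit exceeds a threshold t, the query projection is scaled by η^α and the key projection by η^(1 - α), with η = t / (largest logit). Because every logit q·k is bilinear in the two projections, this multiplies all logits by η and caps the maximum at t. The sketch below is a simplified single-head version in plain Python. The threshold value and helper names are hypothetical, and the production implementation differs in details.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def matvec(w, x):
    """Apply a projection matrix (list of rows) to a vector."""
    return [dot(row, x) for row in w]


def max_attention_logit(w_q, w_k, xs):
    """Largest raw logit q_i . k_j over all token pairs (before 1/sqrt(d) scaling)."""
    qs = [matvec(w_q, x) for x in xs]
    ks = [matvec(w_k, x) for x in xs]
    return max(dot(q, k) for q in qs for k in ks)


def qk_clip(w_q, w_k, xs, threshold, alpha=0.5):
    """Hypothetical single-head QK-Clip: rescale W_q and W_k so the max logit <= threshold.

    Logits are bilinear in (W_q, W_k), so scaling W_q by eta**alpha and W_k by
    eta**(1 - alpha) multiplies every logit by eta.
    """
    s_max = max_attention_logit(w_q, w_k, xs)
    if s_max <= threshold:
        return w_q, w_k, 1.0  # already within bounds: leave weights untouched
    eta = threshold / s_max
    new_q = [[eta**alpha * v for v in row] for row in w_q]
    new_k = [[eta ** (1 - alpha) * v for v in row] for row in w_k]
    return new_q, new_k, eta


w_q = [[2.0, 0.0], [0.0, 2.0]]
w_k = [[2.0, 0.0], [0.0, 2.0]]
tokens = [[3.0, 1.0], [1.0, 2.0]]
print(max_attention_logit(w_q, w_k, tokens))  # 40.0
new_q, new_k, eta = qk_clip(w_q, w_k, tokens, threshold=10.0)
print(eta, max_attention_logit(new_q, new_k, tokens))  # 0.25 10.0
```

Splitting the correction between queries and keys (alpha = 0.5 here) avoids shrinking one projection much more than the other.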

By the way, Muon stands for: MomentUm Orthogonalized by Newton-Schulz.
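
Spelled out, the name maps onto the update rule roughly as follows. This sketches the formulation in Keller Jordan's reference implementation, with Nesterov momentum and shape-dependent scaling omitted. For a weight matrix $W$ with gradient $G_t$, learning rate $\eta$, and momentum coefficient $\mu$:

$$
M_t = \mu M_{t-1} + G_t, \qquad O_t = \mathrm{NS}(M_t) \approx U V^\top \ \text{ where } M_t = U \Sigma V^\top, \qquad W_t = W_{t-1} - \eta\, O_t
$$

The Newton-Schulz step $\mathrm{NS}$ starts from $X_0 = M_t / \lVert M_t \rVert_F$ and applies a quintic polynomial five times with tuned coefficients:

$$
X_{k+1} = a X_k + b \left(X_k X_k^\top\right) X_k + c \left(X_k X_k^\top\right)^2 X_k, \qquad (a, b, c) = (3.4445,\ -4.7750,\ 2.0315)
$$

These coefficients trade exact convergence for speed: the singular values land near 1 rather than exactly at 1, which is close enough for the optimizer's purposes.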

How Is Moonshot’s Research Philosophy Different?

Kimi K2 reflects a philosophy around scaling and long-term design. As Yang puts it: "The most important thing is to think from the end." Their roadmap is shaped by a belief that architecture should evolve to match not just human needs, but a new computational paradigm.

"Lossless long context is everything," argues Yang. "If you had a context length of one billion, none of today’s problems would remain."

When token space is large enough, it becomes a new general-purpose computer — a universal world model.

— Yang Zhilin

Even fine-tuning, in Moonshot’s worldview, may be temporary: "In the future, the model will not need fine-tuning; instead, through powerful contextual consistency and instruction-following it will solve problems."

How Is Moonshot's Open-Source Strategy Disrupting AI Economics and What Does This Mean for You?

Moonshot is open-sourcing Kimi K2 and offering API access at just $0.15 per million input tokens and $2.50 per million output tokens—a bold move undercutting both OpenAI and Anthropic, as noted by VentureBeat.
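
For a quick budget estimate, here is a small calculator using the per-million-token prices quoted above. The rates are hard-coded assumptions that may change, and Moonshot's platform may price cached and uncached input differently, so check current pricing before relying on the numbers.

```python
INPUT_USD_PER_MILLION = 0.15   # input price quoted above
OUTPUT_USD_PER_MILLION = 2.50  # output price quoted above


def api_cost_usd(input_tokens, output_tokens,
                 input_rate=INPUT_USD_PER_MILLION, output_rate=OUTPUT_USD_PER_MILLION):
    """Estimated cost in USD for a given number of input and output tokens."""
    return (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000


# Example workload: 10M prompt tokens and 2M generated tokens.
print(f"${api_cost_usd(10_000_000, 2_000_000):.2f}")
```

For that workload the estimate comes to about $6.50, most of it from output tokens, which cost roughly 17x more than input at these rates.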

The model can be:

- Run directly from the Hugging Face Hub using Novita as inference provider

- Deployed locally using inference engines like vLLM or SGLang
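
For the first option, here is a minimal sketch using the `huggingface_hub` Python client. It assumes a recent version with Inference Providers support, the `moonshotai/Kimi-K2-Instruct` checkpoint, and a Hugging Face token in the `HF_TOKEN` environment variable. The `build_messages` and `ask_kimi` helpers are hypothetical names for illustration.

```python
import os

MODEL_ID = "moonshotai/Kimi-K2-Instruct"  # instruct checkpoint on the Hub


def build_messages(prompt, system="You are a helpful assistant."):
    """Chat-format message list for a single-turn request."""
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": prompt},
    ]


def ask_kimi(prompt, provider="novita", max_tokens=512):
    """Send one prompt to Kimi K2 through an inference provider and return the reply text."""
    # Imported lazily so build_messages works even without the package installed.
    from huggingface_hub import InferenceClient

    client = InferenceClient(provider=provider, api_key=os.environ["HF_TOKEN"])
    completion = client.chat.completions.create(
        model=MODEL_ID,
        messages=build_messages(prompt),
        max_tokens=max_tokens,
    )
    return completion.choices[0].message.content
```

With `HF_TOKEN` set, `ask_kimi("Summarize the Muon optimizer in one sentence.")` returns the model's reply as a string. For local deployment, vLLM and SGLang both expose OpenAI-compatible servers, so a similar chat-completions request works against them.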

Notably, Moonshot moved from closed-source to open-source. A Moonshot team member shared a post explaining the decision, citing reasons such as faster deployment and raising the bar for competition.

Moonshot’s move came after Baidu’s decision to open-source its Ernie model. "Baidu has always been very supportive of its proprietary business model and was vocal against open-source, but disruptors like DeepSeek have proven that open-source models can be as competitive and reliable as proprietary ones," noted analyst Lian Jye Su on CNBC.

Moonshot’s long-term view also gives them a distinct stance on open vs closed models. They’ve publicly stated their focus is on unlocking scale and accelerating use cases—not chasing leaderboard dominance. In Yang’s words: "Users are the only real leaderboard."

How to Experiment with Kimi K2 Today

Try it on Hugging Face with inference providers

Explore agentic AI workflows: → Consider use cases where AI can autonomously complete tasks, not just assist

Try "vibe-coding" with Kimi K2: → Use it with AnyCoder

Go deeper technically: → Moonshot’s technical blog → Muon research paper on arXiv

Go further

Follow Adina and Tiezhen, Hugging Face experts who closely track the Chinese AI ecosystem

Read these two interviews with Yang Zhilin, translated by Tiezhen: → How Can a Brand-New AGI Company Surpass OpenAI? → Kimi: March toward the endless, unknown snow-capped mountain

Thanks to Brigitte for her comments on the article.