Argunauts Training Phase III: RLVF with Hindsight Instruction Relabeling, Self-Correction and Dynamic Curriculum

Introduction

In this article I explain how I'm using reinforcement learning with verifiable rewards (RLVF) to improve critical thinking and argument analysis skills in LLMs. The article, which is a snapshot of my work in progress, continues a series of posts about the Argunauts project:

☑️ Argunauts: Motivation and Goals ↪

☑️ Phase I: SFT Training ↪

☑️ Phase II: Selfplay Finetuning Line-By-Line ↪

✅ Phase III: ✨RLVF with Hindsight Instruction Relabeling, Self-Correction and Dynamic Curriculum✨

Using RLVF to improve argument analysis skills of open LLMs poses four major challenges:

- We need to define strong and verifiable rewards for the diverse tasks involved in argument mapping and logical argument analysis.

- Training will have to handle multiple rewards for multiple tasks of varying difficulty.

- Focusing on verifiable rewards, especially ones that can be formally or symbolically verified, raises the risk of reward hacking.

- Our base models achieve zero accuracy in some tasks they are supposed to learn.

I'm trying to address these challenges as follows:

- 🏃♀️ identify – very much in the spirit of deepa2 – the diverse core tasks involved in argument mapping and argument analysis;

- ⛹️♀️ design, based on 1, additional complex tasks that involve the coherent and coordinated solution of multiple core tasks;

- 🌲 map the requirements between the diverse tasks (from 1 and 2) in a didactic dependencies DAG;

- 🕵️♀️ use Argdown syntax, logical consistency and validity, and coherence constraints governing complex tasks in order to build verifiable binary rewards as well as to generate detailed natural language error messages for arbitrary candidate solutions;

- 🛜 take online DPO as a simple and clean RL paradigm that can be flexibly extended (as explained in 6, 7, and 8 below);

- 👀 adapt Hindsight Instruction Relabeling (HIR) to DPO, using natural language error messages (from 4) to allow models to learn from negative feedback and prevent over-generalization from partial/imperfect rewards;

- 🪞 integrate a multi-step self-correction loop in the RL flow to produce additional preference pairs with generated reviews or revisions;

- 🔄 while training, continuously adjust the training curriculum as a function of the model's current performance on each task (from 1 and 2) and the tasks' requirements according to the didactic dependencies DAG (3).

Tasks and Exercises for Argument Analysis

The four core tasks cover argumentative text annotation, argument mapping, informal argument reconstruction, and logical argument reconstruction:

CORE TASK arganno: Argumentative annotation of a source text.

Example item

| Problem | Solution |

| Annotate: Citizens are now faced with evidence of the growing power of organized moneyed interests in the electoral system at the same time that the nation is more aware than ever that the inequality among income groups has grown dramatically and economic difficulties are persistent. The election system is being reshaped by the Super PACs and the greatly increased power of those who contribute to them to choose the candidates who best suit their purposes. |

Citizens are now faced with <PROP id=1>evidence of the growing power of organized moneyed interests in the electoral system</PROP> at the same time that the nation is more aware than ever that the inequality among income groups has grown dramatically and economic difficulties are persistent. <PROP id=2 supports=1>The election system is being reshaped by the Super PACs</PROP> and the greatly increased power of those who contribute to them to choose the candidates who best suit their purposes.

|

Verifiable rewards (selection)

- Compliance with XML annotation scheme

- Source text integrity

- Unique IDs for all annotated propositions

CORE TASK argmap: Reconstructing a source text as Argdown argument map.

Example item

| Problem | Solution |

| Create argument map: Citizens are now faced with evidence of the growing power of organized moneyed interests in the electoral system at the same time that the nation is more aware than ever that the inequality among income groups has grown dramatically and economic difficulties are persistent. The election system is being reshaped by the Super PACs and the greatly increased power of those who contribute to them to choose the candidates who best suit their purposes. |

```argdown

|

Verifiable rewards (selection)

- Fenced argdown code block

- Valid Argdown syntax

- No premise conclusion structure

CORE TASK infreco: Informally reconstructing an argument presented in a source text.

Example item

| Problem | Solution |

| Informally reconstruct: Citizens are now faced with evidence of the growing power of organized moneyed interests in the electoral system at the same time that the nation is more aware than ever that the inequality among income groups has grown dramatically and economic difficulties are persistent. The election system is being reshaped by the Super PACs and the greatly increased power of those who contribute to them to choose the candidates who best suit their purposes. |

```argdown

|

Verifiable rewards (selection)

- Valid Argdown syntax

- Single argument reconstructed as premise conclusion structure

- Valid yaml inference metadata

CORE TASK logreco: Logically reconstructing an argument presented in a source text.

Example item

| Problem | Solution |

| Formally reconstruct: Citizens are now faced with evidence of the growing power of organized moneyed interests in the electoral system at the same time that the nation is more aware than ever that the inequality among income groups has grown dramatically and economic difficulties are persistent. The election system is being reshaped by the Super PACs and the greatly increased power of those who contribute to them to choose the candidates who best suit their purposes. |

```argdown

|

Verifiable rewards (selection)

- Valid Argdown syntax

- Logical formalizations are well-formed

- Deductive validity of sub-inferences

- No logically irrelevant premises

Based on the core tasks above, I define six compound tasks that involve the coordinated solution of multiple core tasks. The compound tasks are designed to provide additional verifiable semantic constraints by requiring that a solution's different parts cohere with each other.

COMPOUND TASK argmap_plus_arganno: Argumentative annotation of a source text and its corresponding reconstruction as an argument map.

Example item

| Problem | Solution |

| Annotate and reconstruct as map: Citizens are now faced with evidence of the growing power of organized moneyed interests in the electoral system at the same time that the nation is more aware than ever that the inequality among income groups has grown dramatically and economic difficulties are persistent. The election system is being reshaped by the Super PACs and the greatly increased power of those who contribute to them to choose the candidates who best suit their purposes. |

::: Citizens are now faced with <PROP id=1 map_label="Moneyed interests">evidence of the growing power of organized moneyed interests in the electoral system</PROP> at the same time that the nation is more aware than ever that the inequality among income groups has grown dramatically and economic difficulties are persistent. <PROP id=2 supports=1 map_label="Super PACs">The election system is being reshaped by the Super PACs</PROP> and the greatly increased power of those who contribute to them to choose the candidates who best suit their purposes.:::

```argdown

|

Verifiable rewards (coherence)

- Every annotated propositions corresponds to and consistently references a node in the argument map.

- Every node in the argument map corresponds to and consistently references an annotated proposition.

- The annotated support and attack relation match the sketched dialectical relations in the argument map.

COMPOUND TASK arganno_plus_infreco: Argumentative annotation of a source text and corresponding informal reconstruction of the argument in standard form.

Example item

| Problem | Solution |

| Annotate and reconstruct as map: Citizens are now faced with evidence of the growing power of organized moneyed interests in the electoral system at the same time that the nation is more aware than ever that the inequality among income groups has grown dramatically and economic difficulties are persistent. The election system is being reshaped by the Super PACs and the greatly increased power of those who contribute to them to choose the candidates who best suit their purposes. |

::: Citizens are now faced with <PROP id=1 reco_label="C3">evidence of the growing power of organized moneyed interests in the electoral system</PROP> at the same time that the nation is more aware than ever that the inequality among income groups has grown dramatically and economic difficulties are persistent. <PROP id=2 supports=1 reco_label="P1">The election system is being reshaped by the Super PACs</PROP> and the greatly increased power of those who contribute to them to choose the candidates who best suit their purposes.:::

```argdown

|

Verfiable rewards (coherence)

- Every annotated proposition corresponds to and consistently references a statement (conclusion or premise) in the reconstructed argument.

- Some premises / conclusions in the argument correspond to and consistently reference annotated propositions.

- The annotated support relations match the argument's inferences.

COMPOUND TASK arganno_plus_logreco: Argumentative annotation of a source text and corresponding logical reconstruction of the argument in standard form.

Example item

| Problem | Solution |

| Annotate and logically reconstruct: Citizens are now faced with evidence of the growing power of organized moneyed interests in the electoral system at the same time that the nation is more aware than ever that the inequality among income groups has grown dramatically and economic difficulties are persistent. The election system is being reshaped by the Super PACs and the greatly increased power of those who contribute to them to choose the candidates who best suit their purposes. |

::: Citizens are now faced with <PROP id=1 reco_label="C4">evidence of the growing power of organized moneyed interests in the electoral system</PROP> at the same time that the nation is more aware than ever that the inequality among income groups has grown dramatically and economic difficulties are persistent. <PROP id=2 supports=1 reco_label="P1">The election system is being reshaped by the Super PACs</PROP> and the greatly increased power of those who contribute to them to choose the candidates who best suit their purposes.:::

```argdown

|

Verifiable rewards (coherence)

- Every annotated propositions corresponds to and consistently references a statement (conclusion or premise) in the reconstructed argument.

- Some premises / conclusions in the argument correspond to and consistently reference annotated propositions.

- The annotated support relations match the argument's inferences.

COMPOUND TASK argmap_plus_infreco: Argument mapping a source text and informally reconstructing all the corresponding arguments in standard form (premise-conclusion-structure).

Example item

| Problem | Solution |

| Map and reconstruct: Citizens are now faced with evidence of the growing power of organized moneyed interests in the electoral system at the same time that the nation is more aware than ever that the inequality among income groups has grown dramatically and economic difficulties are persistent. The election system is being reshaped by the Super PACs and the greatly increased power of those who contribute to them to choose the candidates who best suit their purposes. |

```argdown

|

Verifiable rewards (coherence)

- Every node in the argument map matches a proposition or argument in the informal reconstruction.

- Every dialectic relations sketched in the map is grounded by the inferential relations in the informal reconstruction.

COMPOUND TASK argmap_plus_logreco: Argument mapping a source text and formally reconstructing all the corresponding arguments as logically valid inferences in standard form.

Example item

| Problem | Solution |

| Map and logically reconstruct: Citizens are now faced with evidence of the growing power of organized moneyed interests in the electoral system at the same time that the nation is more aware than ever that the inequality among income groups has grown dramatically and economic difficulties are persistent. The election system is being reshaped by the Super PACs and the greatly increased power of those who contribute to them to choose the candidates who best suit their purposes. |

```argdown

|

Verifiable rewards (coherence)

- Every node in the argument map matches a proposition or argument in the logical reconstruction.

- Every dialectic relations sketched in the map is grounded by the inferential relations in the logical reconstruction.

COMPOUND TASK argmap_plus_arganno_plus_logreco: Argument mapping a source text, annotating it, and logically reconstructing all the corresponding arguments as logically valid inferences in standard form.

Example item

| Problem | Solution |

| Map, annotate and logically reconstruct: Citizens are now faced with evidence of the growing power of organized moneyed interests in the electoral system at the same time that the nation is more aware than ever that the inequality among income groups has grown dramatically and economic difficulties are persistent. The election system is being reshaped by the Super PACs and the greatly increased power of those who contribute to them to choose the candidates who best suit their purposes. |

::: Citizens are now faced with <PROP id=1 reco_label="C4">evidence of the growing power of organized moneyed interests in the electoral system</PROP> at the same time that the nation is more aware than ever that the inequality among income groups has grown dramatically and economic difficulties are persistent. <PROP id=2 supports=1 reco_label="P1">The election system is being reshaped by the Super PACs</PROP> and the greatly increased power of those who contribute to them to choose the candidates who best suit their purposes.:::

```argdown

|

Verifiable rewards (coherence)

- Map and logical reconstructions cohere with each other (s.a.)

- Logical reconstruction and annotation cohere with each other (s.a.)

- Annotation and map cohere with each other (s.a.)

Another four sequential tasks are supposed to help the model to learn more demanding tasks by starting from an argumentative analysis of a source text rather than from the plain source text itself.

SEQUENTIAL TASK arganno_from_argmap: Argumentative annotation of a source text given a corresponding argument map.

| Problem | Solution |

Annotate in view of map: Citizens are now faced with evidence of the growing power of organized moneyed interests in the electoral system at the same time that the nation is more aware than ever that the inequality among income groups has grown dramatically and economic difficulties are persistent. The election system is being reshaped by the Super PACs and the greatly increased power of those who contribute to them to choose the candidates who best suit their purposes.

```argdown

|

Citizens are now faced with <PROP id=1>evidence of the growing power of organized moneyed interests in the electoral system</PROP> at the same time that the nation is more aware than ever that the inequality among income groups has grown dramatically and economic difficulties are persistent. <PROP id=2 supports=1>The election system is being reshaped by the Super PACs</PROP> and the greatly increased power of those who contribute to them to choose the candidates who best suit their purposes.

|

SEQUENTIAL TASK argmap_from_arganno: Reconstruct an argumentatively annotated text as argument map.

| Problem | Solution |

Reconstruct as map: Citizens are now faced with <PROP id=1>evidence of the growing power of organized moneyed interests in the electoral system</PROP> at the same time that the nation is more aware than ever that the inequality among income groups has grown dramatically and economic difficulties are persistent. <PROP id=2 supports=1>The election system is being reshaped by the Super PACs</PROP> and the greatly increased power of those who contribute to them to choose the candidates who best suit their purposes.

|

```argdown

|

SEQUENTIAL TASK infreco_from_arganno: Informally reconstruct an argument in standard form given an argumentatively annotated text.

| Problem | Solution |

Reconstruct as map: Citizens are now faced with <PROP id=1>evidence of the growing power of organized moneyed interests in the electoral system</PROP> at the same time that the nation is more aware than ever that the inequality among income groups has grown dramatically and economic difficulties are persistent. <PROP id=2 supports=1>The election system is being reshaped by the Super PACs</PROP> and the greatly increased power of those who contribute to them to choose the candidates who best suit their purposes.

|

```argdown

|

SEQUENTIAL TASK logreco_from_infreco: Logically reconstruct an argument in standard form given an informal reconstruction.

| Problem | Solution |

Reconstruct formally: Citizens are now faced with evidence of the growing power of organized moneyed interests in the electoral system at the same time that the nation is more aware than ever that the inequality among income groups has grown dramatically and economic difficulties are persistent. The election system is being reshaped by the Super PACs and the greatly increased power of those who contribute to them to choose the candidates who best suit their purposes.

```argdown

|

```argdown

|

Didactic Dependencies and the Dynamic Training Curriculum

There exist dependencies between the different tasks which we may discern a priori:

- Mastering a compound task presupposes that the system masters the component tasks involved in the compound task.

- Mastering a sequential task presupposes, from a training perspective, that the system masters the antecedent task, because input for the sequential task will be auto-generated online by the model.

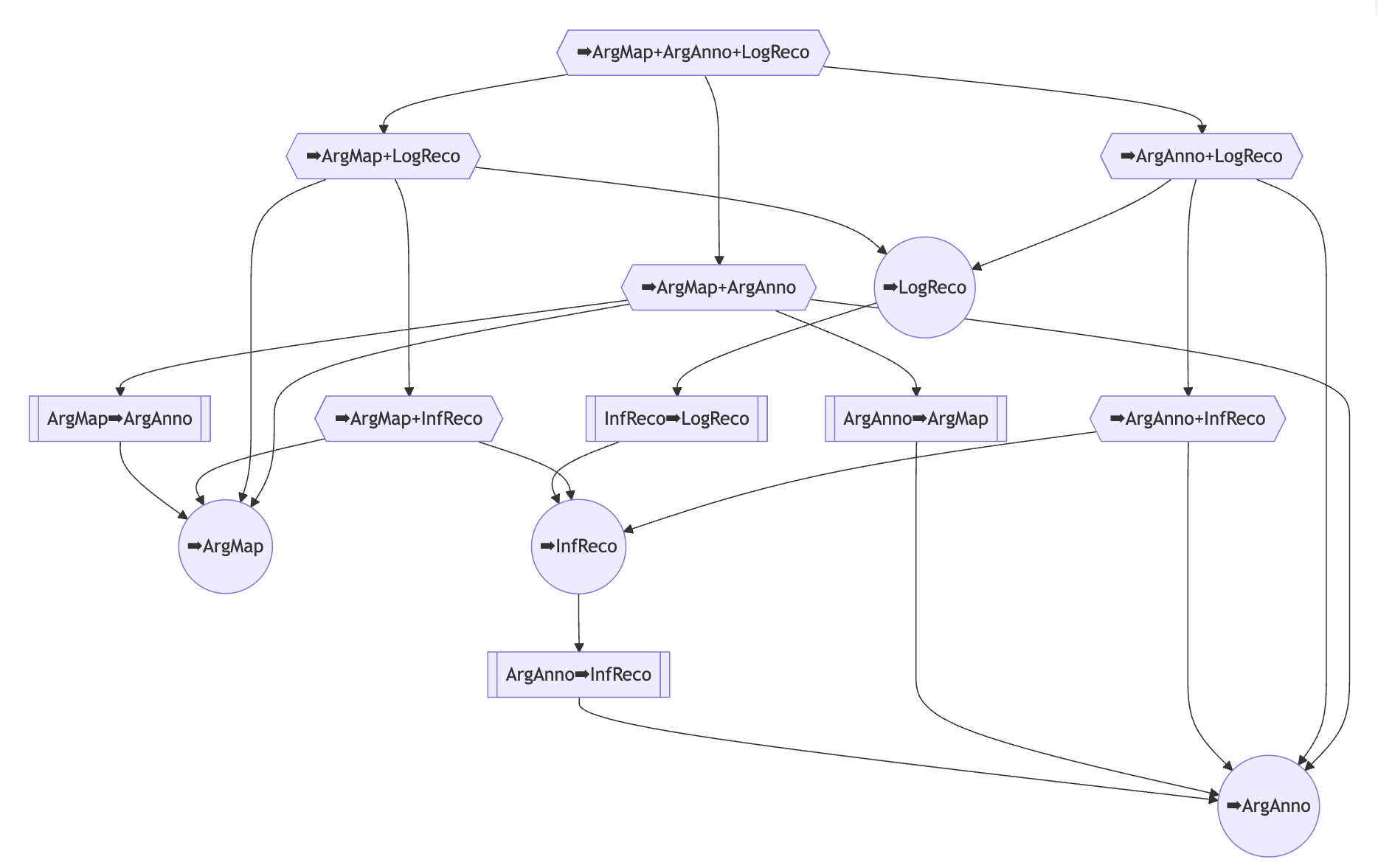

This gives us the following didactic dependencies DAG:

The top node represent the most demanding task, where the system is supposed to produce a syntactically and logically valid annotation, argument map, and formal argument reconstruction of a source text, all which are required to cohere with each other. At the bottom of the DAG, there are two root nodes representing the core tasks of argument mapping and argumentative text annotation. Node shape indicates the type of task, with circles representing core tasks, hexagons representing compound tasks, and rectangles representing sequential tasks.

Shi et al. (2025) demonstrate that RL benefits from carefully selecting tasks that are neither too easy nor too difficult for the model to solve. It is straightforward that we can exploit the didactic dependencies DAG above to dynamically determine the tasks a model should be trained on at a given time, taking into account the model's current performance on the tasks.

For example, it doesn't makes sense to exercise creating an argument map which coheres with an argumentative text annotation, as long as the model doesn't even know how to produce an syntactically well-formed Argdown argument map in the first place.

This is how I'm currently operationalizing this idea:

Let's say we have a total training budget of $N$ exercises per training epoch. We now need to detail how to dynamically allocate this budget over the different tasks $t=1,...,14$.

I reserve 10% of the training budget for the four core tasks, distributing it uniformly. The non-core tasks have zero initial budget.

The remaining budget is allocated recursively in function of the model's current performance $a_i;(i=1...14)$ in the diverse tasks. The basic idea is to train on tasks (i) which the model doesn't master yet, but (ii) which depend on tasks the model does master.

(Expand to see algorithm)

Let $b_i$ be the budget already allocated to task $i$; and let $i_1, ..., i_k$ denote the $k$ child tasks of an arbitrary task $i$ in the didactic dependencies DAG.

Let us also define the current maximum requirements failure rate, $\epsilon_i$, for task $i$ as:

if $i$ has any children and $\epsilon_i = 0$ otherwise.

Then, we may specify the budget allocation algorithm as follows:

- Transfer the free budget, which has not been initially allocated, to the top task(s).

- If budget $b$ is transferred to task $i$, then

- increase $i$'s budget $b_i$ by $(1 - \epsilon_i) \cdot b$;

- transfer the remaining budget $\epsilon_i \cdot b$ to the child tasks $i_1, ..., i_k$ of task $i$ in line with the current performance of each child $i_l$: $$\frac{1-a_{i_l}}{\sum_j(1-a_{i_j})} \epsilon_i \cdot b.$$

With this dynamic training curriculum, we shall expect the model to first train on simple / core tasks, and then gradually move to ever more demanding tasks.

Hindsight Instruction Relabeling Direct Preference Optimization (HIRPO)

Hindsight Instruction Relabeling is an elegant RLVR approach originally proposed by Zhang et al (2023).

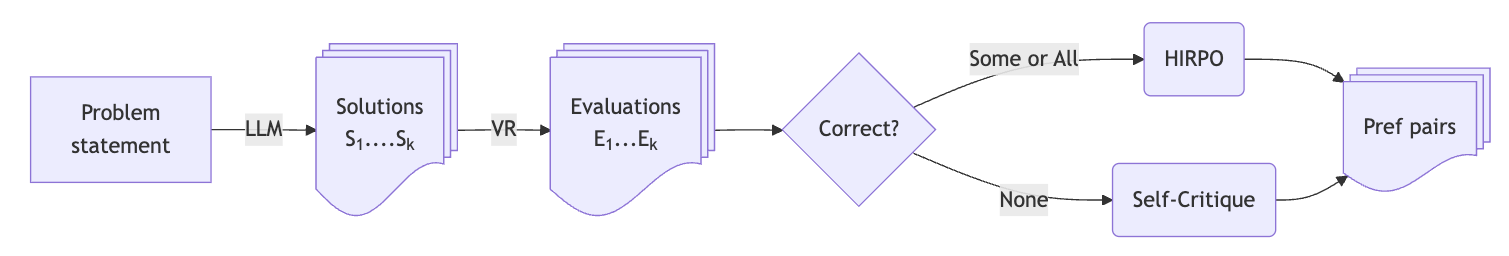

In a single HIR training epoch, one

- samples answers from the model online,

- evaluates the answers,

- uses correctly solved instructions and answers as training items,

- relabels instructions with incorrect answers (e.g., tasking the model to make mistakes), and

- uses the relabeled instructions and answers as training items, finally

- trains the model with the collected training items using a supervised fine-tuning (SFT) objective.

Let's illustrate hindsight instruction relabeling with a simple example:

| Instruction | Answer | VR | HIR Training Item (SFT) |

|---|---|---|---|

| Q: 8*7=? | A: 56 | ✅ | Q: 8*7=? A: 56 |

| Q: 8*7=? | A: 57 | ❌ | Q: 8*7=? (Make a mistake!) A: 57 |

Zhang et al (2023) demonstrate that adding relabeled instructions to the training data can significantly improve the model's performance as compared to only training on correctly solved instructions. That is because HIR "enables learning from failure cases" (which might help us to address an apparent shortcoming of GRPO (Xiong et al. 2025)).

The Argunauts projects transfers the HIR paradigm, which was originally designed for SFT, to online DPO training (→HIRPO). In HIRPO, we're also exploiting the fact that there are different kinds of mistakes when constructing preference pairs. Continuing the above example:

| Instruction | Answers | HIRPO Prompt | HIRPO Chosen | HIRPO Rejected |

|---|---|---|---|---|

| Q: 8*7=? | "A: 56", "A: 65", "A: Yes" | Q: 8*7=? | A: 56 | A: 65 |

| Q: 8*7=? | "A: 56", "A: 65", "A: Yes" | Q: 8*7=? (Mix up digits!) | A: 65 | A: 56 |

| Q: 8*7=? | "A: 56", "A: 65", "A: Yes" | Q: 8*7=? (Make NaN mistake!) | A: Yes | A: 56 |

Another benefit from bringing HIR to DPO is now becoming evident: By adding the preference pair in line 2 to the training data, we show the model why exactly the chosen answer, in line 1, is preferred to the rejected one. The hunch is that this might prevent over-generalization from partial rewards.

But that's not all.

With HIRPO, we can even construct preference pairs if the model generates no single correct answer at all:

| Instruction | Answers | HIRPO Prompt | HIRPO Chosen | HIRPO Rejected |

|---|---|---|---|---|

| Q: 8*7=? | "A: 57", "A: 65", "A: Yes" | Q: 8*7=? (Avoid NaN mistake!) | A: 65 | A: Yes |

Plus, we can also reward virtuous behavior beyond the answer being correct or incorrect:

| Instruction | Answers | HIRPO Prompt | HIRPO Chosen | HIRPO Rejected |

|---|---|---|---|---|

| Q: 8*7=? | "A: 56", "A: 56 ;-)", "A: 56, of course" | Q: 8*7=? (No gags!) | A: 56 | A: 56 ;-) |

| Q: 8*7=? | "A: 56", "A: 56 ;-)", "A: 56, of course" | Q: 8*7=? (Add gag!) | A: 56 :-) | A: 56 |

While running the HIRPO experiments, Sun et al. (2025) have published the exciting and much more general "Multi-Level Aware Preference Learning: Enhancing RLHF for Complex Multi-Instruction Tasks," which encompasses HIRPO ideas. I'm planning to integrate MAPL-DPO into the Argunauts project in the future. These are further HIRPO-related approaches I'm currently seeing:

| Approach | Hindsight Instruction Relabeling | Natural Language Feedback | Verifiable Rewards | DPO |

|---|---|---|---|---|

| HIR | ✅ | ✅ | ✅ | 🅾️ |

| IPR | ✅ | ✅ | ✅ | 🅾️ |

| CDF-DPO | 🅾️ | ✅ | ✅ | ✅ |

| CUT | 🅾️ | ✅ | ✅ | 🅾️ |

| MAPL-DPO | ✅ | 🅾️ | ✅ | ✅ |

| HIRPO | ✅ | ✅ | ✅ | ✅ |

How does HIRPO apply to Argunauts?

For the 14 argument analysis tasks, I've defined round about 40 verifiable rewards (a selection of which are listed above), all of which generate binary reward signals (correct/incorrect) and highly specific error messages, which can in turn be used for constructing training data with HIRPO.

On top of that, I've defined another 40 additional virtue scores that assess the quality of the model's answers beyond strict correctness, such as whether the labels in an argument map are succinct, whether the premises of an argument cohere with a source text, or whether an argument map is dense (has many support and attack relations).

Online DPO with Self-Correction

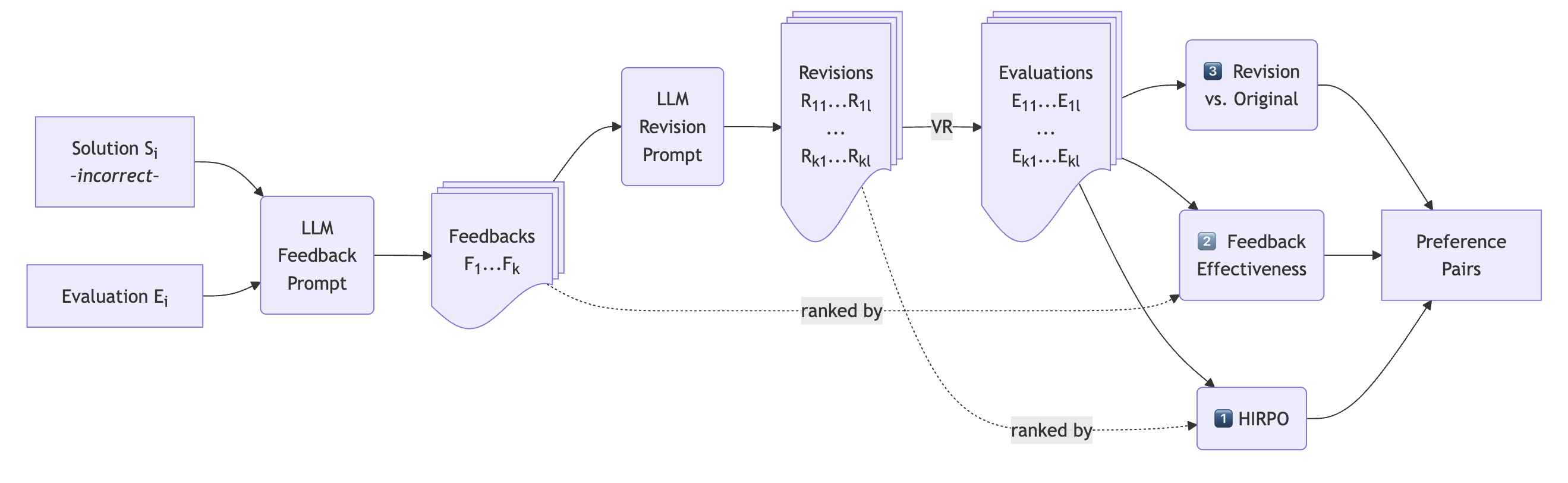

Following Kamoi et al. (2024), who show that LLM "self-correction works well in tasks that can use reliable external feedback," we exploit the natural language feedback from our verifiable rewards not only to construct HIRPO preference pairs, but also to enable the model

- to generate constructive feedback and

- to revise its original answers.

The self-critique workflow is triggered when all primary candidate solutions are incorrect:

Within the self-critique workflow, the model, being shown the incorrect answer and the detailed error message, is prompted – as teacher – to provide constructive feedback for a student. For each feedback accordingly generated, the model is then asked to revise its original answer. All the revised answers are, once more, evaluated:

We can now construct further preference pairs as follows:

1️⃣ We do plain HIRPO (as described before) vis-à-vis the revised (instead of the primary) solutions.

2️⃣ We compare different feedback texts in terms of effectiveness, i.e. how well they help the model improve its answers.

3️⃣ We compare revised answers against the original solutions.

Training

Problem Collection

We're using carefully curated argumentative texts from various domains and of different lengths, published in subsets of the collection DebateLabKIT/arguments-and-debates, as exercises.

Having a reader of insightful and diverse argumentative texts is extremely valuable for teaching students critical thinking and argument analysis skills. Expanding and improving the selection of argumentative texts is an ongoing effort, and I welcome contributions from the community.

Base Model

As base model we use the Argunaut model Llama-3.1-Argunaut-1-8B-SPIN from Phase II of the Argunauts project, which is a fine-tuned version of meta-llama/Llama-3.1-8B-Instruct.

Training Procedure

Each training epoch consists of the following steps:

- Sample

exercises_per_epochexercises from the collection of argumentative texts; and allocate exercises to different task types according to current task weights. - Generate answers and HIRPO pairs for each exercise and task.

- Mix in training items generated in previous epochs, prioritizing those contrasting valid and invalid answers.

- Train the model with generated HIRPO pairs using DPO.

We evaluate the currently trained model every evaluate_epochs epochs, and re-calculate the task weights based on the model's performance on the tasks (see Dynamic Curriculum).

total_epochs: 128

evaluate_epochs: 4

Sampling parameters

exercises_per_epoch: 512

eval_gen_kwargs:

temperature: 0.2

max_tokens: 4096

top_p: 0.95

min_p: 0.05

gen_kwargs:

temperature: 0.8

max_tokens: 4096

min_p: 0.05

feedback_gen_kwargs:

temperature: 0.8

max_tokens: 1024

min_p: 0.05

Training parameters

loss_type: sigmoid

num_train_epochs: 2

per_device_train_batch_size: 2

gradient_accumulation_steps: 8

learning_rate: 5.0e-7

lr_scheduler_type: linear

warmup_ratio: 0.5

Frameworks

Training Metrics

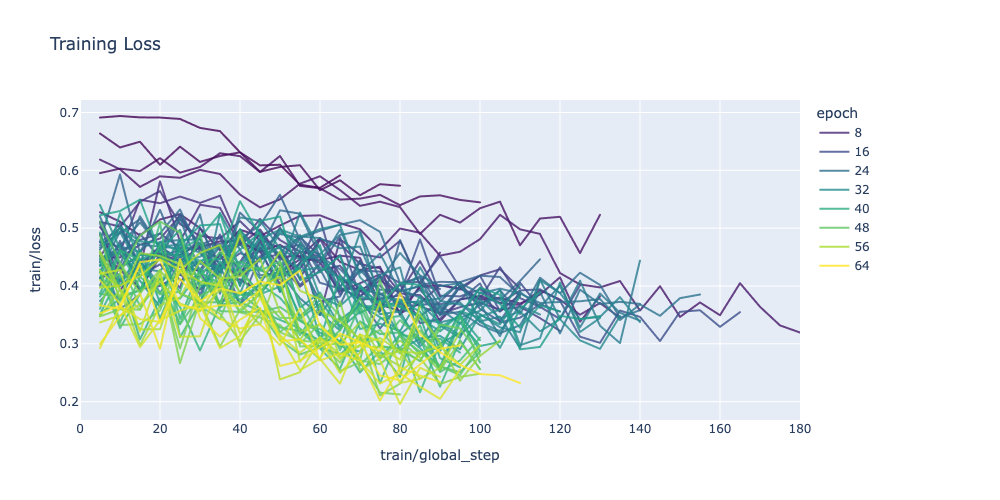

Training loss is going down both in each epoch and as we progress from one epoch to another:

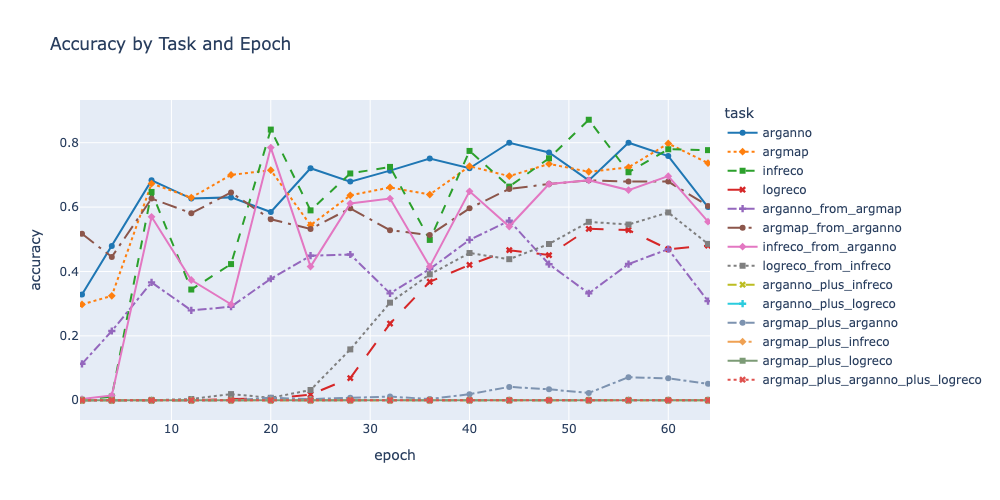

The performance in the diverse argument analysis tasks, assessed against exercises from the eval split, improves significantly during HIRPO training:

Noteworthy:

- The model acquires skills it does initially not have whatsoever, such as informal argument reconstruction (

infreco, around epoch 4), logical argument analysis (logreco, around epoch 20), and, with less success, coherent argument mapping and annotation (argmap_plus_arganno, around epoch 40). - Having achieved high levels of performance, metrics keep oscillating characteristically, reflecting a drop in performance as training focus shifts dynamically to other tasks, followed by a rebound once training returns to the task with degraded performance.

- Llama-3.1-Argunaut-1-8B-HIRPO fails to acquire more complex tasks, like coherent annotation and logical analysis (

arganno_plus_logreco). I'll try to fix this in future iterations, starting with more precise and detailed instructions / prompts.

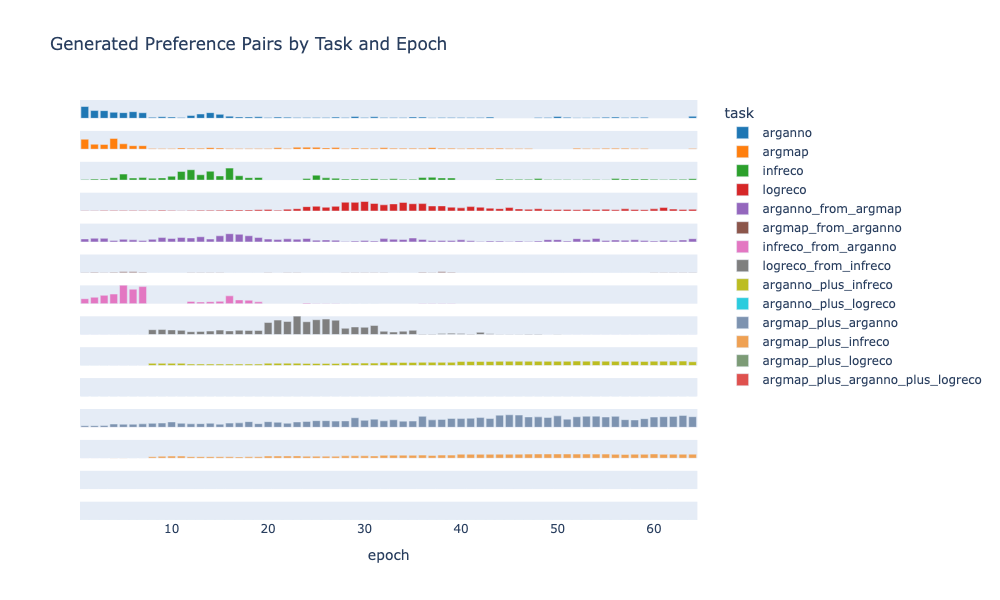

The training currculum is dynamically adjusted as the model acquires more and more skills, as shown by the number of training items generated in each epoch:

This also demonstrates that a spiral curriculum, where training returns to previously mastered tasks, is an emerging feature of the training process (compare the oscillating number of infreco or logreco training items).

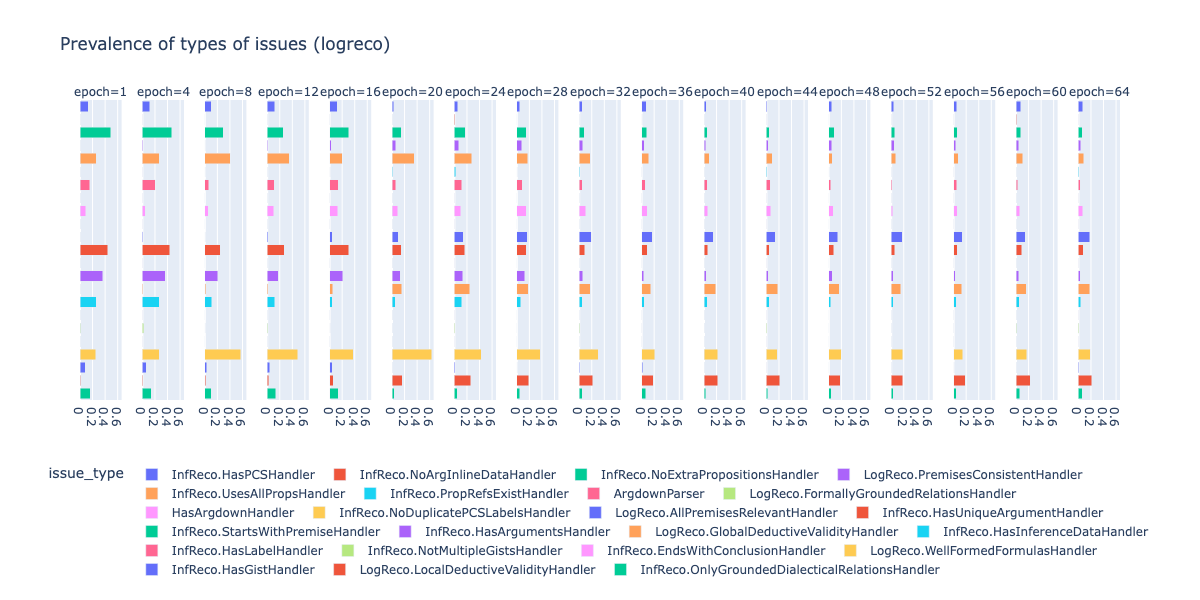

We may break down the performance in terms of error types being made. Thus, models may fail to generate a valid logical reconstruction of an argumentative text for very different reasons (e.g., misformatted febced code-block, missing conclusion, missing inference data, ill-formed logical formulas, etc.). The following diagram shows the prevalence of different mistakes (issues) made by the model in the logreco task:

We see that the model is drastically improving and starting to avoid specific mistakes well before it achieves even a minimal level of performance in the task (around epoch 20). This is because the model successfully transfers insights from other tasks it has been practicing, or because it learns purely from negative feedback (failure type preference pairs).

Evaluation

Chat experience

Let me share this chat with ggbetz/Llama-3.1-Argunaut-1-8B-HIRPO-dev4-Q4_K_M-GGUF.

👤 You

Hi, are you familiar with Argdown?

🤖 Assistant

Yes, I'm familiar with Argdown, a markup language for logical and critical thinking, particularly suited for representing and analyzing arguments. It allows for the clear and structured representation of arguments, including claims and arguments, as well as inference trees. If you have an argument you'd like me to analyze or need assistance with a critical thinking problem, feel free to provide the details!

👤 You

Can you please Argdown argument map that visualizes the argumentative macro structure of the following text?

:::

School uniforms deter crime and increase student safety.

In Long Beach, California, after two years of a district-wide K-8 mandatory uniform policy, reports of assault and battery in the district’s schools decreased by 34%, assault with a deadly weapon dropped by 50%, fighting incidents went down by 51%, sex offenses were cut by 74%, robbery dropped by 65%, possession of weapons (or weapon “look-alikes”) decreased by 52%, possession of drugs went down by 69%, and vandalism was lowered by 18%. [64]

One year after Sparks Middle School in Nevada instituted a uniform policy, school police data showed a 63% drop in police log reports, and decreases were also noted in gang activity, student fights, graffiti, property damage, and battery. A peer-reviewed study found that schools with uniform policies had 12% fewer firearm-related incidents and 15% fewer drug-related incidents than schools without uniforms. [25][69]

School uniforms also prevent students from concealing weapons under baggy clothing, make it easier to keep track of students on field trips, and make intruders on campus more visible. Frank Quatrone, superintendent in the Lodi school district of New Jersey, states, “When you have students dressed alike, you make them safer. If someone were to come into a building, the intruder could easily be recognized.” [6][38]

Further, school uniforms create a level playing field among students, reducing peer pressure and bullying. When all students are dressed alike, competition between students over clothing choices and the teasing of those who are dressed in less expensive or less fashionable outfits can be eliminated. Research by the Schoolwear Association found that 83% of teachers thought “a good school uniform…could prevent bullying based on appearance or economic background.” Arminta Jacobson, founder and director of the Center for Parent Education at the University of North Texas, states that uniforms put “all kids on the same playing field in terms of their appearance. I think it probably gives them a sense of belonging and a feeling of being socially accepted.” [5][91]

:::

🤖 Assistant

The provided text does not contain an argument with clearly defined premises and a conclusion. It presents some claims and supports them with evidence, but it does not explicitly state an argument with a clear structure of premises and a conclusion. Therefore, there is no argument to map.

If you provide the full argument, including the premises and the conclusion, I can assist you in creating an Argdown argument map. Please share the complete argument.

👤 You

That is correct. But I'm asking you to map these support and attack relations using Argdown Syntax in an argdown snippet.

🤖 Assistant

Given the information provided, it appears there's an implicit argument structure. However, without explicit premises and a conclusion, it's challenging to create a clear Argdown map. Nonetheless, let's represent the support and attack relations as described:

[Conclusion~1]: School uniforms deter crime and increase student safety.

<+ <Subargument 1>:

<+ [Conclusion~2]: Implementing school uniforms reduces bullying based on appearance or economic background.

<+ <Subargument 2>:

<+ [Premise]: 83% of teachers think that a good school uniform can prevent bullying based on appearance or economic background.

<+ [Premise]: Reducing bullying based on appearance or economic background contributes to a sense of belonging and social acceptance among students.

<+ [Premise]: A sense of belonging and social acceptance among students is a desirable outcome.

👤 You

Please summarize, in your own words, the main argument presented in the text. No more than 2-3 sentemces, please.

🤖 Assistant

The argument's key point is: School uniforms deter crime and increase student safety.

👤 You

Let's focus on this argument. Just for the protocol, what's the conclusion?

🤖 Assistant

The text doesn't explicitly state a conclusion. It provides supporting evidence and quotes from various sources. If I had to infer a conclusion based on the text, it would be:

School uniforms deter crime and increase student safety.

👤 You

Now, can you please reconstruct this argument as premise conclusion structure (standard form), using argdown syntax? Please give the argument a succinct title before starting with the premise conclusion structure.

🤖 Assistant

The argument can be reconstructed as follows:

- The argument does not explicitly state that the conclusion follows from the premises, so I will not add an inference figure.

(1) 83% of teachers think that a good school uniform can prevent bullying based on appearance or economic background.

(2) Reducing bullying based on appearance or economic background contributes to a sense of belonging and social acceptance among students.

(3) A sense of belonging and social acceptance among students is a desirable outcome.

--

School uniforms deter crime and increase student safety.

👤 You

Hm, please explain: How do the three premises collectively justify the conclusion?

🤖 Assistant

The premises collectively justify the conclusion by establishing a connection between school uniforms, bullying, and student well-being. Specifically, the first premise indicates that a significant majority of teachers support the idea that school uniforms can reduce bullying, thereby creating a safer and more harmonious school environment. The second premise suggests that reducing bullying leads to a positive social dynamic among students, where they feel a sense of belonging and acceptance. The third premise reinforces the value of this social dynamic by affirming that it is a desirable outcome. Putting these premises together, one can infer that school uniforms, which can reduce bullying, contribute to creating a more positive and safer school environment, thereby supporting the conclusion that school uniforms deter crime and increase student safety.

👤 You

Can you add a connecting premise that makes the link between a positive environment on the one side and safety / crime deterrence on the other explicit?

🤖 Assistant

Let me adjust the argdown code accordingly.

(1) 83% of teachers think that a good school uniform can prevent bullying based on appearance or economic background.

(2) Reducing bullying based on appearance or economic background contributes to a sense of belonging and social acceptance among students.

(3) A sense of belonging and social acceptance among students is a desirable outcome.

(4) A positive school environment contributes to a safer school environment.

--------------------------

(5) School uniforms deter crime and increase student safety.

My upshot is that improved argument analysis performance as demonstrated by evals does not translate directly into better performance in chats. ... Probably also shows that, at some point, Argunaut models will have to go through a final, classical instruction finetuning phase.

Benchmarks

🚧 coming soon, contributions welcome

Next steps

- So much to test empirically and evaluate thoroughly... So if you're graduate student or junior researcher looking for great projects with low hanging fruits to work on, please get in touch with me!