Migrated from GitHub

This view is limited to 50 files because it contains too many changes.

- data/LICENSE.md +43 -0

- data/docs/resource_listing.md +101 -0

- data/images/example_workflow/1.PNG +3 -0

- data/images/example_workflow/10.png +3 -0

- data/images/example_workflow/11.png +3 -0

- data/images/example_workflow/12.png +3 -0

- data/images/example_workflow/13.png +3 -0

- data/images/example_workflow/14.PNG +3 -0

- data/images/example_workflow/2.PNG +3 -0

- data/images/example_workflow/3.PNG +3 -0

- data/images/example_workflow/4.PNG +3 -0

- data/images/example_workflow/5.PNG +3 -0

- data/images/example_workflow/6.PNG +3 -0

- data/images/example_workflow/7.PNG +3 -0

- data/images/example_workflow/8.PNG +3 -0

- data/images/example_workflow/9.png +3 -0

- data/images/github_banner.png +3 -0

- data/images/protify_logo.png +3 -0

- data/images/synthyra_logo.png +3 -0

- data/probe_package_colab.ipynb +497 -0

- data/pyproject.toml +41 -0

- data/requirements.txt +20 -0

- data/setup_bioenv.sh +45 -0

- data/src/protify/base_models/__init__.py +14 -0

- data/src/protify/base_models/amplify.py +3 -0

- data/src/protify/base_models/ankh.py +78 -0

- data/src/protify/base_models/base_tokenizer.py +36 -0

- data/src/protify/base_models/dplm.py +388 -0

- data/src/protify/base_models/esm2.py +84 -0

- data/src/protify/base_models/esm3.py +3 -0

- data/src/protify/base_models/esmc.py +84 -0

- data/src/protify/base_models/get_base_models.py +215 -0

- data/src/protify/base_models/glm.py +90 -0

- data/src/protify/base_models/protbert.py +79 -0

- data/src/protify/base_models/proteinvec.py +3 -0

- data/src/protify/base_models/prott5.py +83 -0

- data/src/protify/base_models/random.py +62 -0

- data/src/protify/base_models/t5.py +150 -0

- data/src/protify/base_models/utils.py +19 -0

- data/src/protify/data/__init__.py +15 -0

- data/src/protify/data/data_collators.py +295 -0

- data/src/protify/data/data_mixin.py +472 -0

- data/src/protify/data/dataset_classes.py +319 -0

- data/src/protify/data/dataset_utils.py +101 -0

- data/src/protify/data/supported_datasets.py +99 -0

- data/src/protify/data/utils.py +31 -0

- data/src/protify/embedder.py +426 -0

- data/src/protify/github_banner.png +3 -0

- data/src/protify/gui.py +1046 -0

- data/src/protify/logger.py +287 -0

data/LICENSE.md

ADDED

@@ -0,0 +1,43 @@

Protify License (BSD 3‑Clause with Additional Restrictions)

Redistribution and use in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

1. Redistributions of source code must retain the above copyright notice, this list of conditions, and the following disclaimers in full.

2. Redistributions in binary form must reproduce the above copyright notice, this list of conditions, and the following disclaimers in the documentation and/or other materials provided with the distribution.

3. Neither the name of the copyright holder nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission.

4. **Commercial Modification & Sale Restriction** – The Software, in whole or in part, may **not** be modified *or* sold for commercial purposes. Commercial entities and individuals may, however, utilize the unmodified Software for internal or external commercial activities, subject to compliance with all other terms of this licence.

5. **Attribution for Generated Molecules** – Any molecule or derivative generated—whether for commercial or non‑commercial use—using the outputs of the Protify system must include clear and conspicuous credit to **“Protify”** (e.g., “Molecule generated with the Protify system”). Such credit must appear in any publication, disclosure, promotional material, or commercial documentation in which the molecule is referenced, sold, transferred, or otherwise made available.

6. **No Removal of Notices** – You must not remove, obscure, or alter any proprietary notices (including attribution or copyright notices) that appear in or on the Software or that accompany the outputs of the Protify system.

---

### DISCLAIMER & USER AGREEMENT

Deep‑learning models, including those contained in the Protify system, generate outputs via advanced probabilistic algorithms that may be inaccurate, incomplete, or otherwise unsuitable for any given purpose. **By downloading, installing, or using the Software (including running any of its models), you acknowledge and agree that:**

* **Assumption of Risk** – You assume full responsibility for verifying the accuracy, fitness, and safety of all outputs produced by the Software—including, without limitation, any molecular structures, sequences, annotations, or recommendations.

* **No Professional Advice** – Outputs are provided for informational purposes only and do not constitute professional, scientific, medical, legal, or other advice. You must perform your own independent checks before relying on any output.
* **Indemnity** – To the maximum extent permitted by law, you agree to indemnify, defend, and hold harmless the copyright holder and contributors from and against any and all claims, damages, losses, liabilities, costs, or expenses (including reasonable attorneys’ fees) arising out of or related to your use of the Software or its outputs.
* **Compliance with Law** – You are solely responsible for ensuring that your use of the Software and its outputs complies with all applicable laws, regulations, and industry standards, including those relating to export control, intellectual‑property rights, and biosafety/biosecurity.

---

### WARRANTY DISCLAIMER

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS **“AS IS”** AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. WITHOUT LIMITING THE GENERALITY OF THE FOREGOING, NO WARRANTY IS MADE THAT THE SOFTWARE OR ITS OUTPUTS WILL BE ACCURATE, COMPLETE, NON‑INFRINGING, OR FREE FROM DEFECTS. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, PROFITS, OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE OR ITS OUTPUTS, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

---

### TERMINATION

Failure to comply with any of the above conditions automatically terminates your rights under this licence. Upon termination, you must cease all use, distribution, and reproduction of the Software and permanently delete or destroy all copies in your possession or control.

---

Except as expressly modified above, all terms of the standard BSD 3‑Clause License remain in full force and effect.

data/docs/resource_listing.md

ADDED

|

@@ -0,0 +1,101 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

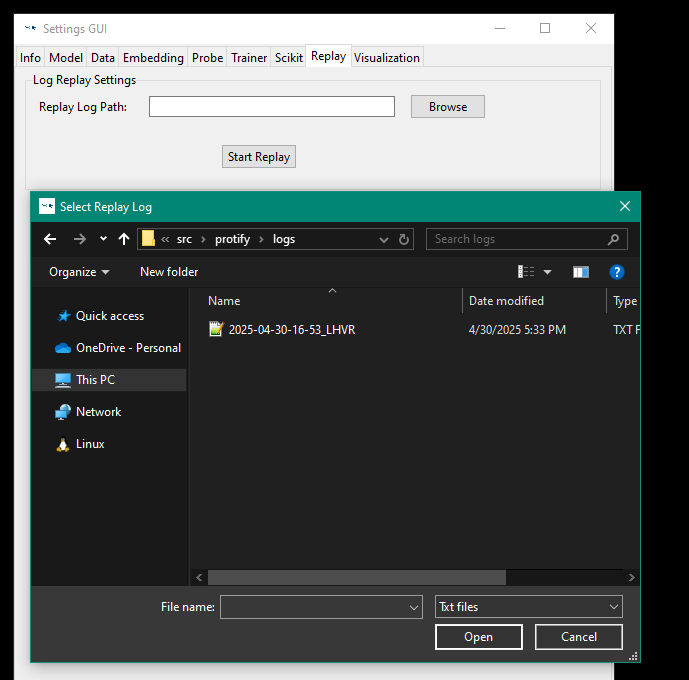

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Listing Supported Models and Datasets

|

| 2 |

+

|

| 3 |

+

Protify provides several ways to view and explore the supported models and datasets. This documentation explains how to use these features.

|

| 4 |

+

|

| 5 |

+

## Using the README Toggle Sections

|

| 6 |

+

|

| 7 |

+

The main README.md file contains expandable toggle sections for both models and datasets:

|

| 8 |

+

|

| 9 |

+

- **Currently Supported Models**: Click the toggle to expand and see a complete table of models with their descriptions, sizes, and types.

|

| 10 |

+

- **Currently Supported Datasets**: Click the toggle to expand and see a complete table of datasets with their descriptions, types, and tasks.

|

| 11 |

+

|

| 12 |

+

## Command-Line Listing

|

| 13 |

+

|

| 14 |

+

Protify provides command-line utilities for listing models and datasets with detailed information:

|

| 15 |

+

|

| 16 |

+

### Listing Models

|

| 17 |

+

|

| 18 |

+

To list all supported models with their descriptions:

|

| 19 |

+

|

| 20 |

+

```bash

|

| 21 |

+

# List all supported models

|

| 22 |

+

python -m src.protify.base_models.get_base_models --list

|

| 23 |

+

|

| 24 |

+

# To download standard models

|

| 25 |

+

python -m src.protify.base_models.get_base_models --download

|

| 26 |

+

```

|

| 27 |

+

|

| 28 |

+

### Listing Datasets

|

| 29 |

+

|

| 30 |

+

To list all supported datasets with their descriptions:

|

| 31 |

+

|

| 32 |

+

```bash

|

| 33 |

+

# List all datasets

|

| 34 |

+

python -m src.protify.data.dataset_utils --list

|

| 35 |

+

|

| 36 |

+

# Get information about a specific dataset

|

| 37 |

+

python -m src.protify.data.dataset_utils --info EC

|

| 38 |

+

```

|

| 39 |

+

|

| 40 |

+

### Combined Listing

|

| 41 |

+

|

| 42 |

+

For a combined view of both models and datasets:

|

| 43 |

+

|

| 44 |

+

```bash

|

| 45 |

+

# List both models and datasets

|

| 46 |

+

python -m src.protify.resource_info --all

|

| 47 |

+

|

| 48 |

+

# List only standard models and datasets

|

| 49 |

+

python -m src.protify.resource_info --all --standard-only

|

| 50 |

+

|

| 51 |

+

# List only models

|

| 52 |

+

python -m src.protify.resource_info --models

|

| 53 |

+

|

| 54 |

+

# List only datasets

|

| 55 |

+

python -m src.protify.resource_info --datasets

|

| 56 |

+

```
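
The flag combinations for the combined listing can be pictured as a small `argparse` front end. The sketch below is not Protify's `resource_info` module: the catalogs, the `standard` field, and the `build_parser` and `select` helpers are hypothetical names introduced only to illustrate how `--all`, `--models`, `--datasets`, and `--standard-only` might interact.

```python
import argparse

# Hypothetical catalogs: the names echo this document, the fields are assumed.
MODELS = {
    "ESM2-150": {"standard": True},
    "Random": {"standard": False},
}
DATASETS = {
    "EC": {"standard": True},
    "human-ppi": {"standard": False},
}


def build_parser() -> argparse.ArgumentParser:
    """Declare the four listing flags used in the commands above."""
    parser = argparse.ArgumentParser(description="List models and datasets (sketch)")
    parser.add_argument("--all", action="store_true", help="list models and datasets")
    parser.add_argument("--models", action="store_true", help="list only models")
    parser.add_argument("--datasets", action="store_true", help="list only datasets")
    parser.add_argument("--standard-only", action="store_true",
                        help="keep only entries flagged as standard")
    return parser


def select(args: argparse.Namespace) -> dict:
    """Return the catalog sections requested by the parsed flags."""
    def keep(catalog):
        return sorted(name for name, info in catalog.items()
                      if info["standard"] or not args.standard_only)

    sections = {}
    if args.all or args.models:
        sections["models"] = keep(MODELS)
    if args.all or args.datasets:
        sections["datasets"] = keep(DATASETS)
    return sections
```

Calling `select(build_parser().parse_args(["--all", "--standard-only"]))` on these stand-in catalogs keeps only the entries whose assumed `standard` flag is set.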

## Programmatic Access

You can also access model and dataset information programmatically:

```python
# For models
from src.protify.resource_info import model_descriptions
from src.protify.base_models.get_base_models import currently_supported_models, standard_models

# Get information about a specific model
model_info = model_descriptions.get('ESM2-150', {})
print(f"Model: ESM2-150")
print(f"Description: {model_info.get('description', 'N/A')}")
print(f"Size: {model_info.get('size', 'N/A')}")
print(f"Type: {model_info.get('type', 'N/A')}")

# For datasets
from src.protify.resource_info import dataset_descriptions
from src.protify.data.supported_datasets import supported_datasets

# Get information about a specific dataset
dataset_info = dataset_descriptions.get('EC', {})
print(f"Dataset: EC")
print(f"Description: {dataset_info.get('description', 'N/A')}")
print(f"Type: {dataset_info.get('type', 'N/A')}")
print(f"Task: {dataset_info.get('task', 'N/A')}")
```
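
The same lookup pattern extends to every entry at once. The sketch below assumes, based only on the `.get` calls above, that the description objects are dictionaries mapping a name to a dictionary of fields. `example_descriptions` is a stand-in with placeholder values so the block runs without Protify installed, and `format_rows` is a hypothetical helper.

```python
from typing import List, Mapping, Sequence

# Stand-in for `model_descriptions`; the values are placeholders, not real metadata.
example_descriptions = {
    "ESM2-150": {"description": "placeholder text", "size": "placeholder", "type": "placeholder"},
    "Random": {"description": "placeholder text"},
}


def format_rows(descriptions: Mapping[str, Mapping[str, str]],
                fields: Sequence[str] = ("description", "size", "type")) -> List[str]:
    """Render one 'name | field | ...' line per entry, with 'N/A' for missing keys."""
    rows = []
    for name in sorted(descriptions):
        info = descriptions[name]
        cells = [name] + [str(info.get(field, "N/A")) for field in fields]
        rows.append(" | ".join(cells))
    return rows


for row in format_rows(example_descriptions):
    print(row)
```

For dataset entries, passing `fields=("description", "type", "task")` would match the keys read in the dataset example, under the same assumption about the dictionary layout.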

## Model Group Types

Models in Protify are generally grouped into the following categories:

1. **Protein Language Models**: Pre-trained models that have learned protein properties from large-scale sequence data (e.g., ESM2, ProtBert)
2. **Baseline Controls**: Models with random weights for comparison (e.g., Random, Random-Transformer)

## Dataset Group Types

Datasets are categorized by their task types:

1. **Multi-label Classification**: Datasets where each protein can have multiple labels (e.g., EC, GO-CC)
2. **Classification**: Binary or multi-class classification tasks (e.g., DeepLoc-2, DeepLoc-10)
3. **Regression**: Prediction of continuous values (e.g., enzyme-kcat, optimal-temperature)
4. **Protein-Protein Interaction**: Tasks focused on protein interactions (e.g., human-ppi, gold-ppi)
5. **Token-wise Classification/Regression**: Residue-level prediction tasks (e.g., SecondaryStructure-3)
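
The task categories can be treated as a simple grouping key. The sketch below uses only the example dataset names from the list above. The `EXAMPLE_TASKS` mapping and the `group_by_task` helper are illustrative assumptions, not Protify's own registry or API.

```python
from collections import defaultdict

# Dataset name -> task type, copied from the examples in the list (illustrative only).
EXAMPLE_TASKS = {
    "EC": "Multi-label Classification",
    "GO-CC": "Multi-label Classification",
    "DeepLoc-2": "Classification",
    "DeepLoc-10": "Classification",
    "enzyme-kcat": "Regression",
    "optimal-temperature": "Regression",
    "human-ppi": "Protein-Protein Interaction",
    "gold-ppi": "Protein-Protein Interaction",
    "SecondaryStructure-3": "Token-wise Classification/Regression",
}


def group_by_task(tasks):
    """Invert a name -> task mapping into task -> sorted list of names."""
    groups = defaultdict(list)
    for name, task in tasks.items():
        groups[task].append(name)
    return {task: sorted(names) for task, names in groups.items()}


for task, names in group_by_task(EXAMPLE_TASKS).items():
    print(f"{task}: {', '.join(names)}")
```

A grouping like this is one way to pick a matching probe or metric per task type before running a benchmark.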

The following image files were ADDED and are stored with Git LFS:

- data/images/example_workflow/1.PNG
- data/images/example_workflow/10.png
- data/images/example_workflow/11.png
- data/images/example_workflow/12.png
- data/images/example_workflow/13.png
- data/images/example_workflow/14.PNG
- data/images/example_workflow/2.PNG
- data/images/example_workflow/3.PNG
- data/images/example_workflow/4.PNG
- data/images/example_workflow/5.PNG
- data/images/example_workflow/6.PNG
- data/images/example_workflow/7.PNG
- data/images/example_workflow/8.PNG
- data/images/example_workflow/9.png
- data/images/github_banner.png
- data/images/protify_logo.png
- data/images/synthyra_logo.png

data/probe_package_colab.ipynb

ADDED

|

@@ -0,0 +1,497 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "markdown",

|

| 5 |

+

"metadata": {},

|

| 6 |

+

"source": [

|

| 7 |

+

"# OUT OF DATE - NEEDS TO BE UPDATED"

|

| 8 |

+

]

|

| 9 |

+

},

|

| 10 |

+

{

|

| 11 |

+

"cell_type": "code",

|

| 12 |

+

"execution_count": 1,

|

| 13 |

+

"metadata": {

|

| 14 |

+

"id": "mQ__d_petycW"

|

| 15 |

+

},

|

| 16 |

+

"outputs": [],

|

| 17 |

+

"source": [

|

| 18 |

+

"#@title **1. Setup**\n",

|

| 19 |

+

"\n",

|

| 20 |

+

"#@markdown ### Identification\n",

|

| 21 |

+

"huggingface_username = \"Synthyra\" #@param {type:\"string\"}\n",

|

| 22 |

+

"#@markdown ---\n",

|

| 23 |

+

"huggingface_token = \"\" #@param {type:\"string\"}\n",

|

| 24 |

+

"#@markdown ---\n",

|

| 25 |

+

"wandb_api_key = \"\" #@param {type:\"string\"}\n",

|

| 26 |

+

"#@markdown ---\n",

|

| 27 |

+

"synthyra_api_key = \"\" #@param {type:\"string\"}\n",

|

| 28 |

+

"#@markdown ---\n",

|

| 29 |

+

"github_token = \"\" #@param {type:\"string\"}\n",

|

| 30 |

+

"#@markdown ---\n",

|

| 31 |

+

"\n",

|

| 32 |

+

"\n",

|

| 33 |

+

"github_clone_path = f\"https://{github_token}@github.com/Synthyra/ProbePackageHolder.git\"\n",

|

| 34 |

+

"# !git clone {github_clone_path}\n",

|

| 35 |

+

"# %cd ProbePackageHolder\n",

|

| 36 |

+

"# !pip install -r requirements.txt --quiet\n"

|

| 37 |

+

]

|

| 38 |

+

},

|

| 39 |

+

{

|

| 40 |

+

"cell_type": "code",

|

| 41 |

+

"execution_count": null,

|

| 42 |

+

"metadata": {},

|

| 43 |

+

"outputs": [],

|

| 44 |

+

"source": [

|

| 45 |

+

"#@title **2. Session/Directory Settings**\n",

|

| 46 |

+

"\n",

|

| 47 |

+

"import torch\n",

|

| 48 |

+

"import argparse\n",

|

| 49 |

+

"from types import SimpleNamespace\n",

|

| 50 |

+

"from base_models.get_base_models import BaseModelArguments, standard_benchmark\n",

|

| 51 |

+

"from data.hf_data import HFDataArguments\n",

|

| 52 |

+

"from data.supported_datasets import supported_datasets\n",

|

| 53 |

+

"from embedder import EmbeddingArguments\n",

|

| 54 |

+

"from probes.get_probe import ProbeArguments\n",

|

| 55 |

+

"from probes.trainers import TrainerArguments\n",

|

| 56 |

+

"from main import MainProcess\n",

|

| 57 |

+

"\n",

|

| 58 |

+

"\n",

|

| 59 |

+

"main = MainProcess(argparse.Namespace(), GUI=True)\n",

|

| 60 |

+

"\n",

|

| 61 |

+

"#@markdown **Paths**\n",

|

| 62 |

+

"\n",

|

| 63 |

+

"#@markdown These will be created automatically if they don't exist\n",

|

| 64 |

+

"\n",

|

| 65 |

+

"#@markdown **Log Directory**\n",

|

| 66 |

+

"log_dir = \"logs\" #@param {type:\"string\"}\n",

|

| 67 |

+

"#@markdown ---\n",

|

| 68 |

+

"\n",

|

| 69 |

+

"#@markdown **Results Directory**\n",

|

| 70 |

+

"results_dir = \"results\" #@param {type:\"string\"}\n",

|

| 71 |

+

"#@markdown ---\n",

|

| 72 |

+

"\n",

|

| 73 |

+

"#@markdown **Model Save Directory**\n",

|

| 74 |

+

"model_save_dir = \"weights\" #@param {type:\"string\"}\n",

|

| 75 |

+

"#@markdown ---\n",

|

| 76 |

+

"\n",

|

| 77 |

+

"#@markdown **Embedding Save Directory**\n",

|

| 78 |

+

"embedding_save_dir = \"embeddings\" #@param {type:\"string\"}\n",

|

| 79 |

+

"#@markdown ---\n",

|

| 80 |

+

"\n",

|

| 81 |

+

"#@markdown **Download Directory**\n",

|

| 82 |

+

"#@markdown - Where embeddings are downloaded on Hugging Face\n",

|

| 83 |

+

"download_dir = \"Synthyra/mean_pooled_embeddings\" #@param {type:\"string\"}\n",

|

| 84 |

+

"#@markdown ---\n",

|

| 85 |

+

"\n",

|

| 86 |

+

"\n",

|

| 87 |

+

"main.full_args.hf_token = huggingface_token\n",

|

| 88 |

+

"main.full_args.wandb_api_key = wandb_api_key\n",

|

| 89 |

+

"main.full_args.synthyra_api_key = synthyra_api_key\n",

|

| 90 |

+

"main.full_args.log_dir = log_dir\n",

|

| 91 |

+

"main.full_args.results_dir = results_dir\n",

|

| 92 |

+

"main.full_args.model_save_dir = model_save_dir\n",

|

| 93 |

+

"main.full_args.embedding_save_dir = embedding_save_dir\n",

|

| 94 |

+

"main.full_args.download_dir = download_dir\n",

|

| 95 |

+

"main.full_args.replay_path = None\n",

|

| 96 |

+

"main.logger_args = SimpleNamespace(**main.full_args.__dict__)\n",

|

| 97 |

+

"main.start_log_gui()\n",

|

| 98 |

+

"\n",

|

| 99 |

+

"#@markdown Press play to setup the session:"

|

| 100 |

+

]

|

| 101 |

+

},

|

| 102 |

+

{

|

| 103 |

+

"cell_type": "code",

|

| 104 |

+

"execution_count": null,

|

| 105 |

+

"metadata": {

|

| 106 |

+

"id": "FFgNDvDAt0xp"

|

| 107 |

+

},

|

| 108 |

+

"outputs": [],

|

| 109 |

+

"source": [

|

| 110 |

+

"#@title **2. Data Settings**\n",

|

| 111 |

+

"\n",

|

| 112 |

+

"#@markdown **Max Sequence Length**\n",

|

| 113 |

+

"max_length = 2048 #@param {type:\"integer\"}\n",

|

| 114 |

+

"#@markdown ---\n",

|

| 115 |

+

"\n",

|

| 116 |

+

"#@markdown **Trim Sequences**\n",

|

| 117 |

+

"#@markdown - If true, sequences are removed if they are longer than the maximum length\n",

|

| 118 |

+

"#@markdown - If false, sequences are truncated to the maximum length\n",

|

| 119 |

+

"trim = False #@param {type:\"boolean\"}\n",

|

| 120 |

+

"#@markdown ---\n",

|

| 121 |

+

"\n",

|

| 122 |

+

"#@markdown **Dataset Names**\n",

|

| 123 |

+

"#@markdown Valid options (comma-separated):\n",

|

| 124 |

+

"\n",

|

| 125 |

+

"#@markdown *Multi-label classification:*\n",

|

| 126 |

+

"\n",

|

| 127 |

+

"#@markdown - EC, GO-CC, GO-BP, GO-MF\n",

|

| 128 |

+

"\n",

|

| 129 |

+

"#@markdown *Single-label classification:*\n",

|

| 130 |

+

"\n",

|

| 131 |

+

"#@markdown - MB, DeepLoc-2, DeepLoc-10, solubility, localization, material-production, cloning-clf, number-of-folds\n",

|

| 132 |

+

"\n",

|

| 133 |

+

"#@markdown *Regression:*\n",

|

| 134 |

+

"\n",

|

| 135 |

+

"#@markdown - enzyme-kcat,temperature-stability, optimal-temperature, optimal-ph, fitness-prediction, stability-prediction, fluorescence-prediction\n",

|

| 136 |

+

"\n",

|

| 137 |

+

"#@markdown *PPI:*\n",

|

| 138 |

+

"\n",

|

| 139 |

+

"#@markdown - human-ppi, peptide-HLA-MHC-affinity\n",

|

| 140 |

+

"\n",

|

| 141 |

+

"#@markdown *Tokenwise:*\n",

|

| 142 |

+

"\n",

|

| 143 |

+

"#@markdown - SecondaryStructure-3, SecondaryStructure-8\n",

|

| 144 |

+

"dataset_names = \"EC, DeepLoc-2, DeepLoc-10, enzyme-kcat\" #@param {type:\"string\"}\n",

|

| 145 |

+

"#@markdown ---\n",

|

| 146 |

+

"\n",

|

| 147 |

+

"data_paths = [supported_datasets[name.strip()] for name in dataset_names.split(\",\") if name.strip()]\n",

|

| 148 |

+

"\n",

|

| 149 |

+

"main.full_args.data_paths = data_paths\n",

|

| 150 |

+

"main.full_args.max_length = max_length\n",

|

| 151 |

+

"main.full_args.trim = trim\n",

|

| 152 |

+

"main.data_args = HFDataArguments(**main.full_args.__dict__)\n",

|

| 153 |

+

"args_dict = {k: v for k, v in main.full_args.__dict__.items() if k != 'all_seqs' and 'token' not in k.lower() and 'api' not in k.lower()}\n",

|

| 154 |

+

"main.logger_args = SimpleNamespace(**args_dict)\n",

|

| 155 |

+

"main.get_datasets()\n",

|

| 156 |

+

"\n",

|

| 157 |

+

"#@markdown Press play to load datasets:"

|

| 158 |

+

]

|

| 159 |

+

},

|

| 160 |

+

{

|

| 161 |

+

"cell_type": "code",

|

| 162 |

+

"execution_count": 5,

|

| 163 |

+

"metadata": {

|

| 164 |

+

"id": "D1iMWkLzt8QM"

|

| 165 |

+

},

|

| 166 |

+

"outputs": [],

|

| 167 |

+

"source": [

|

| 168 |

+

"#@title **3. Model Selection**\n",

|

| 169 |

+

"\n",

|

| 170 |

+

"#@markdown Comma-separated model names.\n",

|

| 171 |

+

"#@markdown If empty, defaults to `standard_benchmark`.\n",

|

| 172 |

+

"#@markdown Valid options (comma-separated):\n",

|

| 173 |

+

"#@markdown - `ESM2-8, ESM2-35, ESM2-150, ESM2-650`\n",

|

| 174 |

+

"#@markdown - `ESMC-300, ESMC-600`\n",

|

| 175 |

+

"#@markdown - `Random, Random-Transformer`\n",

|

| 176 |

+

"model_names = \"ESMC-300\" #@param {type:\"string\"}\n",

|

| 177 |

+

"#@markdown ---\n",

|

| 178 |

+

"\n",

|

| 179 |

+

"selected_models = [name.strip() for name in model_names.split(\",\") if name.strip()]\n",

|

| 180 |

+

"\n",

|

| 181 |

+

"if not selected_models:\n",

|

| 182 |

+

" selected_models = standard_benchmark\n",

|

| 183 |

+

"\n",

|

| 184 |

+

"main.full_args.model_names = selected_models\n",

|

| 185 |

+

"main.model_args = BaseModelArguments(**main.full_args.__dict__)\n",

|

| 186 |

+

"args_dict = {k: v for k, v in main.full_args.__dict__.items() if k != 'all_seqs' and 'token' not in k.lower() and 'api' not in k.lower()}\n",

|

| 187 |

+

"main.logger_args = SimpleNamespace(**args_dict)\n",

|

| 188 |

+

"main._write_args()\n",

|

| 189 |

+

"\n",

|

| 190 |

+

"#@markdown *Press play to choose models:*\n"

|

| 191 |

+

]

|

| 192 |

+

},

|

| 193 |

+

{

|

| 194 |

+

"cell_type": "code",

|

| 195 |

+

"execution_count": null,

|

| 196 |

+

"metadata": {

|

| 197 |

+

"id": "qHCDeczNt20y"

|

| 198 |

+

},

|

| 199 |

+

"outputs": [],

|

| 200 |

+

"source": [

|

| 201 |

+

"#@title **4. Embedding Settings**\n",

|

| 202 |

+

"#@markdown **Batch size**\n",

|

| 203 |

+

"batch_size = 4 #@param {type:\"integer\"}\n",

|

| 204 |

+

"#@markdown ---\n",

|

| 205 |

+

"\n",

|

| 206 |

+

"#@markdown **Number of dataloader workers**\n",

|

| 207 |

+

"#@markdown - We recommend 0 for small sets of sequences, but 4-8 for larger sets\n",

|

| 208 |

+

"num_workers = 0 #@param {type:\"integer\"}\n",

|

| 209 |

+

"#@markdown ---\n",

|

| 210 |

+

"\n",

|

| 211 |

+

"#@markdown **Download embeddings from Hugging Face**\n",

|

| 212 |

+

"#@markdown - If there is a precomputed embedding type that's useful to you, it is probably faster to download it\n",

|

| 213 |

+

"#@markdown - HIGHLY recommended for CPU users\n",

|

| 214 |

+

"download_embeddings = False #@param {type:\"boolean\"}\n",

|

| 215 |

+

"#@markdown ---\n",

|

| 216 |

+

"\n",

|

| 217 |

+

"#@markdown **Full residue embeddings**\n",

|

| 218 |

+

"#@markdown - If true, embeddings are saved as a matrix of shape `(L, d)`\n",

|

| 219 |

+

"#@markdown - If false, embeddings are pooled to `(d,)`\n",

|

| 220 |

+

"matrix_embed = False #@param {type:\"boolean\"}\n",

|

| 221 |

+

"#@markdown ---\n",

|

| 222 |

+

"\n",

|

| 223 |

+

"#@markdown **Embedding Pooling Types**\n",

|

| 224 |

+

"#@markdown - If more than one is passed, embeddings are concatenated\n",

|

| 225 |

+

"#@markdown Valid options (comma-separated):\n",

|

| 226 |

+

"#@markdown - `mean, max, norm, median, std, var, cls, parti`\n",

|

| 227 |

+

"#@markdown - `parti` (pool parti) must be used on its own\n",

|

| 228 |

+

"embedding_pooling_types = \"mean, std\" #@param {type:\"string\"}\n",

|

| 229 |

+

"#@markdown ---\n",

|

| 230 |

+

"\n",

|

| 231 |

+

"#@markdown **Embedding Data Type**\n",

|

| 232 |

+

"#@markdown - Embeddings are cast to this data type for storage\n",

|

| 233 |

+

"embed_dtype = \"float32\" #@param [\"float32\",\"float16\",\"bfloat16\",\"float8_e4m3fn\",\"float8_e5m2\"]\n",

|

| 234 |

+

"#@markdown ---\n",

|

| 235 |

+

"\n",

|

| 236 |

+

"#@markdown **Save embeddings to SQLite**\n",

|

| 237 |

+

"#@markdown - If true, embeddings are saved to a SQLite database\n",

|

| 238 |

+

"#@markdown - They will be accessed on the fly by the trainer\n",

|

| 239 |

+

"#@markdown - This is HIGHLY recommended for matrix embeddings\n",

|

| 240 |

+

"#@markdown - If false, embeddings are saved to a .pth file but loaded all at once\n",

|

| 241 |

+

"sql = False #@param {type:\"boolean\"}\n",

|

| 242 |

+

"#@markdown ---\n",

|

| 243 |

+

"\n",

|

| 244 |

+

"main.full_args.all_seqs = main.all_seqs\n",

|

| 245 |

+

"main.full_args.batch_size = batch_size\n",

|

| 246 |

+

"main.full_args.num_workers = num_workers\n",

|

| 247 |

+

"main.full_args.download_embeddings = download_embeddings\n",

|

| 248 |

+

"main.full_args.matrix_embed = matrix_embed\n",

|

| 249 |

+

"main.full_args.embedding_pooling_types = [p.strip() for p in embedding_pooling_types.split(\",\") if p.strip()]\n",

|

| 250 |

+

"if embed_dtype == \"float32\": main.embed_dtype = torch.float32\n",

|

| 251 |

+

"elif embed_dtype == \"float16\": main.embed_dtype = torch.float16\n",

|

| 252 |

+

"elif embed_dtype == \"bfloat16\": main.embed_dtype = torch.bfloat16\n",

|

| 253 |

+

"elif embed_dtype == \"float8_e4m3fn\": main.embed_dtype = torch.float8_e4m3fn\n",

|

| 254 |

+

"elif embed_dtype == \"float8_e5m2\": main.embed_dtype = torch.float8_e5m2\n",

|

| 255 |

+

"else:\n",

|

| 256 |

+

" print(f\"Invalid embedding dtype: {embed_dtype}. Using float32.\")\n",

|

| 257 |

+

" main.embed_dtype = torch.float32\n",

|

| 258 |

+

"main.sql = sql\n",

|

| 259 |

+

"\n",

|

| 260 |

+

"\n",

|

| 261 |

+

"main.embedding_args = EmbeddingArguments(**main.full_args.__dict__)\n",

|

| 262 |

+

"args_dict = {k: v for k, v in main.full_args.__dict__.items() if k != 'all_seqs' and 'token' not in k.lower() and 'api' not in k.lower()}\n",

|

| 263 |

+

"main.logger_args = SimpleNamespace(**args_dict)\n",

|

| 264 |

+

"main.save_embeddings_to_disk()\n",

|

| 265 |

+

"\n",

|

| 266 |

+

"#@markdown *Press play to embed sequences:*\n"

|

| 267 |

+

]

|

| 268 |

+

},

|

| 269 |

+

{

|

| 270 |

+

"cell_type": "code",

|

| 271 |

+

"execution_count": 7,

|

| 272 |

+

"metadata": {

|

| 273 |

+

"id": "K7R-Htvit9Ti"

|

| 274 |

+

},

|

| 275 |

+

"outputs": [],

|

| 276 |

+

"source": [

|

| 277 |

+

"#@title **5. Probe Settings**\n",

|

| 278 |

+

"\n",

|

| 279 |

+

"#@markdown **Probe Type**\n",

|

| 280 |

+

"#@markdown - `linear`: an MLP for pooled embeddings\n",

|

| 281 |

+

"#@markdown - `transformer`: a transformer model for matrix embeddings\n",

|

| 282 |

+

"#@markdown - `retrievalnet`: custom combination of cross-attention and convolution for matrix embeddings\n",

|

| 283 |

+

"probe_type = \"linear\" #@param [\"linear\", \"transformer\", \"retrievalnet\"]\n",

|

| 284 |

+

"#@markdown ---\n",

|

| 285 |

+

"\n",

|

| 286 |

+

"#@markdown **Tokenwise**\n",

|

| 287 |

+

"#@markdown - If true, the objective is to predict a property of each token (matrix embeddings only)\n",

|

| 288 |

+

"#@markdown - If false, the objective is to predict a property of the entire sequence (pooled embeddings OR matrix embeddings)\n",

|

| 289 |

+

"tokenwise = False #@param {type:\"boolean\"}\n",

|

| 290 |

+

"#@markdown ---\n",

|

| 291 |

+

"\n",

|

| 292 |

+

"#@markdown **Pre-LayerNorm**\n",

|

| 293 |

+

"#@markdown - If true, a LayerNorm is applied as the first layer of the probe\n",

|

| 294 |

+

"#@markdown - Typically improves performance\n",

|

| 295 |

+

"pre_ln = True #@param {type:\"boolean\"}\n",

|

| 296 |

+

"#@markdown ---\n",

|

| 297 |

+

"\n",

|

| 298 |

+

"#@markdown **Number of layers**\n",

|

| 299 |

+

"#@markdown - Number of hidden layers in the probe\n",

|

| 300 |

+

"#@markdown - Linear probes have 1 input layer and 2 output layers, so `n_layers = 1` gives a 4-layer MLP\n",

|

| 301 |

+

"#@markdown - This refers to how many transformer blocks are used in the transformer probe\n",

|

| 302 |

+

"#@markdown - Same for retrievalnet probes\n",

|

| 303 |

+

"n_layers = 1 #@param {type:\"integer\"}\n",

|

| 304 |

+

"#@markdown ---\n",

|

| 305 |

+

"\n",

|

| 306 |

+

"#@markdown **Hidden dimension**\n",

|

| 307 |

+

"#@markdown - The hidden dimension of the model\n",

|

| 308 |

+

"#@markdown - 2048 - 8192 is recommended for linear probes, 384 - 1536 is recommended for transformer probes\n",

|

| 309 |

+

"hidden_dim = 8192 #@param {type:\"integer\"}\n",

|

| 310 |

+

"#@markdown ---\n",

|

| 311 |

+

"\n",

|

| 312 |

+

"#@markdown **Dropout**\n",

|

| 313 |

+

"#@markdown - Dropout rate for the probe\n",

|

| 314 |

+

"#@markdown - 0.2 is recommended for linear, 0.1 otherwise\n",

|

| 315 |

+

"dropout = 0.2 #@param {type:\"number\"}\n",

|

| 316 |

+

"#@markdown ---\n",

|

| 317 |

+

"\n",

|

| 318 |

+

"#@markdown **Classifier dimension**\n",

|

| 319 |

+

"#@markdown - The dimension of the classifier layer (transformer, retrievalnet probes only)\n",

|

| 320 |

+

"classifier_dim = 4096 #@param {type:\"integer\"}\n",

|

| 321 |

+

"#@markdown ---\n",

|

| 322 |

+

"\n",

|

| 323 |

+

"#@markdown **Classifier Dropout**\n",

|

| 324 |

+

"#@markdown - Dropout rate for the classifier layer\n",

|

| 325 |

+

"classifier_dropout = 0.2 #@param {type:\"number\"}\n",

|

| 326 |

+

"#@markdown ---\n",

|

| 327 |

+

"\n",

|

| 328 |

+

"#@markdown **Number of heads**\n",

|

| 329 |

+

"#@markdown - Number of attention heads in models with attention\n",

|

| 330 |

+

"#@markdown - Between `hidden_dim // 128` and `hidden_dim // 32` is recommended\n",

|

| 331 |

+

"n_heads = 4 #@param {type:\"integer\"}\n",

|

| 332 |

+

"#@markdown ---\n",

|

| 333 |

+

"\n",

|

| 334 |

+

"#@markdown **Rotary Embeddings**\n",

|

| 335 |

+

"#@markdown - If true, rotary embeddings are used with attention layers\n",

|

| 336 |

+

"rotary = True #@param {type:\"boolean\"}\n",

|

| 337 |

+

"#@markdown ---\n",

|

| 338 |

+

"\n",

|

| 339 |

+

"#@markdown **Probe Pooling Types**\n",

|

| 340 |

+

"#@markdown - If more than one is passed, embeddings are concatenated\n",

|

| 341 |

+

"#@markdown Valid options (comma-separated):\n",

|

| 342 |

+

"#@markdown - `mean, max, norm, median, std, var, cls`\n",

|

| 343 |

+

"#@markdown - Determines how the transformer or retrievalnet embeddings are pooled for sequence-wise tasks\n",

|

| 344 |

+

"probe_pooling_types_str = \"mean, cls\" #@param {type:\"string\"}\n",

|

| 345 |

+

"\n",

|

| 346 |

+

"probe_pooling_types = [p.strip() for p in probe_pooling_types_str.split(\",\") if p.strip()]\n",

|

| 347 |

+

"\n",

|

| 348 |

+

"main.full_args.probe_type = probe_type\n",

|

| 349 |

+

"main.full_args.tokenwise = tokenwise\n",

|

| 350 |

+

"main.full_args.pre_ln = pre_ln\n",

|

| 351 |

+

"main.full_args.n_layers = n_layers\n",

|

| 352 |

+

"main.full_args.hidden_dim = hidden_dim\n",

|

| 353 |

+

"main.full_args.dropout = dropout\n",

|

| 354 |

+

"main.full_args.classifier_dim = classifier_dim\n",

|

| 355 |

+

"main.full_args.classifier_dropout = classifier_dropout\n",

|

| 356 |

+

"main.full_args.n_heads = n_heads\n",

|

| 357 |

+

"main.full_args.rotary = rotary\n",

|

| 358 |

+

"main.full_args.probe_pooling_types = probe_pooling_types\n",

|

| 359 |

+

"\n",

|

| 360 |

+

"main.probe_args = ProbeArguments(**main.full_args.__dict__)\n",

|

| 361 |

+

"args_dict = {k: v for k, v in main.full_args.__dict__.items() if k != 'all_seqs' and 'token' not in k.lower() and 'api' not in k.lower()}\n",

|

| 362 |

+

"main.logger_args = SimpleNamespace(**args_dict)\n",

|

| 363 |

+

"main._write_args()\n",

|

| 364 |

+

"\n",

|

| 365 |

+

"#@markdown ---\n",

|

| 366 |

+

"#@markdown Press play to configure the probe:\n"

|

| 367 |

+

]

|

| 368 |

+

},

|

| 369 |

+

{

|

| 370 |

+

"cell_type": "code",

|

| 371 |

+

"execution_count": 8,

|

| 372 |

+

"metadata": {

|

| 373 |

+

"id": "8W4OYnn4uIyU"

|

| 374 |

+

},

|

| 375 |

+

"outputs": [],

|

| 376 |

+

"source": [

|

| 377 |

+

"#@title **6. Training Settings**\n",

|

| 378 |

+

"\n",

|

| 379 |

+

"#@markdown **Use LoRA**\n",

|

| 380 |

+

"#@markdown - If true, LoRA is applied to the base model\n",

|

| 381 |

+

"use_lora = False #@param {type:\"boolean\"}\n",

|

| 382 |

+

"#@markdown ---\n",

|

| 383 |

+

"\n",

|

| 384 |

+

"#@markdown **Hybrid Probe**\n",

|

| 385 |

+

"#@markdown - If true, the probe is trained on frozen embeddings\n",

|

| 386 |

+

"#@markdown - Then, the base model is finetuned alongside the probe\n",

|

| 387 |

+

"hybrid_probe = False #@param {type:\"boolean\"}\n",

|

| 388 |

+

"#@markdown ---\n",

|

| 389 |

+

"\n",

|

| 390 |

+

"#@markdown **Full Finetuning**\n",

|

| 391 |

+

"#@markdown - If true, the base model is finetuned for the task\n",

|

| 392 |

+

"full_finetuning = False #@param {type:\"boolean\"}\n",

|

| 393 |

+

"#@markdown ---\n",

|

| 394 |

+

"\n",

|

| 395 |

+

"#@markdown **Number of epochs**\n",

|

| 396 |

+

"num_epochs = 200 #@param {type:\"integer\"}\n",

|

| 397 |

+

"#@markdown ---\n",

|

| 398 |

+

"\n",

|

| 399 |

+

"#@markdown **Trainer Batch Size**\n",

|

| 400 |

+

"#@markdown - The batch size for probe training\n",

|

| 401 |

+

"#@markdown - We recommend between 32 and 256 with some combination of this and gradient accumulation steps\n",

|

| 402 |

+

"trainer_batch_size = 64 #@param {type:\"integer\"}\n",

|

| 403 |

+

"#@markdown ---\n",

|

| 404 |

+

"\n",

|

| 405 |

+

"#@markdown **Gradient Accumulation Steps**\n",

|

| 406 |

+

"gradient_accumulation_steps = 1 #@param {type:\"integer\"}\n",

|

| 407 |

+

"#@markdown ---\n",

|

| 408 |

+

"\n",

|

| 409 |

+

"#@markdown **Learning Rate**\n",

|

| 410 |

+

"lr = 0.0001 #@param {type:\"number\"}\n",

|

| 411 |

+

"#@markdown ---\n",

|

| 412 |

+

"\n",

|

| 413 |

+

"#@markdown **Weight Decay**\n",

|

| 414 |

+

"#@markdown - If you are having issues with overfitting, try increasing this\n",

|

| 415 |

+

"weight_decay = 0.0 #@param {type:\"number\"}\n",

|

| 416 |

+

"#@markdown ---\n",

|

| 417 |

+

"\n",

|

| 418 |

+

"#@markdown **Early Stopping Patience**\n",

|

| 419 |

+

"#@markdown - We recommend keeping the epochs high and using this to gauge convergence\n",

|

| 420 |

+

"patience = 10 #@param {type:\"integer\"}\n",

|

| 421 |

+

"#@markdown ---\n",

|

| 422 |

+

"\n",

|

| 423 |

+

"main.full_args.use_lora = use_lora\n",

|

| 424 |

+

"main.full_args.hybrid_probe = hybrid_probe\n",

|

| 425 |

+

"main.full_args.full_finetuning = full_finetuning\n",

|

| 426 |

+

"main.full_args.num_epochs = num_epochs\n",

|

| 427 |

+

"main.full_args.trainer_batch_size = trainer_batch_size\n",

|

| 428 |

+

"main.full_args.gradient_accumulation_steps = gradient_accumulation_steps\n",

|

| 429 |

+

"main.full_args.lr = lr\n",

|

| 430 |

+

"main.full_args.weight_decay = weight_decay\n",

|

| 431 |

+

"main.full_args.patience = patience\n",

|

| 432 |

+

"\n",

|

| 433 |

+

"main.trainer_args = TrainerArguments(**main.full_args.__dict__)\n",

|

| 434 |

+

"args_dict = {k: v for k, v in main.full_args.__dict__.items() if k != 'all_seqs' and 'token' not in k.lower() and 'api' not in k.lower()}\n",

|

| 435 |

+

"main.logger_args = SimpleNamespace(**args_dict)\n",

|

| 436 |

+

"main._write_args()\n",

|

| 437 |

+

"\n",

|

| 438 |

+

"#@markdown ---\n",

|

| 439 |

+

"#@markdown Press play to run the trainer:\n",

|

| 440 |

+

"main.run_nn_probe()"

|

| 441 |

+

]

|

| 442 |

+

},

|

| 443 |

+

{

|

| 444 |

+

"cell_type": "code",

|

| 445 |

+

"execution_count": null,

|

| 446 |

+

"metadata": {

|

| 447 |

+

"id": "GdAk8wxWuJWO"

|

| 448 |

+

},

|

| 449 |

+

"outputs": [],

|

| 450 |

+

"source": [

|

| 451 |

+

"#@title **7. Log Replay**\n",

|

| 452 |

+

"\n",

|

| 453 |

+

"#@markdown **Replay Path**\n",

|

| 454 |

+

"#@markdown - Replay everything from a log by passing the path to the log file\n",

|

| 455 |

+

"replay_path = \"\" #@param {type:\"string\"}\n",

|

| 456 |

+

"#@markdown ---\n",

|

| 457 |

+

"\n",

|

| 458 |

+

"from logger import LogReplayer\n",

|

| 459 |

+

"replayer = LogReplayer(replay_path)\n",

|

| 460 |

+

"replay_args = replayer.parse_log()\n",

|

| 461 |

+

"replay_args.replay_path = replay_path\n",

|

| 462 |

+

"\n",

|

| 463 |

+

"for key, value in replay_args.__dict__.items():\n",

|

| 464 |

+

" if key in main.full_args.__dict__:\n",

|

| 465 |

+

" setattr(main.full_args, key, value)\n",

|

| 466 |

+

"\n",

|

| 467 |

+

"replayer.run_replay(main)\n",

|

| 468 |

+

"\n",

|

| 469 |

+

"#@markdown ---\n",

|

| 470 |

+

"#@markdown Press play to replay logs:\n"

|

| 471 |

+

]

|

| 472 |

+

}

|

| 473 |

+

],

|

| 474 |

+

"metadata": {

|

| 475 |

+

"colab": {

|

| 476 |

+

"provenance": []

|

| 477 |

+

},

|

| 478 |

+

"kernelspec": {

|

| 479 |

+

"display_name": "Python 3",

|

| 480 |

+

"name": "python3"

|

| 481 |

+

},

|

| 482 |

+

"language_info": {

|

| 483 |

+

"codemirror_mode": {

|

| 484 |

+

"name": "ipython",

|

| 485 |

+

"version": 3

|

| 486 |

+

},

|

| 487 |

+

"file_extension": ".py",

|

| 488 |

+

"mimetype": "text/x-python",

|

| 489 |

+

"name": "python",

|

| 490 |

+

"nbconvert_exporter": "python",

|

| 491 |

+

"pygments_lexer": "ipython3",

|

| 492 |

+

"version": "3.11.8"

|

| 493 |

+

}

|

| 494 |

+

},

|

| 495 |

+

"nbformat": 4,

|

| 496 |

+

"nbformat_minor": 0

|

| 497 |

+

}

|
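The embedding and probe cells in the notebook above both split a comma-separated pooling string into a list, and the embedding cell documents that `parti` must be used on its own. A minimal sketch of that parsing with the documented constraints made explicit follows. The names `VALID_POOLING`, `parse_pooling_types`, and `pooled_dim` are hypothetical and not part of Protify, and the size calculation assumes each non-`parti` pooling type yields one `(d,)` vector before concatenation.

```python
from typing import List

# Options listed in the notebook's "Embedding Pooling Types" field.
VALID_POOLING = {"mean", "max", "norm", "median", "std", "var", "cls", "parti"}


def parse_pooling_types(raw: str) -> List[str]:
    """Split a comma-separated string the same way the notebook cells do,
    then enforce the constraints stated in the cell's markdown."""
    types = [p.strip() for p in raw.split(",") if p.strip()]
    unknown = [t for t in types if t not in VALID_POOLING]
    if unknown:
        raise ValueError(f"Unknown pooling types: {unknown}")
    if "parti" in types and len(types) > 1:
        raise ValueError("`parti` must be used on its own")
    return types


def pooled_dim(d: int, types: List[str]) -> int:
    """Size of the concatenated pooled embedding.

    Assumption (not confirmed by the source): every non-`parti` pooling
    type produces a vector of size d, so k types give k * d features.
    """
    if "parti" in types:
        raise ValueError("output size of `parti` pooling is not modelled here")
    return d * len(types)


if __name__ == "__main__":
    types = parse_pooling_types("mean, std")
    print(types, pooled_dim(1280, types))
```

Validating the string before embedding starts means a typo in the form field fails immediately rather than after the base model has been loaded.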

data/pyproject.toml

ADDED

|

@@ -0,0 +1,41 @@

|

| 1 |

+

[tool.poetry]

|

| 2 |

+

name = "Protify"

|

| 3 |

+

version = "0.0.5"

|

| 4 |

+

description = "Low code molecular property prediction"

|

| 5 |

+

authors = ["Synthyra <info@synthyra.com>"]

|

| 6 |

+

keywords = ["plm", "protein", "transformer"]

|

| 7 |

+

repository = "https://github.com/Synthyra/Protify"

|

| 8 |

+

license = "Protify License"

|

| 9 |

+

readme = "README.md"

|

| 10 |

+

include = [

|

| 11 |

+

"LICENSE.md",

|

| 12 |

+

"README.md",

|

| 13 |

+

]

|

| 14 |

+

|

| 15 |

+

packages = [

|

| 16 |

+

{ include = "protify", from = "src" },

|

| 17 |

+

]

|

| 18 |

+

|

| 19 |

+

[tool.poetry.dependencies]

|

| 20 |

+

python = "^3.8"

|

| 21 |

+

torch = ">=2.5.1"

|

| 22 |

+

torchvision = "*"

|

| 23 |

+

transformers = ">=4.47"

|

| 24 |

+

accelerate = ">=1.1.0"

|

| 25 |

+

tf-keras = "*"

|

| 26 |

+

tensorflow = "*"

|

| 27 |

+

torchinfo = "*"

|

| 28 |

+

torchmetrics = "*"

|

| 29 |

+

scikit-learn = "*"

|

| 30 |

+

scipy = "*"

|

| 31 |

+

datasets = "*"

|

| 32 |

+

einops = "*"

|

| 33 |

+

numpy = "==1.26.1"

|

| 34 |

+

networkx = ">=3.4.2"

|

| 35 |

+

xgboost = "*"

|

| 36 |

+

lightgbm = "*"

|

| 37 |

+

pyfiglet = "*"

|

| 38 |

+

|

| 39 |

+

[build-system]

|

| 40 |

+

requires = ["poetry-core>=1.0.0"]

|

| 41 |

+

build-backend = "poetry.core.masonry.api"

|

data/requirements.txt

ADDED

|

@@ -0,0 +1,20 @@

|

| 1 |

+

torch>=2.5.1

|

| 2 |

+

torchvision

|

| 3 |

+

transformers>=4.47

|

| 4 |

+

accelerate>=1.1.0

|

| 5 |

+

peft

|

| 6 |

+

tf-keras

|

| 7 |

+

tensorflow

|

| 8 |

+

torchinfo

|

| 9 |

+

torchmetrics

|

| 10 |

+

scikit-learn==1.5.0

|

| 11 |

+

scipy>=1.13.1

|

| 12 |

+

datasets

|

| 13 |

+

einops

|

| 14 |

+

numpy==1.26.4

|

| 15 |

+

networkx>=3.4.2

|

| 16 |

+

xgboost

|

| 17 |

+

lightgbm

|

| 18 |

+

pyfiglet

|

| 19 |

+

seaborn>=0.13.2

|

| 20 |

+

matplotlib>=3.9.0

|
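The dependency lists in `pyproject.toml` and `requirements.txt` above are maintained separately, so they can drift apart. A small sketch that compares the two listings as they appear in this diff is given below. The helper names `parse_requirements` and `compare` are hypothetical, the constraint strings are copied by hand from the two files, and `"*"` in the Poetry listing is treated as "no constraint" for the comparison.

```python
import re
from typing import Dict, List, Tuple

# Constraints copied from [tool.poetry.dependencies] (python itself omitted).
PYPROJECT = {
    "torch": ">=2.5.1", "torchvision": "*", "transformers": ">=4.47",
    "accelerate": ">=1.1.0", "tf-keras": "*", "tensorflow": "*",
    "torchinfo": "*", "torchmetrics": "*", "scikit-learn": "*",
    "scipy": "*", "datasets": "*", "einops": "*", "numpy": "==1.26.1",
    "networkx": ">=3.4.2", "xgboost": "*", "lightgbm": "*", "pyfiglet": "*",
}

# Contents of requirements.txt as shown in the diff.
REQUIREMENTS = """
torch>=2.5.1
torchvision
transformers>=4.47
accelerate>=1.1.0
peft
tf-keras
tensorflow
torchinfo
torchmetrics
scikit-learn==1.5.0
scipy>=1.13.1
datasets
einops
numpy==1.26.4
networkx>=3.4.2
xgboost
lightgbm
pyfiglet
seaborn>=0.13.2
matplotlib>=3.9.0
"""


def parse_requirements(text: str) -> Dict[str, str]:
    """Map package name -> version specifier ('' when unconstrained)."""
    pins = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        name, spec = re.match(r"([A-Za-z0-9_.-]+)(.*)", line).groups()
        pins[name] = spec.strip()
    return pins


def compare(pyproject: Dict[str, str], reqs: Dict[str, str]) -> Tuple[List[str], List[str]]:
    """Return (packages only in requirements.txt, packages whose specifiers differ)."""
    normalised = {k: ("" if v == "*" else v) for k, v in pyproject.items()}
    only_in_reqs = sorted(set(reqs) - set(normalised))
    differing = sorted(k for k in set(reqs) & set(normalised) if reqs[k] != normalised[k])
    return only_in_reqs, differing


if __name__ == "__main__":
    only_in_reqs, differing = compare(PYPROJECT, parse_requirements(REQUIREMENTS))
    print("only in requirements.txt:", only_in_reqs)
    print("different constraints:", differing)
```

Run against the two listings as they stand, this reports `peft`, `seaborn`, and `matplotlib` as missing from `pyproject.toml`, and differing constraints for `numpy`, `scikit-learn`, and `scipy`.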

data/setup_bioenv.sh

ADDED

|

@@ -0,0 +1,45 @@

|

| 1 |

+

#!/bin/bash

|

| 2 |

+

|

| 3 |

+

# chmod +x setup_bioenv.sh

|

| 4 |

+

# ./setup_bioenv.sh

|

| 5 |

+

|

| 6 |

+

# Set up error handling

|

| 7 |

+

set -e # Exit immediately if a command exits with a non-zero status

|

| 8 |

+

|

| 9 |

+

echo "Setting up Python virtual environment for Protify..."

|

| 10 |

+

|

| 11 |

+

# Create virtual environment

|

| 12 |

+

python3 -m venv ~/bioenv

|

| 13 |

+

|

| 14 |

+

# Activate virtual environment

|

| 15 |

+

source ~/bioenv/bin/activate

|

| 16 |

+

|

| 17 |

+

# Update pip and setuptools

|

| 18 |

+

echo "Upgrading pip and setuptools..."

|

| 19 |

+

pip install --upgrade pip setuptools

|

| 20 |

+

|

| 21 |

+

# Install torch and torchvision

|

| 22 |

+

echo "Installing torch and torchvision..."

|

| 23 |

+

pip install --force-reinstall torch torchvision --index-url https://download.pytorch.org/whl/cu126

|

| 24 |

+

|

| 25 |

+

# Install the remaining requirements

|

| 26 |

+

echo "Installing requirements"

|

| 27 |

+

pip install -r requirements.txt

|

| 28 |

+

|

| 29 |

+

# List installed packages for verification

|

| 30 |

+

echo -e "\nInstalled packages:"

|

| 31 |

+

pip list

|

| 32 |

+

|

| 33 |

+

# Instructions for future use

|

| 34 |

+