Iwin Transformer: Hierarchical Vision Transformer using Interleaved Windows

Abstract

Iwin Transformer, a hierarchical vision transformer without position embeddings, combines interleaved window attention and depthwise separable convolution for efficient global information exchange, achieving competitive performance in image classification, semantic segmentation, and video action recognition.

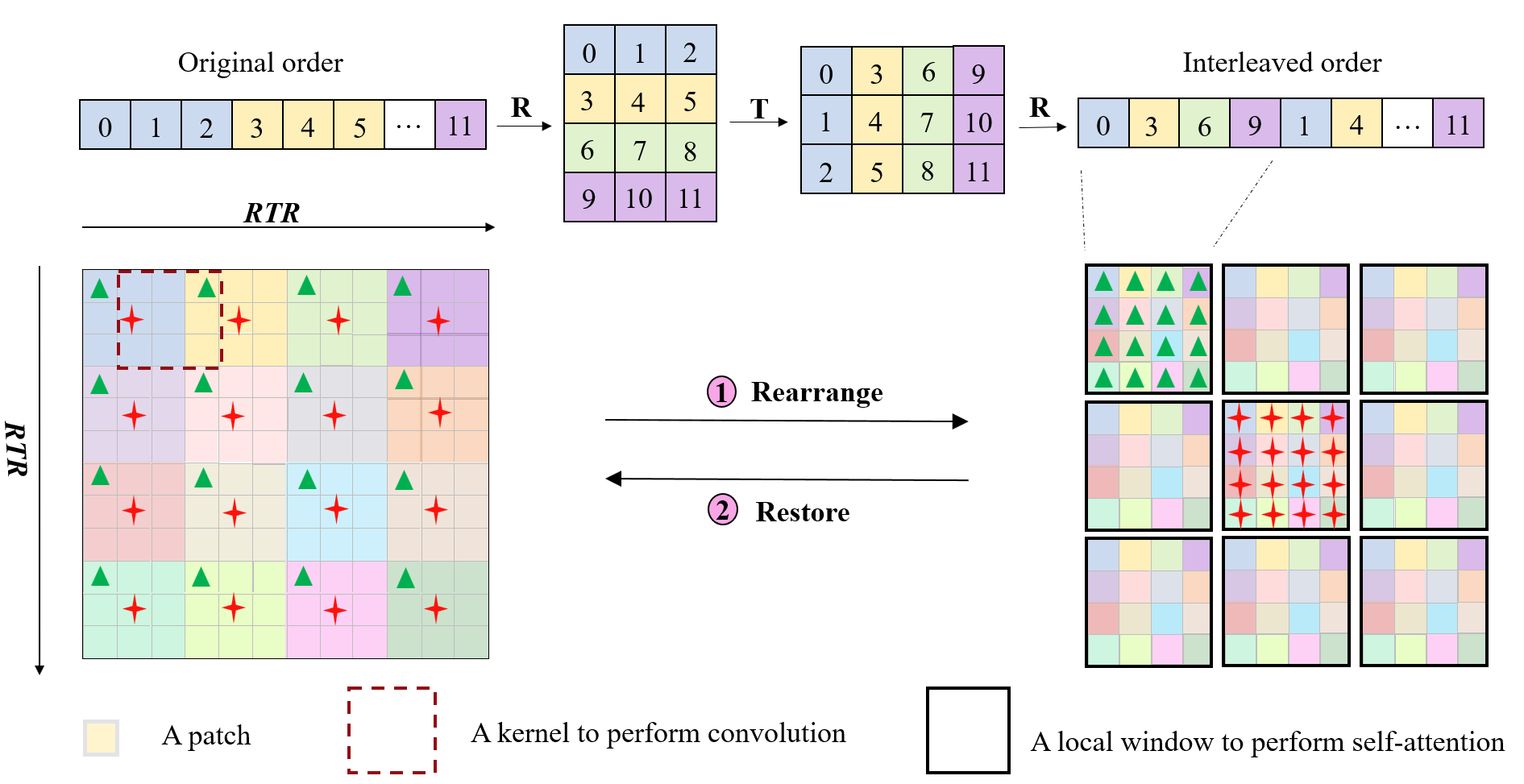

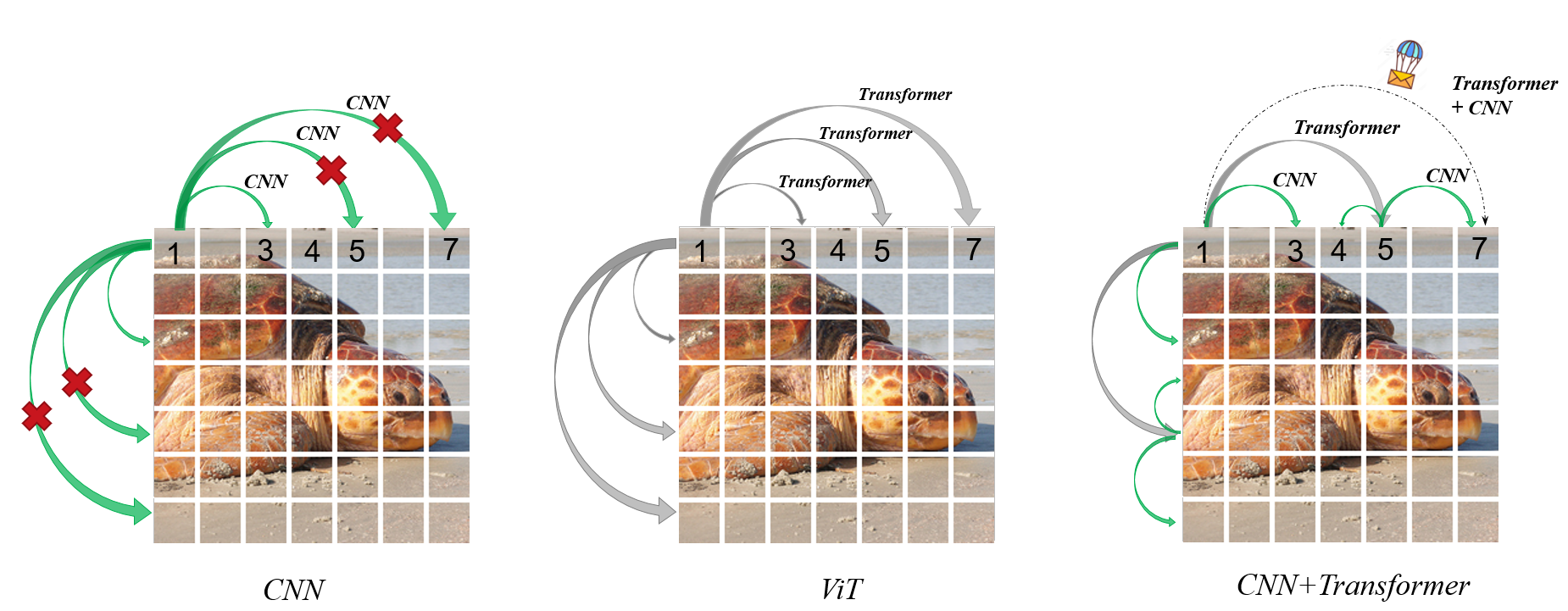

We introduce Iwin Transformer, a novel position-embedding-free hierarchical vision transformer that can be fine-tuned directly from low to high resolution, through the collaboration of interleaved window attention and depthwise separable convolution. This approach uses attention to connect distant tokens and convolution to link neighboring tokens, enabling global information exchange within a single module and overcoming Swin Transformer's limitation of requiring two consecutive blocks to approximate global attention. Extensive experiments on visual benchmarks demonstrate that Iwin Transformer is strongly competitive in tasks such as image classification (87.4% top-1 accuracy on ImageNet-1K), semantic segmentation, and video action recognition. We also validate the effectiveness of the core component of Iwin as a standalone module that can seamlessly replace the self-attention module in class-conditional image generation. The concepts and methods introduced by the Iwin Transformer have the potential to inspire future research, such as Iwin 3D Attention in video generation. The code and models are available at https://github.com/cominder/Iwin-Transformer.
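To make the "attention for distant tokens, convolution for neighboring tokens" idea concrete, here is a minimal 1D sketch. It is not taken from the authors' code. It assumes that an interleaved window groups tokens sharing the same index modulo the number of windows, and that the convolution is zero-padded and centered. The function names, the 1D simplification, and the kernel size are all hypothetical choices made for illustration. The sketch only tracks which tokens can exchange information, not the actual feature values.

```python
def interleaved_windows(n_tokens, window_size):
    """Group token indices so each window holds tokens a fixed stride apart.

    Assumption: tokens with the same index modulo the number of windows
    share a window. This is one plausible reading of "interleaved".
    """
    if n_tokens % window_size != 0:
        raise ValueError("n_tokens must be divisible by window_size")
    stride = n_tokens // window_size  # number of windows, and gap between members
    return [list(range(start, n_tokens, stride)) for start in range(stride)]


def regular_windows(n_tokens, window_size):
    """Group contiguous token indices, as in ordinary window attention."""
    return [list(range(s, s + window_size)) for s in range(0, n_tokens, window_size)]


def conv_neighbors(index, n_tokens, kernel_size):
    """Indices covered by a zero-padded 1D convolution centered at `index`."""
    half = kernel_size // 2
    return [j for j in range(index - half, index + half + 1) if 0 <= j < n_tokens]


def reachable(n_tokens, windows, kernel_size):
    """For each token, the set of tokens it can receive information from
    after window attention followed by a local convolution."""
    window_of = {i: set(w) for w in windows for i in w}
    result = []
    for i in range(n_tokens):
        seen = set()
        # The convolution mixes attention outputs of nearby tokens, and each
        # of those outputs already depends on that token's whole window.
        for j in conv_neighbors(i, n_tokens, kernel_size):
            seen |= window_of[j]
        result.append(seen)
    return result


if __name__ == "__main__":
    n, m, k = 16, 4, 7  # toy sizes chosen for illustration only
    print(interleaved_windows(n, m)[0])  # [0, 4, 8, 12]
    print(min(len(s) for s in reachable(n, interleaved_windows(n, m), k)))
    print(min(len(s) for s in reachable(n, regular_windows(n, m), k)))
```

In this toy setup, every token reaches all 16 tokens after a single interleaved-attention-plus-convolution step, whereas contiguous windows with the same kernel leave border tokens confined to their own window. The kernel size of 7 was picked so that the convolution spans one full stride even at the sequence borders; the kernel size used in the actual model may differ.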

Community

This is an automated message from the Librarian Bot. I found the following papers similar to this paper.

The following papers were recommended by the Semantic Scholar API:

- FSATFusion: Frequency-Spatial Attention Transformer for Infrared and Visible Image Fusion (2025)

- S2AFormer: Strip Self-Attention for Efficient Vision Transformer (2025)

- Speed-up of Vision Transformer Models by Attention-aware Token Filtering (2025)

- Polyline Path Masked Attention for Vision Transformer (2025)

- DuoFormer: Leveraging Hierarchical Representations by Local and Global Attention Vision Transformer (2025)

- Token Transforming: A Unified and Training-Free Token Compression Framework for Vision Transformer Acceleration (2025)

