Merge pull request #1171 from danielaskdd/add-temperature

- .github/workflows/linting.yaml +1 -1

- README-zh.md +1380 -0

- README.assets/b2aaf634151b4706892693ffb43d9093.png +3 -0

- README.assets/iShot_2025-03-23_12.40.08.png +3 -0

- README.md +55 -226

- env.example +11 -9

- lightrag/__init__.py +1 -1

- lightrag/api/README-zh.md +559 -0

- lightrag/api/README.md +3 -4

- lightrag/api/__init__.py +1 -1

- lightrag/api/lightrag_server.py +30 -22

- lightrag/api/routers/document_routes.py +38 -16

- lightrag/api/routers/graph_routes.py +5 -5

- lightrag/api/routers/ollama_api.py +11 -6

- lightrag/api/routers/query_routes.py +5 -5

- lightrag/api/utils_api.py +155 -42

- lightrag/api/webui/assets/index-CJhG62dt.css +0 -0

- lightrag/api/webui/assets/index-Cq65VeVX.css +0 -0

- lightrag/api/webui/assets/{index-DlScqWrq.js → index-DUmKHl1m.js} +0 -0

- lightrag/api/webui/index.html +0 -0

- lightrag/kg/faiss_impl.py +16 -24

- lightrag/kg/nano_vector_db_impl.py +18 -26

- lightrag/kg/networkx_impl.py +16 -24

- lightrag/kg/shared_storage.py +26 -34

- lightrag_webui/src/App.tsx +15 -15

- lightrag_webui/src/components/ApiKeyAlert.tsx +34 -25

- lightrag_webui/src/components/MessageAlert.tsx +0 -56

- lightrag_webui/src/components/{graph → status}/StatusCard.tsx +0 -0

- lightrag_webui/src/components/{graph → status}/StatusIndicator.tsx +1 -1

- lightrag_webui/src/components/ui/AsyncSearch.tsx +71 -73

- lightrag_webui/src/features/SiteHeader.tsx +14 -12

- lightrag_webui/src/locales/ar.json +6 -0

- lightrag_webui/src/locales/en.json +6 -0

- lightrag_webui/src/locales/fr.json +6 -0

- lightrag_webui/src/locales/zh.json +6 -0

.github/workflows/linting.yaml

CHANGED

|

@@ -27,4 +27,4 @@ jobs:

|

|

| 27 |

pip install pre-commit

|

| 28 |

|

| 29 |

- name: Run pre-commit

|

| 30 |

-

run: pre-commit run --all-files

|

|

|

|

| 27 |

pip install pre-commit

|

| 28 |

|

| 29 |

- name: Run pre-commit

|

| 30 |

+

run: pre-commit run --all-files --show-diff-on-failure

|

README-zh.md

CHANGED

|

@@ -0,0 +1,1380 @@

|

| 1 |

+

# LightRAG: Simple and Fast Retrieval-Augmented Generation

|

| 2 |

+

|

| 3 |

+

<img src="./README.assets/b2aaf634151b4706892693ffb43d9093.png" width="800" alt="LightRAG Diagram">

|

| 4 |

+

|

| 5 |

+

## 🎉 News

|

| 6 |

+

|

| 7 |

+

- [X] [2025.03.18]🎯📢LightRAG now supports citations.

|

| 8 |

+

- [X] [2025.02.05]🎯📢Our team has released [VideoRAG](https://github.com/HKUDS/VideoRAG) for understanding extremely long-context videos.

|

| 9 |

+

- [X] [2025.01.13]🎯📢Our team has released [MiniRAG](https://github.com/HKUDS/MiniRAG), which simplifies RAG by using small models.

|

| 10 |

+

- [X] [2025.01.06]🎯📢You can now [use PostgreSQL for storage](#using-postgresql-for-storage).

|

| 11 |

+

- [X] [2024.12.31]🎯📢LightRAG now supports [deletion by document ID](https://github.com/HKUDS/LightRAG?tab=readme-ov-file#delete).

|

| 12 |

+

- [X] [2024.11.25]🎯📢LightRAG now supports seamless integration of [custom knowledge graphs](https://github.com/HKUDS/LightRAG?tab=readme-ov-file#insert-custom-kg), letting users enhance the system with their own domain expertise.

|

| 13 |

+

- [X] [2024.11.19]🎯📢A comprehensive guide to LightRAG is now available on [LearnOpenCV](https://learnopencv.com/lightrag). Many thanks to the blog author.

|

| 14 |

+

- [X] [2024.11.12]🎯📢LightRAG now supports [Oracle Database 23ai for all storage types (KV, vector, and graph)](https://github.com/HKUDS/LightRAG/blob/main/examples/lightrag_oracle_demo.py).

|

| 15 |

+

- [X] [2024.11.11]🎯📢LightRAG now supports [deleting entities by their names](https://github.com/HKUDS/LightRAG?tab=readme-ov-file#delete).

|

| 16 |

+

- [X] [2024.11.09]🎯📢Introducing the [LightRAG Gui](https://lightrag-gui.streamlit.app), which lets you insert, query, visualize, and download LightRAG knowledge.

|

| 17 |

+

- [X] [2024.11.04]🎯📢You can now [use Neo4J for storage](https://github.com/HKUDS/LightRAG?tab=readme-ov-file#using-neo4j-for-storage).

|

| 18 |

+

- [X] [2024.10.29]🎯📢LightRAG now supports multiple file types, including PDF, DOC, PPT, and CSV, via `textract`.

|

| 19 |

+

- [X] [2024.10.20]🎯📢We have added a new feature to LightRAG: graph visualization.

|

| 20 |

+

- [X] [2024.10.18]🎯📢We have added a link to a [LightRAG introduction video](https://youtu.be/oageL-1I0GE). Thanks to the author!

|

| 21 |

+

- [X] [2024.10.17]🎯📢We have created a [Discord channel](https://discord.gg/yF2MmDJyGJ)! You are welcome to join for sharing and discussion! 🎉🎉

|

| 22 |

+

- [X] [2024.10.16]🎯📢LightRAG now supports [Ollama models](https://github.com/HKUDS/LightRAG?tab=readme-ov-file#quick-start)!

|

| 23 |

+

- [X] [2024.10.15]🎯📢LightRAG now supports [Hugging Face models](https://github.com/HKUDS/LightRAG?tab=readme-ov-file#quick-start)!

|

| 24 |

+

|

| 25 |

+

<details>

|

| 26 |

+

<summary style="font-size: 1.4em; font-weight: bold; cursor: pointer; display: list-item;">

|

| 27 |

+

Algorithm Flowchart

|

| 28 |

+

</summary>

|

| 29 |

+

|

| 30 |

+

|

| 31 |

+

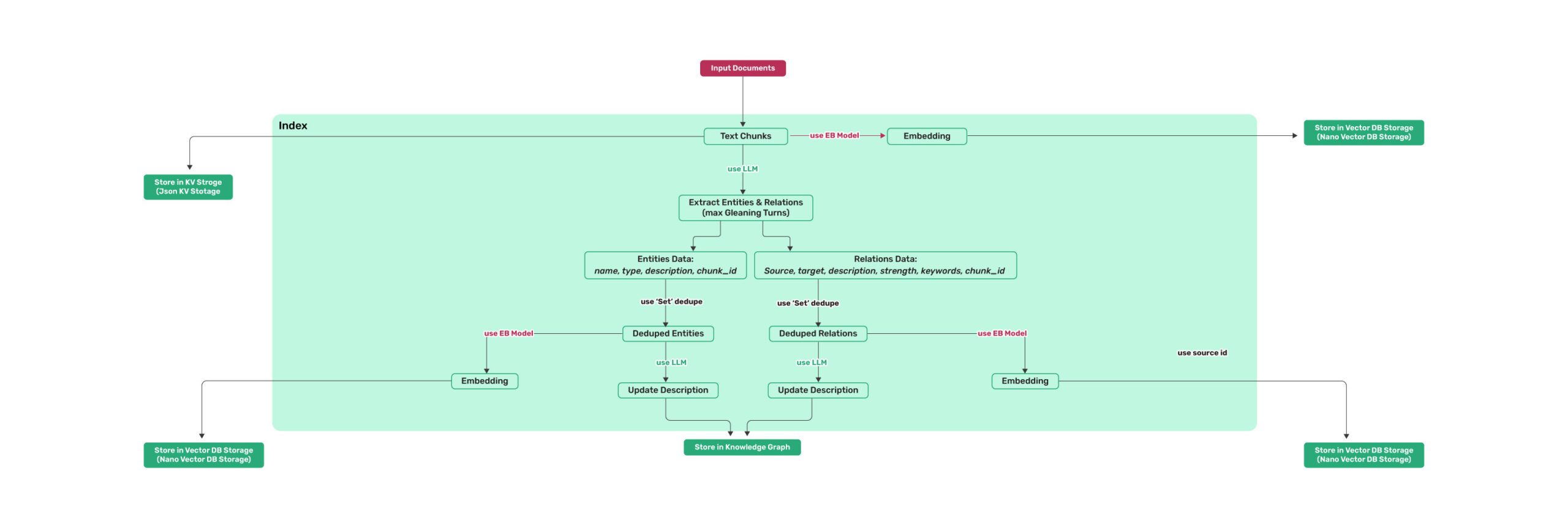

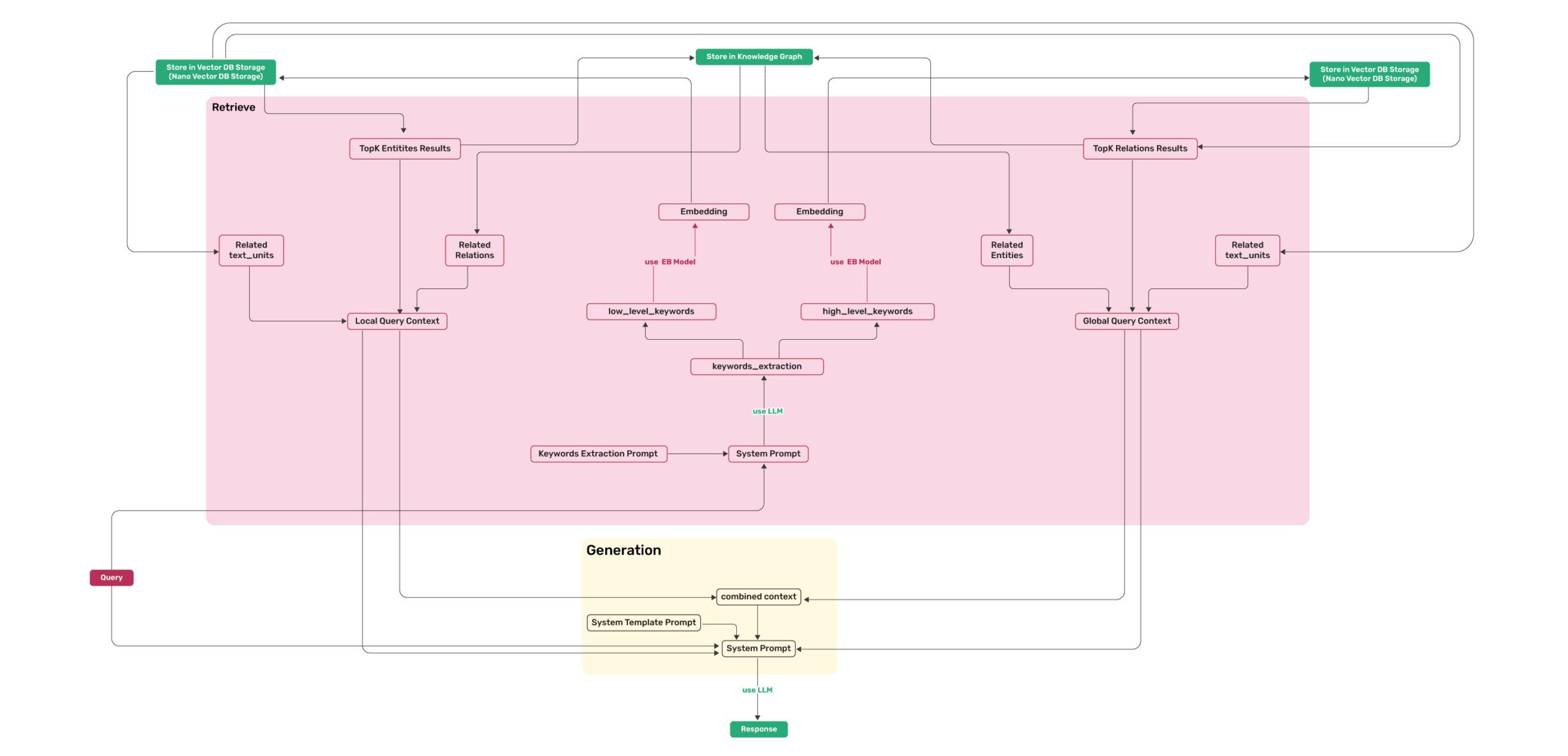

*Figure 1: LightRAG indexing flowchart - Image source: [Source](https://learnopencv.com/lightrag/)*

|

| 32 |

+

|

| 33 |

+

*Figure 2: LightRAG retrieval and querying flowchart - Image source: [Source](https://learnopencv.com/lightrag/)*

|

| 34 |

+

|

| 35 |

+

</details>

|

| 36 |

+

|

| 37 |

+

## Installation

|

| 38 |

+

|

| 39 |

+

### Install LightRAG Core

|

| 40 |

+

|

| 41 |

+

* Install from source (recommended)

|

| 42 |

+

|

| 43 |

+

```bash

|

| 44 |

+

cd LightRAG

|

| 45 |

+

pip install -e .

|

| 46 |

+

```

|

| 47 |

+

|

| 48 |

+

* Install from PyPI

|

| 49 |

+

|

| 50 |

+

```bash

|

| 51 |

+

pip install lightrag-hku

|

| 52 |

+

```

|

| 53 |

+

|

| 54 |

+

### Install LightRAG Server

|

| 55 |

+

|

| 56 |

+

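LightRAG Server is designed to provide Web UI and API support. The Web UI facilitates document indexing, knowledge graph exploration, and a simple RAG query interface. LightRAG Server also provides an Ollama-compatible interface that presents LightRAG as an Ollama chat model. This lets AI chat bots such as Open WebUI access LightRAG easily.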

|

| 57 |

+

|

| 58 |

+

* Install from PyPI

|

| 59 |

+

|

| 60 |

+

```bash

|

| 61 |

+

pip install "lightrag-hku[api]"

|

| 62 |

+

```

|

| 63 |

+

|

| 64 |

+

* Install from source

|

| 65 |

+

|

| 66 |

+

```bash

|

| 67 |

+

# Create a Python virtual environment if necessary

|

| 68 |

+

# Install in editable mode with API support

|

| 69 |

+

pip install -e ".[api]"

|

| 70 |

+

```

|

| 71 |

+

|

| 72 |

+

**For more information about LightRAG Server, see [LightRAG Server](./lightrag/api/README.md).**

|

| 73 |

+

|

| 74 |

+

## Quick Start

|

| 75 |

+

|

| 76 |

+

* A [video demo](https://www.youtube.com/watch?v=g21royNJ4fw) shows how to run LightRAG locally.

|

| 77 |

+

* All the code can be found in `examples`.

|

| 78 |

+

* If you use OpenAI models, set your OpenAI API key in the environment: `export OPENAI_API_KEY="sk-..."`.

|

| 79 |

+

* Download the demo text "A Christmas Carol" by Charles Dickens:

|

| 80 |

+

|

| 81 |

+

```bash

|

| 82 |

+

curl https://raw.githubusercontent.com/gusye1234/nano-graphrag/main/tests/mock_data.txt > ./book.txt

|

| 83 |

+

```

|

| 84 |

+

|

| 85 |

+

## Query

|

| 86 |

+

|

| 87 |

+

Use the following Python snippet (in a script) to initialize LightRAG and run queries:

|

| 88 |

+

|

| 89 |

+

```python
import os
import asyncio
from lightrag import LightRAG, QueryParam
from lightrag.llm.openai import gpt_4o_mini_complete, gpt_4o_complete, openai_embed
from lightrag.kg.shared_storage import initialize_pipeline_status
from lightrag.utils import setup_logger

setup_logger("lightrag", level="INFO")

async def initialize_rag():
    rag = LightRAG(
        working_dir="your/path",
        embedding_func=openai_embed,
        llm_model_func=gpt_4o_mini_complete
    )

    await rag.initialize_storages()
    await initialize_pipeline_status()

    return rag

def main():
    # Initialize the RAG instance
    rag = asyncio.run(initialize_rag())
    # Insert text
    rag.insert("Your text")

    # Perform naive search
    mode="naive"
    # Perform local search
    mode="local"
    # Perform global search
    mode="global"
    # Perform hybrid search
    mode="hybrid"
    # Mix mode integrates knowledge graph and vector retrieval
    mode="mix"

    rag.query(
        "What are the main themes of this story?",
        param=QueryParam(mode=mode)
    )

if __name__ == "__main__":
    main()
```

|

| 136 |

+

|

| 137 |

+

### Query Parameters

|

| 138 |

+

|

| 139 |

+

```python
class QueryParam:
    mode: Literal["local", "global", "hybrid", "naive", "mix"] = "global"
    """Specifies the retrieval mode:
    - "local": Focuses on context-dependent information.
    - "global": Utilizes global knowledge.
    - "hybrid": Combines local and global retrieval methods.
    - "naive": Performs a basic search without advanced techniques.
    - "mix": Integrates knowledge graph and vector retrieval. Mix mode combines knowledge graph and vector search:
        - Uses both structured (KG) and unstructured (vector) information
        - Provides comprehensive answers by analyzing relationships and context
        - Supports image content through HTML img tags
        - Allows control over retrieval depth via the top_k parameter
    """
    only_need_context: bool = False
    """If True, only returns the retrieved context without generating a response."""
    response_type: str = "Multiple Paragraphs"
    """Defines the response format. Examples: 'Multiple Paragraphs', 'Single Paragraph', 'Bullet Points'."""
    top_k: int = 60
    """Number of top items to retrieve. Represents entities in 'local' mode and relationships in 'global' mode."""
    max_token_for_text_unit: int = 4000
    """Maximum number of tokens allowed for each retrieved text chunk."""
    max_token_for_global_context: int = 4000
    """Maximum number of tokens allocated for relationship descriptions in global retrieval."""
    max_token_for_local_context: int = 4000
    """Maximum number of tokens allocated for entity descriptions in local retrieval."""
    ids: list[str] | None = None  # Only supported for the PG vector database
    """List of IDs used to filter the RAG."""
    ...
```

|

| 169 |

+

|

| 170 |

+

> The default value of top_k can be changed via the environment variable TOP_K.
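
One way such an environment-driven default could be wired up is sketched below. This is a minimal illustration, not LightRAG's actual implementation: the `SketchQueryParam` class is hypothetical, and only the `TOP_K` variable name and the fallback of 60 are taken from the text above.

```python
import os
from dataclasses import dataclass, field


@dataclass
class SketchQueryParam:
    """Hypothetical stand-in for QueryParam; only top_k is modelled."""

    # Read TOP_K each time an instance is created; fall back to 60 if unset.
    top_k: int = field(default_factory=lambda: int(os.getenv("TOP_K", "60")))


# No TOP_K in the environment: the built-in default applies.
os.environ.pop("TOP_K", None)
default_param = SketchQueryParam()

# TOP_K set in the environment: new instances pick it up.
os.environ["TOP_K"] = "20"
env_param = SketchQueryParam()

# An explicit argument always wins over the environment.
explicit_param = SketchQueryParam(top_k=5)

print(default_param.top_k, env_param.top_k, explicit_param.top_k)
```

Using `default_factory` rather than a plain default means the variable is read at instantiation time instead of at import time; whether LightRAG reads it at import or at instantiation is not stated here.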

|

| 171 |

+

|

| 172 |

+

<details>

|

| 173 |

+

<summary> <b>Using OpenAI-like APIs</b> </summary>

|

| 174 |

+

|

| 175 |

+

* LightRAG also supports OpenAI-like chat/embedding APIs:

|

| 176 |

+

|

| 177 |

+

```python
async def llm_model_func(
    prompt, system_prompt=None, history_messages=[], keyword_extraction=False, **kwargs
) -> str:
    return await openai_complete_if_cache(
        "solar-mini",
        prompt,
        system_prompt=system_prompt,
        history_messages=history_messages,
        api_key=os.getenv("UPSTAGE_API_KEY"),
        base_url="https://api.upstage.ai/v1/solar",
        **kwargs
    )

async def embedding_func(texts: list[str]) -> np.ndarray:
    return await openai_embed(
        texts,
        model="solar-embedding-1-large-query",
        api_key=os.getenv("UPSTAGE_API_KEY"),
        base_url="https://api.upstage.ai/v1/solar"
    )

async def initialize_rag():
    rag = LightRAG(
        working_dir=WORKING_DIR,
        llm_model_func=llm_model_func,
        embedding_func=EmbeddingFunc(
            embedding_dim=4096,
            max_token_size=8192,
            func=embedding_func
        )
    )

    await rag.initialize_storages()
    await initialize_pipeline_status()

    return rag
```

|

| 215 |

+

|

| 216 |

+

</details>

|

| 217 |

+

|

| 218 |

+

<details>

|

| 219 |

+

<summary> <b>Using Hugging Face Models</b> </summary>

|

| 220 |

+

|

| 221 |

+

* If you want to use Hugging Face models, you only need to set up LightRAG as follows:

|

| 222 |

+

|

| 223 |

+

See `lightrag_hf_demo.py`

|

| 224 |

+

|

| 225 |

+

```python
# Initialize LightRAG with a Hugging Face model
rag = LightRAG(
    working_dir=WORKING_DIR,
    llm_model_func=hf_model_complete,  # Use a Hugging Face model for text generation
    llm_model_name='meta-llama/Llama-3.1-8B-Instruct',  # Model name from Hugging Face
    # Use a Hugging Face embedding function
    embedding_func=EmbeddingFunc(
        embedding_dim=384,
        max_token_size=5000,
        func=lambda texts: hf_embed(
            texts,
            tokenizer=AutoTokenizer.from_pretrained("sentence-transformers/all-MiniLM-L6-v2"),
            embed_model=AutoModel.from_pretrained("sentence-transformers/all-MiniLM-L6-v2")
        )
    ),
)
```

|

| 243 |

+

|

| 244 |

+

</details>

|

| 245 |

+

|

| 246 |

+

<details>

|

| 247 |

+

<summary> <b>Using Ollama Models</b> </summary>

|

| 248 |

+

|

| 249 |

+

### Overview

|

| 250 |

+

|

| 251 |

+

If you want to use Ollama models, you need to pull the model you plan to use as well as an embedding model, for example `nomic-embed-text`.

|

| 252 |

+

|

| 253 |

+

Then you only need to set up LightRAG as follows:

|

| 254 |

+

|

| 255 |

+

```python
# Initialize LightRAG with an Ollama model
rag = LightRAG(
    working_dir=WORKING_DIR,
    llm_model_func=ollama_model_complete,  # Use an Ollama model for text generation
    llm_model_name='your_model_name',  # Your model name
    # Use the Ollama embedding function
    embedding_func=EmbeddingFunc(
        embedding_dim=768,
        max_token_size=8192,
        func=lambda texts: ollama_embed(
            texts,
            embed_model="nomic-embed-text"
        )
    ),
)
```

|

| 272 |

+

|

| 273 |

+

### Increasing the context size

|

| 274 |

+

|

| 275 |

+

For LightRAG to work properly, the context should be at least 32k tokens. By default, Ollama models have a context size of 8k. You can increase it in one of two ways:

|

| 276 |

+

|

| 277 |

+

#### Increase the `num_ctx` parameter in the Modelfile.

|

| 278 |

+

|

| 279 |

+

1. Pull the model:

|

| 280 |

+

|

| 281 |

+

```bash

|

| 282 |

+

ollama pull qwen2

|

| 283 |

+

```

|

| 284 |

+

|

| 285 |

+

2. Display the model file:

|

| 286 |

+

|

| 287 |

+

```bash

|

| 288 |

+

ollama show --modelfile qwen2 > Modelfile

|

| 289 |

+

```

|

| 290 |

+

|

| 291 |

+

3. Edit the Modelfile by adding the following line:

|

| 292 |

+

|

| 293 |

+

```bash

|

| 294 |

+

PARAMETER num_ctx 32768

|

| 295 |

+

```

|

| 296 |

+

|

| 297 |

+

4. Create the modified model:

|

| 298 |

+

|

| 299 |

+

```bash

|

| 300 |

+

ollama create -f Modelfile qwen2m

|

| 301 |

+

```

|

| 302 |

+

|

| 303 |

+

#### Set `num_ctx` via the Ollama API.

|

| 304 |

+

|

| 305 |

+

You can use the `llm_model_kwargs` parameter to configure Ollama:

|

| 306 |

+

|

| 307 |

+

```python
rag = LightRAG(
    working_dir=WORKING_DIR,
    llm_model_func=ollama_model_complete,  # Use an Ollama model for text generation
    llm_model_name='your_model_name',  # Your model name
    llm_model_kwargs={"options": {"num_ctx": 32768}},
    # Use the Ollama embedding function (same name as in the overview example)
    embedding_func=EmbeddingFunc(
        embedding_dim=768,
        max_token_size=8192,
        func=lambda texts: ollama_embed(
            texts,
            embed_model="nomic-embed-text"
        )
    ),
)
```
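
To make the effect of the `options` dictionary concrete, the sketch below builds the kind of JSON body that Ollama's generate endpoint accepts, with `num_ctx` nested under `options`. It assumes that `llm_model_kwargs` is forwarded to the Ollama client more or less unchanged, which is not confirmed here; `build_generate_payload` is a hypothetical helper, and no request is actually sent.

```python
import json


def build_generate_payload(model: str, prompt: str, num_ctx: int = 32768) -> dict:
    """Build a request body for Ollama's generate endpoint with a custom context size."""
    return {
        "model": model,
        "prompt": prompt,
        "stream": False,
        # Per-request model options; num_ctx sets the context window in tokens.
        "options": {"num_ctx": num_ctx},
    }


payload = build_generate_payload("qwen2", "Hello")
body = json.dumps(payload)
print(body)
```

Setting the value per request avoids creating a separate model with a modified Modelfile, at the cost of having to pass the option on every call.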

|

| 324 |

+

|

| 325 |

+

#### Low RAM GPUs

|

| 326 |

+

|

| 327 |

+

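To run this experiment on a low-RAM GPU, you should select a small model and tune the context window (increasing the context increases memory consumption). For example, running this Ollama example on a repurposed mining GPU with 6 GB of RAM required setting the context size to 26k while using `gemma2:2b`. It was able to find 197 entities and 19 relations in `book.txt`.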

|

| 328 |

+

|

| 329 |

+

</details>

|

| 330 |

+

<details>

|

| 331 |

+

<summary> <b>LlamaIndex</b> </summary>

|

| 332 |

+

|

| 333 |

+

LightRAG supports integration with LlamaIndex.

|

| 334 |

+

|

| 335 |

+

1. **LlamaIndex** (`llm/llama_index_impl.py`):

|

| 336 |

+

- Integrates with OpenAI and other providers through LlamaIndex

|

| 337 |

+

- See the [LlamaIndex documentation](lightrag/llm/Readme.md) for detailed setup and examples

|

| 338 |

+

|

| 339 |

+

### Example Usage

|

| 340 |

+

|

| 341 |

+

```python
# Use LlamaIndex to access OpenAI directly
import asyncio
from lightrag import LightRAG, QueryParam
from lightrag.llm.llama_index_impl import llama_index_complete_if_cache, llama_index_embed
from llama_index.embeddings.openai import OpenAIEmbedding
from llama_index.llms.openai import OpenAI
from lightrag.kg.shared_storage import initialize_pipeline_status
from lightrag.utils import EmbeddingFunc, setup_logger

# Set up the log handler for LightRAG
setup_logger("lightrag", level="INFO")

async def initialize_rag():
    rag = LightRAG(
        working_dir="your/path",
        llm_model_func=llama_index_complete_if_cache,  # LlamaIndex-compatible completion function
        embedding_func=EmbeddingFunc(  # LlamaIndex-compatible embedding function
            embedding_dim=1536,
            max_token_size=8192,
            # embed_model is not defined in this snippet; it must be created beforehand
            func=lambda texts: llama_index_embed(texts, embed_model=embed_model)
        ),
    )

    await rag.initialize_storages()
    await initialize_pipeline_status()

    return rag

def main():
    # Initialize the RAG instance
    rag = asyncio.run(initialize_rag())

    with open("./book.txt", "r", encoding="utf-8") as f:
        rag.insert(f.read())

    # Perform naive search
    print(
        rag.query("What are the main themes of this story?", param=QueryParam(mode="naive"))
    )

    # Perform local search
    print(
        rag.query("What are the main themes of this story?", param=QueryParam(mode="local"))
    )

    # Perform global search
    print(
        rag.query("What are the main themes of this story?", param=QueryParam(mode="global"))
    )

    # Perform hybrid search
    print(
        rag.query("What are the main themes of this story?", param=QueryParam(mode="hybrid"))
    )

if __name__ == "__main__":
    main()
```

|

| 400 |

+

|

| 401 |

+

#### For detailed documentation and examples, see:

|

| 402 |

+

|

| 403 |

+

- [LlamaIndex documentation](lightrag/llm/Readme.md)

|

| 404 |

+

- [Direct OpenAI example](examples/lightrag_llamaindex_direct_demo.py)

|

| 405 |

+

- [LiteLLM proxy example](examples/lightrag_llamaindex_litellm_demo.py)

|

| 406 |

+

|

| 407 |

+

</details>

|

| 408 |

+

<details>

|

| 409 |

+

<summary> <b>Conversation History Support</b> </summary>

|

| 410 |

+

|

| 411 |

+

LightRAG now supports multi-turn dialogue through the conversation history feature. Here is how to use it:

|

| 412 |

+

|

| 413 |

+

```python
# Create the conversation history
conversation_history = [
    {"role": "user", "content": "What is the main character's attitude towards Christmas?"},
    {"role": "assistant", "content": "At the beginning of the story, Ebenezer Scrooge has a very negative attitude towards Christmas..."},
    {"role": "user", "content": "How does his attitude change?"}
]

# Create query parameters with the conversation history
query_param = QueryParam(
    mode="mix",  # or another mode: "local", "global", "hybrid"
    conversation_history=conversation_history,  # Add the conversation history
    history_turns=3  # Number of recent conversation turns to consider
)

# Make a query that takes the conversation history into account
response = rag.query(
    "What causes this change in his character?",
    param=query_param
)
```
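
As an illustration of what limiting the history to recent turns can mean, the sketch below trims a message list to the last few user turns. It is a rough model under stated assumptions, not LightRAG's own logic: `recent_history` is a hypothetical helper, and it assumes a turn begins with each `user` message.

```python
def recent_history(history: list[dict], history_turns: int) -> list[dict]:
    """Keep only the messages belonging to the last `history_turns` user turns."""
    if history_turns <= 0:
        return []
    # Indices at which a user message opens a new turn.
    starts = [i for i, msg in enumerate(history) if msg["role"] == "user"]
    if len(starts) <= history_turns:
        # Fewer turns than requested: keep everything.
        return list(history)
    return history[starts[-history_turns]:]


history = [
    {"role": "user", "content": "What is the main character's attitude towards Christmas?"},
    {"role": "assistant", "content": "At the beginning of the story, it is very negative..."},
    {"role": "user", "content": "How does his attitude change?"},
]

last_turn = recent_history(history, history_turns=1)
all_turns = recent_history(history, history_turns=3)
print(len(last_turn), len(all_turns))
```

Trimming by turns rather than by message count keeps each retained question together with its answer.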

|

| 434 |

+

|

| 435 |

+

</details>

|

| 436 |

+

|

| 437 |

+

<details>

|

| 438 |

+

<summary> <b>Custom Prompt Support</b> </summary>

|

| 439 |

+

|

| 440 |

+

LightRAG now supports custom prompts for fine-grained control over the system's behavior. Here is how to use it:

|

| 441 |

+

|

| 442 |

+

```python

|

| 443 |

+

# 创建查询参数

|

| 444 |

+

query_param = QueryParam(

|

| 445 |

+

mode="hybrid", # 或其他模式:"local"、"global"、"hybrid"、"mix"和"naive"

|

| 446 |

+

)

|

| 447 |

+

|

| 448 |

+

# 示例1:使用默认系统提示

|

| 449 |

+

response_default = rag.query(

|

| 450 |

+

"可再生能源的主要好处是什么?",

|

| 451 |

+

param=query_param

|

| 452 |

+

)

|

| 453 |

+

print(response_default)

|

| 454 |

+

|

| 455 |

+

# 示例2:使用自定义提示

|

| 456 |

+

custom_prompt = """

|

| 457 |

+

您是环境科学领域的专家助手。请提供详细且结构化的答案,并附带示例。

|

| 458 |

+

---对话历史---

|

| 459 |

+

{history}

|

| 460 |

+

|

| 461 |

+

---知识库---

|

| 462 |

+

{context_data}

|

| 463 |

+

|

| 464 |

+

---Response Rules---

|

| 465 |

+

|

| 466 |

+

- Target format and length: {response_type}

|

| 467 |

+

"""

|

| 468 |

+

response_custom = rag.query(

|

| 469 |

+

"What are the primary benefits of renewable energy?",

|

| 470 |

+

param=query_param,

|

| 471 |

+

system_prompt=custom_prompt  # Pass the custom prompt

|

| 472 |

+

)

|

| 473 |

+

print(response_custom)

|

| 474 |

+

```

|

| 475 |

+

|

| 476 |

+

</details>
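
The placeholders `{history}`, `{context_data}`, and `{response_type}` in the custom prompt are filled in before the prompt reaches the LLM. Here is a minimal sketch of that substitution, assuming plain `str.format`-style templating. The `render_prompt` helper and the sample values are made up for illustration and are not LightRAG's API.

```python
CUSTOM_PROMPT = """You are an expert assistant in environmental science.
---Conversation History---
{history}

---Knowledge Base---
{context_data}

---Response Rules---
- Target format and length: {response_type}
"""


def render_prompt(template: str, history: str, context_data: str, response_type: str) -> str:
    """Fill the three placeholders used by the custom prompt (illustrative helper)."""
    return template.format(
        history=history,
        context_data=context_data,
        response_type=response_type,
    )


rendered = render_prompt(
    CUSTOM_PROMPT,
    history="user: What is solar power?",
    context_data="Solar panels convert sunlight into electricity.",
    response_type="Multiple Paragraphs",
)
print(rendered)
```

Under that assumption, any literal braces in a custom prompt would need to be doubled (`{{` and `}}`) so they are not mistaken for placeholders.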

|

| 477 |

+

|

| 478 |

+

<details>

|

| 479 |

+

<summary> <b>Separate Keyword Extraction</b> </summary>

|

| 480 |

+

|

| 481 |

+

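We've introduced a new function, `query_with_separate_keyword_extraction`, to improve keyword extraction. It separates keyword extraction from the user's prompt and runs extraction on the query alone, which makes the extracted keywords more relevant.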

|

| 482 |

+

|

| 483 |

+

##### How It Works

|

| 484 |

+

|

| 485 |

+

The function splits the input into two parts:

|

| 486 |

+

|

| 487 |

+

- `User Query`

|

| 488 |

+

- `Prompt`

|

| 489 |

+

|

| 490 |

+

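It then performs keyword extraction on the `User Query` only. This separation keeps extraction focused and relevant, unaffected by any additional language in the `Prompt`. It also lets the `Prompt` serve purely for response formatting, preserving the intent and clarity of the user's original question.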

|

| 491 |

+

|

| 492 |

+

##### Usage Example

|

| 493 |

+

|

| 494 |

+

This example shows how to tailor the function for educational content, focusing on detailed explanations for older students.

|

| 495 |

+

|

| 496 |

+

```python

|

| 497 |

+

rag.query_with_separate_keyword_extraction(

|

| 498 |

+

query="Explain the law of gravity",

|

| 499 |

+

prompt="Provide a detailed explanation suitable for high school students studying physics.",

|

| 500 |

+

param=QueryParam(mode="hybrid")

|

| 501 |

+

)

|

| 502 |

+

```

|

| 503 |

+

|

| 504 |

+

</details>
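
To see why extracting keywords from the query alone helps, the sketch below compares the two approaches. `naive_keywords` is a deliberately simple stand-in (word splitting plus a tiny stop-word list) for the LLM-based extraction that LightRAG performs; it is an assumption made for illustration only.

```python
import re
from typing import List

# Tiny stop-word list, for the demo only.
STOPWORDS = {"a", "an", "the", "of", "for", "to", "and", "in"}


def naive_keywords(text: str) -> List[str]:
    """Return unique lowercase words in order of appearance, minus stop words."""
    keywords: List[str] = []
    for word in re.findall(r"[a-z]+", text.lower()):
        if word not in STOPWORDS and word not in keywords:
            keywords.append(word)
    return keywords


query = "Explain the law of gravity"
prompt = "Provide a detailed explanation suitable for high school students studying physics."

separate = naive_keywords(query)                # extraction sees the query only
combined = naive_keywords(f"{query} {prompt}")  # extraction also sees the prompt

print(separate)  # ['explain', 'law', 'gravity']
print(combined)  # also contains formatting words such as 'detailed' and 'students'
```

Words like `students` describe how the answer should be written, not what should be retrieved, so keeping them out of the keyword list keeps retrieval on topic.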

|

| 505 |

+

|

| 506 |

+

<details>

|

| 507 |

+

<summary> <b>Insert Custom KG</b> </summary>

|

| 508 |

+

|

| 509 |

+

```python

|

| 510 |

+

custom_kg = {

|

| 511 |

+

"chunks": [

|

| 512 |

+

{

|

| 513 |

+

"content": "Alice and Bob are collaborating on quantum computing research.",

|

| 514 |

+

"source_id": "doc-1"

|

| 515 |

+

}

|

| 516 |

+

],

|

| 517 |

+

"entities": [

|

| 518 |

+

{

|

| 519 |

+

"entity_name": "Alice",

|

| 520 |

+

"entity_type": "person",

|

| 521 |

+

"description": "Alice is a researcher who specializes in quantum physics.",

|

| 522 |

+

"source_id": "doc-1"

|

| 523 |

+

},

|

| 524 |

+

{

|

| 525 |

+

"entity_name": "Bob",

|

| 526 |

+

"entity_type": "person",

|

| 527 |

+

"description": "Bob is a mathematician.",

|

| 528 |

+

"source_id": "doc-1"

|

| 529 |

+

},

|

| 530 |

+

{

|

| 531 |

+

"entity_name": "量子计算",

|

| 532 |

+

"entity_type": "technology",

|

| 533 |

+

"description": "Quantum computing uses quantum-mechanical phenomena for computation.",

|

| 534 |

+

"source_id": "doc-1"

|

| 535 |

+

}

|

| 536 |

+

],

|

| 537 |

+

"relationships": [

|

| 538 |

+

{

|

| 539 |

+

"src_id": "Alice",

|

| 540 |

+

"tgt_id": "Bob",

|

| 541 |

+

"description": "Alice and Bob are research partners.",

|

| 542 |

+

"keywords": "collaboration research",

|

| 543 |

+

"weight": 1.0,

|

| 544 |

+

"source_id": "doc-1"

|

| 545 |

+

},

|

| 546 |

+

{

|

| 547 |

+

"src_id": "Alice",

|

| 548 |

+

"tgt_id": "量子计算",

|

| 549 |

+

"description": "Alice conducts research on quantum computing.",

|

| 550 |

+

"keywords": "research expertise",

|

| 551 |

+

"weight": 1.0,

|

| 552 |

+

"source_id": "doc-1"

|

| 553 |

+

},

|

| 554 |

+

{

|

| 555 |

+

"src_id": "Bob",

|

| 556 |

+

"tgt_id": "量子计算",

|

| 557 |

+

"description": "Bob researches quantum computing.",

|

| 558 |

+

"keywords": "research application",

|

| 559 |

+

"weight": 1.0,

|

| 560 |

+

"source_id": "doc-1"

|

| 561 |

+

}

|

| 562 |

+

]

|

| 563 |

+

}

|

| 564 |

+

|

| 565 |

+

rag.insert_custom_kg(custom_kg)

|

| 566 |

+

```

|

| 567 |

+

|

| 568 |

+

</details>
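
Because the `src_id`, `tgt_id`, and `source_id` fields refer to other items in the same dictionary, a quick consistency check before calling `insert_custom_kg` can catch typos. The sketch below is a hypothetical pre-flight helper, not something LightRAG provides, and LightRAG itself may not enforce these rules.

```python
from typing import Any, Dict, List


def check_custom_kg(kg: Dict[str, Any]) -> List[str]:
    """Return human-readable problems; an empty list means none were found."""
    problems: List[str] = []
    chunk_ids = {chunk["source_id"] for chunk in kg.get("chunks", [])}
    entity_names = {entity["entity_name"] for entity in kg.get("entities", [])}

    # Every entity should point at a chunk that exists.
    for entity in kg.get("entities", []):
        if entity["source_id"] not in chunk_ids:
            problems.append(f"entity {entity['entity_name']!r} cites unknown chunk {entity['source_id']!r}")

    # Every relationship should connect declared entities and cite a known chunk.
    for rel in kg.get("relationships", []):
        for endpoint in ("src_id", "tgt_id"):
            if rel[endpoint] not in entity_names:
                problems.append(f"relationship {endpoint} {rel[endpoint]!r} is not a declared entity")
        if rel["source_id"] not in chunk_ids:
            problems.append(f"relationship cites unknown chunk {rel['source_id']!r}")
    return problems


sample_kg = {
    "chunks": [{"content": "Alice and Bob work together.", "source_id": "doc-1"}],
    "entities": [
        {"entity_name": "Alice", "entity_type": "person", "description": "Researcher", "source_id": "doc-1"},
        {"entity_name": "Bob", "entity_type": "person", "description": "Mathematician", "source_id": "doc-1"},
    ],
    "relationships": [
        {"src_id": "Alice", "tgt_id": "Bob", "description": "Partners",
         "keywords": "collaboration", "weight": 1.0, "source_id": "doc-1"},
    ],
}
print(check_custom_kg(sample_kg))  # []
```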

|

| 569 |

+

|

| 570 |

+

## Insert

|

| 571 |

+

|

| 572 |

+

#### Basic Insert

|

| 573 |

+

|

| 574 |

+

```python

|

| 575 |

+

# Basic insert

|

| 576 |

+

rag.insert("Text")

|

| 577 |

+

```

|

| 578 |

+

|

| 579 |

+

<details>

|

| 580 |

+

<summary> <b> Batch Insert </b></summary>

|

| 581 |

+

|

| 582 |

+

```python

|

| 583 |

+

# Basic batch insert: insert multiple texts at once

|

| 584 |

+

rag.insert(["TEXT1", "TEXT2", ...])

|

| 585 |

+

|

| 586 |

+

# Batch insert with a custom batch size

|

| 587 |

+

rag = LightRAG(

|

| 588 |

+

working_dir=WORKING_DIR,

|

| 589 |

+

addon_params={

|

| 590 |

+

"insert_batch_size": 4  # Process 4 documents per batch

|

| 591 |

+

}

|

| 592 |

+

)

|

| 593 |

+

|

| 594 |

+

rag.insert(["TEXT1", "TEXT2", "TEXT3", ...])  # Documents are processed in batches of 4

|

| 595 |

+

```

|

| 596 |

+

|

| 597 |

+

The `insert_batch_size` parameter in `addon_params` controls how many documents are processed per batch during insertion. This is useful for:

|

| 598 |

+

|

| 599 |

+

- Managing memory usage with large document collections

|

| 600 |

+

- Optimizing processing speed

|

| 601 |

+

- Providing better progress tracking

|

| 602 |

+

- If not specified, the default value is 10

|

| 603 |

+

|

| 604 |

+

</details>
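
The effect of `insert_batch_size` can be pictured as slicing the document list into fixed-size groups. The sketch below shows that slicing on its own; `batched` is a hypothetical helper for illustration and does not reproduce LightRAG's internal batching code.

```python
from typing import Iterator, List, Sequence


def batched(items: Sequence[str], batch_size: int) -> Iterator[List[str]]:
    """Yield consecutive groups of at most `batch_size` items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield list(items[start:start + batch_size])


docs = [f"TEXT{i}" for i in range(1, 11)]  # ten placeholder documents
batches = list(batched(docs, 4))
print([len(batch) for batch in batches])  # [4, 4, 2]
```

With ten documents and a batch size of 4, the last batch is smaller than the others, which is expected.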

|

| 605 |

+

|

| 606 |

+

<details>

|

| 607 |

+

<summary> <b> Insert with ID </b></summary>

|

| 608 |

+

|

| 609 |

+

If you want to provide your own IDs for your documents, the number of documents and the number of IDs must be the same.

|

| 610 |

+

|

| 611 |

+

```python

|

| 612 |

+

# Insert a single text and provide an ID for it

|

| 613 |

+

rag.insert("TEXT1", ids=["ID_FOR_TEXT1"])

|

| 614 |

+

|

| 615 |

+

# Insert multiple texts and provide IDs for them

|

| 616 |

+

rag.insert(["TEXT1", "TEXT2", ...], ids=["ID_FOR_TEXT1", "ID_FOR_TEXT2"])

|

| 617 |

+

```

|

| 618 |

+

|

| 619 |

+

</details>
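
Since the document count and the ID count must match, it can help to validate the pairing before calling `insert`. The helper below is a hypothetical sketch, not part of LightRAG; the uniqueness check in particular is an extra assumption of this example.

```python
from typing import Dict, List, Union


def pair_documents_with_ids(documents: Union[str, List[str]], ids: List[str]) -> Dict[str, str]:
    """Return an id -> document mapping, raising if the inputs do not line up."""
    if isinstance(documents, str):
        documents = [documents]  # a single text is treated as a one-item list
    if len(documents) != len(ids):
        raise ValueError(f"got {len(documents)} documents but {len(ids)} ids")
    if len(set(ids)) != len(ids):
        raise ValueError("ids must be unique")  # assumption made for this sketch
    return dict(zip(ids, documents))


pairs = pair_documents_with_ids(["TEXT1", "TEXT2"], ["ID_FOR_TEXT1", "ID_FOR_TEXT2"])
print(pairs)
```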

|

| 620 |

+

|

| 621 |

+

<details>

|

| 622 |

+

<summary><b>Insert Using Pipeline</b></summary>

|

| 623 |

+

|

| 624 |

+

The `apipeline_enqueue_documents` and `apipeline_process_enqueue_documents` functions let you insert documents into the graph incrementally.

|

| 625 |

+

|

| 626 |

+

This is useful when documents need to be processed in the background while the main thread continues executing.

|

| 627 |

+

|

| 628 |

+

You can then process newly enqueued documents from a routine of your own.

|

| 629 |

+

|

| 630 |

+

```python

|

| 631 |

+

rag = LightRAG(...)

|

| 632 |

+

|

| 633 |

+

await rag.apipeline_enqueue_documents(input)

|

| 634 |

+

# Your routine, run in a loop

|

| 635 |

+

await rag.apipeline_process_enqueue_documents()

|

| 636 |

+

```

|

| 637 |

+

|

| 638 |

+

</details>
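
The enqueue-then-process pattern can be sketched with a plain `asyncio.Queue`. The `DocumentPipeline` class below is a toy stand-in written for this example: its names and behavior are assumptions, and uppercasing a string stands in for the real graph-building work.

```python
import asyncio
from typing import List


class DocumentPipeline:
    """Toy pipeline: documents are queued first and processed later."""

    def __init__(self) -> None:
        self._queue: "asyncio.Queue[str]" = asyncio.Queue()
        self.processed: List[str] = []

    async def enqueue(self, documents: List[str]) -> None:
        for document in documents:
            await self._queue.put(document)

    async def process_enqueued(self) -> int:
        """Drain whatever is currently queued and return how many items were handled."""
        count = 0
        while not self._queue.empty():
            document = self._queue.get_nowait()
            await asyncio.sleep(0)  # yield control, as real I/O-bound work would
            self.processed.append(document.upper())  # placeholder for real processing
            count += 1
        return count


async def main():
    pipeline = DocumentPipeline()
    await pipeline.enqueue(["doc one", "doc two"])
    first = await pipeline.process_enqueued()
    await pipeline.enqueue(["doc three"])  # new documents arrive later
    second = await pipeline.process_enqueued()
    return pipeline.processed, first, second


processed, first, second = asyncio.run(main())
print(processed, first, second)
```

Separating the two steps means callers can keep adding documents cheaply while a periodic routine does the expensive processing.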

|

| 639 |

+

|

| 640 |

+

<details>

|

| 641 |

+

<summary><b>Insert Multi-file Type Support</b></summary>

|

| 642 |

+

|

| 643 |

+

`textract` supports reading file types such as TXT, DOCX, PPTX, CSV, and PDF.

|

| 644 |

+

|

| 645 |

+

```python

|

| 646 |

+

import textract

|

| 647 |

+

|

| 648 |

+

file_path = 'TEXT.pdf'

|

| 649 |

+

text_content = textract.process(file_path)

|

| 650 |

+

|

| 651 |

+

rag.insert(text_content.decode('utf-8'))

|

| 652 |

+

```

|

| 653 |

+

|

| 654 |

+

</details>

|

| 655 |

+

|

| 656 |

+

<details>

|

| 657 |

+

<summary><b>Citation Functionality</b></summary>

|

| 658 |

+

|

| 659 |

+

By providing file paths, the system ensures that sources can be traced back to their original documents.

|

| 660 |

+

|

| 661 |

+

```python

|

| 662 |

+

# Define documents and their file paths

|

| 663 |

+

documents = ["Document content 1", "Document content 2"]

|

| 664 |

+

file_paths = ["path/to/doc1.txt", "path/to/doc2.txt"]

|

| 665 |

+

|

| 666 |

+

# Insert documents with file paths

|

| 667 |

+

rag.insert(documents, file_paths=file_paths)

|

| 668 |

+

```

|

| 669 |

+

|

| 670 |

+

</details>

|

| 671 |

+

|

| 672 |

+

## Storage

|

| 673 |

+

|

| 674 |

+

<details>

|

| 675 |

+

<summary> <b>Using Neo4J for Storage</b> </summary>

|

| 676 |

+

|

| 677 |

+

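* For production-level scenarios, you will most likely want to use an enterprise solution for KG storage.

|

| 678 |

+

* Running Neo4J in Docker is recommended for seamless local testing.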

|

| 679 |

+

* See: https://hub.docker.com/_/neo4j

|

| 680 |

+

|

| 681 |

+

```python

|

| 682 |

+

# Run in your shell first: export NEO4J_URI="neo4j://localhost:7687"

|

| 683 |

+

# Run in your shell first: export NEO4J_USERNAME="neo4j"

|

| 684 |

+

# Run in your shell first: export NEO4J_PASSWORD="password"

|

| 685 |

+

|

| 686 |

+

# Set up the logger for LightRAG

|

| 687 |

+

setup_logger("lightrag", level="INFO")

|

| 688 |

+

|

| 689 |

+

# When you launch the project, override the default KG (NetworkX) by specifying graph_storage="Neo4JStorage".

|

| 690 |

+

|

| 691 |

+

# Note: the default settings use NetworkX

|

| 692 |

+

# Initialize LightRAG with the Neo4J implementation.

|

| 693 |

+

async def initialize_rag():

|

| 694 |

+

    rag = LightRAG(

|

| 695 |

+

        working_dir=WORKING_DIR,

|

| 696 |

+

        llm_model_func=gpt_4o_mini_complete,  # Use the gpt_4o_mini_complete LLM model

|

| 697 |

+

        graph_storage="Neo4JStorage",  # <----------- Override the KG default

|

| 698 |

+

    )

|

| 699 |

+

|

| 700 |

+

    # Initialize database connections

|

| 701 |

+

    await rag.initialize_storages()

|

| 702 |

+

    # Initialize pipeline status for document processing

|

| 703 |

+

    await initialize_pipeline_status()

|

| 704 |

+

|

| 705 |

+

    return rag

|

| 706 |

+

```

|

| 707 |

+

|

| 708 |

+

See test_neo4j.py for a working example.

|

| 709 |

+

|

| 710 |

+

</details>
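
Since the Neo4J connection details above come from environment variables, a small pre-flight check can fail early with a clear message when one is missing. `read_neo4j_settings` is a hypothetical helper for this sketch, and it assumes the three variable names shown above are the ones your setup relies on.

```python
import os
from typing import Dict, Mapping, Optional

REQUIRED_VARS = ("NEO4J_URI", "NEO4J_USERNAME", "NEO4J_PASSWORD")


def read_neo4j_settings(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Collect the Neo4J settings, raising if any required variable is unset or empty."""
    source = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not source.get(name)]
    if missing:
        raise RuntimeError("Missing Neo4J settings: " + ", ".join(missing))
    return {name: source[name] for name in REQUIRED_VARS}


# A plain dict stands in for os.environ so the example runs anywhere.
settings = read_neo4j_settings({
    "NEO4J_URI": "neo4j://localhost:7687",
    "NEO4J_USERNAME": "neo4j",
    "NEO4J_PASSWORD": "password",
})
print(settings["NEO4J_URI"])
```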

|

| 711 |

+