🐱 Sana Model Card

Demos

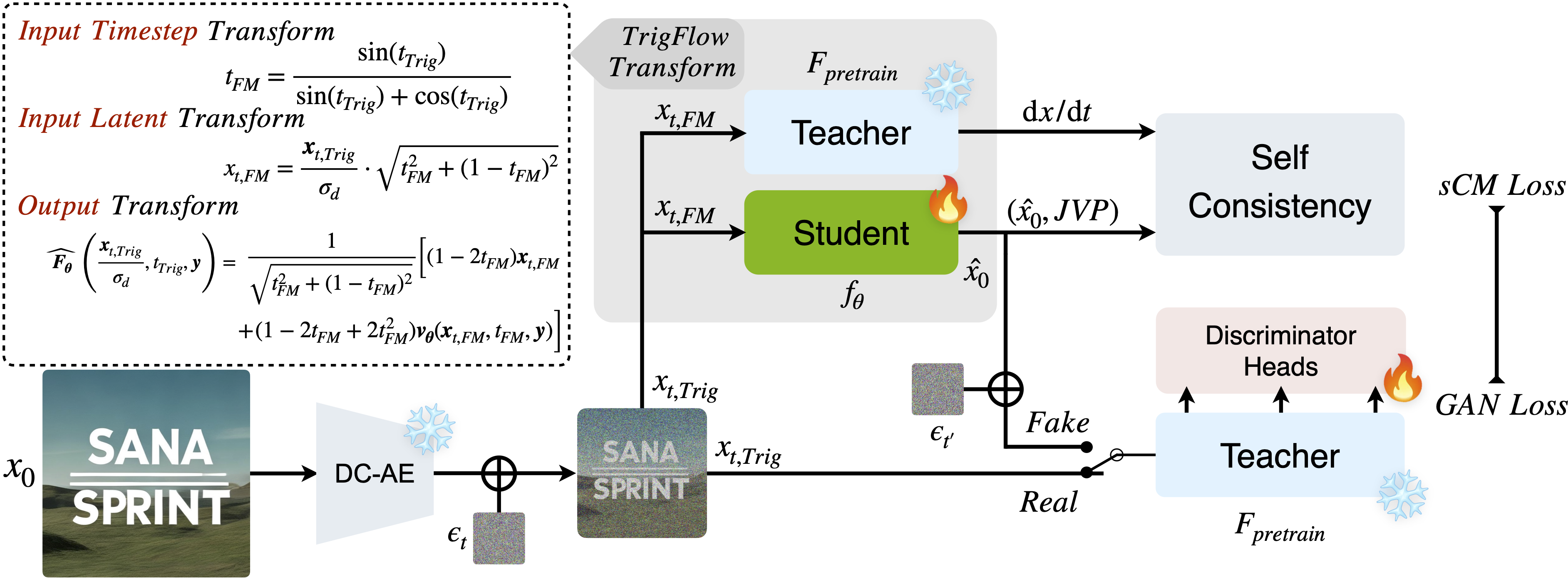

Training Pipeline

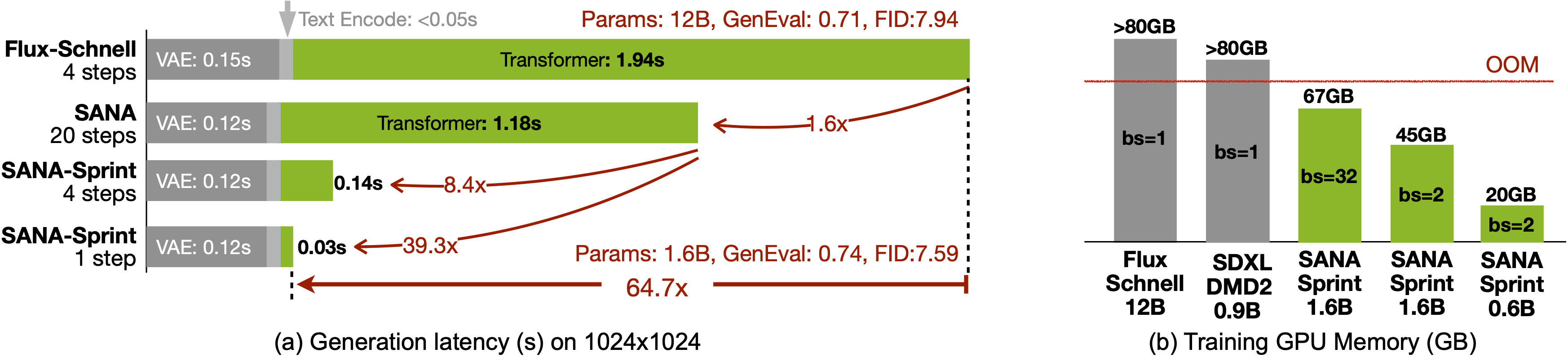

Model Efficiency

SANA-Sprint is an ultra-efficient diffusion model for text-to-image (T2I) generation, reducing inference steps from 20 to 1-4 while achieving state-of-the-art performance. Key innovations include: (1) A training-free approach for continuous-time consistency distillation (sCM), eliminating costly retraining; (2) A unified step-adaptive model for high-quality generation in 1-4 steps; and (3) ControlNet integration for real-time interactive image generation. SANA-Sprint achieves 7.59 FID and 0.74 GenEval in just 1 step — outperforming FLUX-schnell (7.94 FID / 0.71 GenEval) while being 10× faster (0.1s vs 1.1s on H100). With latencies of 0.1s (T2I) and 0.25s (ControlNet) for 1024×1024 images on H100, and 0.31s (T2I) on an RTX 4090, SANA-Sprint is ideal for AI-powered consumer applications (AIPC).

Source code is available at https://github.com/NVlabs/Sana.

Model Description

- Developed by: NVIDIA, Sana

- Model type: One-Step Diffusion with Continuous-Time Consistency Distillation

- Model size: 1.6B parameters

- Model precision: torch.bfloat16 (BF16)

- Model resolution: This model is developed to generate 1024px based images with multi-scale heigh and width.

- License: NSCL v2-custom. Governing Terms: NVIDIA License. Additional Information: Gemma Terms of Use | Google AI for Developers for Gemma-2-2B-IT, Gemma Prohibited Use Policy | Google AI for Developers.

- Model Description: This is a model that can be used to generate and modify images based on text prompts. It is a Linear Diffusion Transformer that uses one fixed, pretrained text encoders (Gemma2-2B-IT) and one 32x spatial-compressed latent feature encoder (DC-AE).

- Resources for more information: Check out our GitHub Repository and the SANA-Sprint report on arXiv.

Model Sources

For research purposes, we recommend our generative-models Github repository (https://github.com/NVlabs/Sana), which is more suitable for both training and inference

MIT Han-Lab provides free SANA-Sprint inference.

- Repository: https://github.com/NVlabs/Sana

- Demo: https://nv-sana.mit.edu/sprint

- Guidance: https://github.com/NVlabs/Sana/asset/docs/sana_sprint.md

🧨 Diffusers

Under construction PR

from diffusers import SanaSprintPipeline

import torch

pipeline = SanaSprintPipeline.from_pretrained(

"Efficient-Large-Model/Sana_Sprint_1.6B_1024px_diffusers",

torch_dtype=torch.bfloat16

)

pipeline.to("cuda:0")

prompt = "a tiny astronaut hatching from an egg on the moon"

image = pipeline(prompt=prompt, num_inference_steps=2).images[0]

image.save("sana_sprint.png")

Uses

Direct Use

The model is intended for research purposes only. Possible research areas and tasks include

Generation of artworks and use in design and other artistic processes.

Applications in educational or creative tools.

Research on generative models.

Safe deployment of models which have the potential to generate harmful content.

Probing and understanding the limitations and biases of generative models.

Excluded uses are described below.

Out-of-Scope Use

The model was not trained to be factual or true representations of people or events, and therefore using the model to generate such content is out-of-scope for the abilities of this model.

Limitations and Bias

Limitations

- The model does not achieve perfect photorealism

- The model cannot render complex legible text

- fingers, .etc in general may not be generated properly.

- The autoencoding part of the model is lossy.

Bias

While the capabilities of image generation models are impressive, they can also reinforce or exacerbate social biases.

Model tree for Efficient-Large-Model/Sana_Sprint_1.6B_1024px_diffusers

Unable to build the model tree, the base model loops to the model itself. Learn more.