id

stringlengths 36

36

| source

stringclasses 15

values | formatted_source

stringclasses 13

values | text

stringlengths 2

7.55M

|

|---|---|---|---|

5d244ae6-f400-4d0a-aec8-8de91dd43281

|

trentmkelly/LessWrong-43k

|

LessWrong

|

Rational Terrorism or Why shouldn't we burn down tobacco fields?

Related: Taking ideas seriously

Let us say hypothetically you care about stopping people smoking.

You were going to donate $1000 dollars to givewell to save a life, instead you learn about an anti-tobacco campaign that is better. So you chose to donate $1000 dollars to a campaign to stop people smoking instead of donating it to a givewell charity to save an African's life. You justify this by expecting more people to live due to having stopped smoking (this probably isn't true, but for the sake of argument)

The consequences of donating to the anti-smoking campaign is that 1 person dies in africa and 20 live that would have died instead live all over the world.

Now you also have the choice of setting fire to many tobacco plantations, you estimate that the increased cost of cigarettes would save 20 lives but it will kill likely 1 guard worker. You are very intelligent so you think you can get away with it. There are no consequences to this action. You don't care much about the scorched earth or loss of profits.

If there are causes with payoff matrices like this, then it seems like a real world instance of the trolley problem. We are willing to cause loss of life due to inaction to achieve our goals but not cause loss of life due to action.

What should you do?

Killing someone is generally wrong but you are causing the death of someone in both cases. You either need to justify that leaving someone to die is ethically not the same as killing someone, or inure yourself that when you chose to spend $1000 dollars in a way that doesn't save a life, you are killing. Or ignore the whole thing.

This just puts me off being utilitarian to be honest.

Edit: To clarify, I am an easy going person, I don't like making life and death decisions. I would rather live and laugh, without worrying about things too much.

This confluence of ideas made me realise that we are making life and death decisions every time we spend $1000 dollars. I'm not sure where I will go from here.

|

a8025cc1-62b6-4872-8fe5-eb1d12b07166

|

trentmkelly/LessWrong-43k

|

LessWrong

|

STRUCTURE: A Crash Course in Your Brain

This post is part of my Hazardous Guide To Rationality. I don't expect this to be new or exciting to frequent LW people, and I would super appreciate comments and feedback in light of intents for the sequence, as outlined in the above link. Also, note this is a STRUCTURE post, again see the above link for what that means.

Intro

Talking about truth and reality can be hard. First, we're going to take a stroll through what we currently know about how the human mind works, and what the implications are for one's ability to be right.

Outline of main ideas. Could be post per main bullet.

* The Unconscious exists

* There is "more happening" in your brain than you are consciously aware of

* S1 / S2 introduction (research if I actually recommend Thinking Fast and Slow as the best intro)

* Confabulation is a thing

* You have an entire sub-module in your brain which is specialized for making up reasons for why you do things. Because of this, even if you ask yourself, "Why did I just tip over that vase?" and get a ready answer, it is hard to figure out if that is a true reason for your behavior.

* By default, thoughts feel like facts.

* The lower-level a thought produced by your brain, the less it feels like, 'A thing I think which could be true of false" and the more it feels like, "The way the world obviously is, duh."

* Your intuitions do not have special magical access to the truth. They are sometimes wrong, and sometimes right. But unless you pay attention, you are likely to by default, believe them to be compleely correct.

* We are Predictably Wrong

* You don't automatically know what you actual beliefs are.

* You also have the ability to say "I believe XYZ" while having no meaningful/consequential relations of XYZ to the rest of your world model. You can also not notice that this is the case.

* Luckily, you do still have some non-zero ability to have anticipation/expectations about reality, and have world models/beliefs.

* When b

|

4401cd0c-00fd-47a9-8df0-2624e2bc6c18

|

trentmkelly/LessWrong-43k

|

LessWrong

|

The national security dimension of OpenAI's leadership struggle

As the very public custody battle over OpenAI's artificial intelligences winds down, I would like to point out a few facts, and then comment briefly on their possible significance.

It has already been noticed that "at least two of the board members, Tasha McCauley and Helen Toner, have ties to the Effective Altruism movement", as the New York Times puts it. Both these board members also have national security ties.

With Helen Toner it's more straightforward; she has a master's degree in Security Studies at Georgetown University, "the most CIA-specific degree" at a university known as a gateway to deep state institutions. That doesn't mean she's in the CIA; but she's in that milieu.

As for Tasha McCauley, it's more oblique: her actor husband played Edward Snowden, the famous NSA defector, in the 2016 biographical film. Hollywood's relationship to the American intelligence community is, I think, a little more vexed than Georgetown's. McCauley and her husband might well be pro-Snowden civil libertarians who want deep state power curtailed; that would make them the "anti national security" faction on the OpenAI board, so to speak.

Either way, this means that the EAs on the board, who both presumably voted to oust Sam Altman as CEO, are adjacent to the US intelligence community, and/or its critics, many of whom are intelligence veterans anyway.

((ADDED A WEEK LATER: It has been pointed out to me that even if Joseph Gordon-Levitt did meet a few ex-spooks on the set of Snowden, that doesn't in itself equate to his wife having "national security ties". Obviously that's true; I just assumed that the connections run a lot deeper, and that may sound weird if you're used to thinking of the intelligence community and the culture industry as entirely separate. In any case, I accept the criticism that this is pure speculation on my part, and that I should have made my larger points in some other way.))

Those are my facts. Now for my interpretation.

Artificial intellig

|

e9b8ee30-de61-4c30-88aa-846896a07162

|

StampyAI/alignment-research-dataset/alignmentforum

|

Alignment Forum

|

The Pragmascope Idea

Pragma (Greek): thing, object.

A “pragmascope”, then, would be some kind of measurement or visualization device which shows the “things” or “objects” present.

I currently see the pragmascope as *the* major practical objective of [work on natural abstractions](https://www.lesswrong.com/posts/cy3BhHrGinZCp3LXE/testing-the-natural-abstraction-hypothesis-project-intro). As I see it, the core theory of natural abstractions is now 80% nailed down, I’m now working to get it across the theory-practice gap, and the pragmascope is the big milestone on the other side of that gap.

This post introduces the idea of the pragmascope and what it would look like.

Background: A Measurement Device Requires An Empirical Invariant

----------------------------------------------------------------

First, an aside on developing new measurement devices.

### Why The Thermometer?

What makes a thermometer a good measurement device? Why is “temperature”, as measured by a thermometer, such a useful quantity?

Well, at the most fundamental level… we stick a thermometer in two different things. Then, we put those two things in contact. Whichever one showed a higher “temperature” reading on the thermometer gets colder, whichever one showed a lower “temperature” reading on the thermometer gets hotter, all else equal (i.e. controlling for heat exchanged with other things in the environment). And this is robustly true across a huge range of different things we can stick a thermometer into.

It didn’t have to be that way! We could imagine a world (with very different physics) where, for instance, heat always flows from red objects to blue objects, from blue objects to green objects, and from green objects to red objects. But we don’t see that in practice. Instead, we see that each system can be assigned a single number (“temperature”), and then when we put two things in contact, the higher-number thing gets cooler and the lower-number thing gets hotter, regardless of which two things we picked.

Underlying the usefulness of the thermometer is an *empirical* fact, an *invariant*: the fact that which-thing-gets-hotter and which-thing-gets-colder when putting two things into contact can be predicted from a single one-dimensional real number associated with each system (i.e. “temperature”), for an extremely wide range of real-world things.

Generalizing: a useful measurement device starts with identifying some empirical invariant. There needs to be a wide variety of systems which interact in a predictable way across many contexts, *if* we know some particular information about each system. In the case of the thermometer, a wide variety of systems get hotter/colder when in contact, in a predictable way across many contexts, *if* we know the temperature of each system.

So what would be an analogous empirical invariant for a pragmascope?

### The Role Of The Natural Abstraction Hypothesis

The [natural abstraction hypothesis](https://www.lesswrong.com/posts/cy3BhHrGinZCp3LXE/testing-the-natural-abstraction-hypothesis-project-intro) has three components:

1. Chunks of the world generally interact with far-away chunks of the world via relatively-low-dimensional summaries

2. A broad class of cognitive architectures converge to use subsets of these summaries (i.e. they’re instrumentally convergent)

3. These summaries match human-recognizable “things” or “concepts”

For purposes of the pragmascope, we’re particularly interested in claim 2: a broad class of cognitive architectures converge to use subsets of the summaries. If true, that sure sounds like an empirical invariant!

So what would a corresponding measurement device look like?

What would a pragmascope look like, concretely?

-----------------------------------------------

The “measurement device” (probably a python function, in practice) should take in some cognitive system (e.g. a trained neural network) and maybe its environment (e.g. simulator/data), and spit out some data structure representing the natural “summaries” in the system/environment. Then, we should easily be able to take some *other* cognitive system trained on the same environment, extract the natural “summaries” from that, and compare. Based on the natural abstraction hypothesis, we expect to observe things like:

* A broad class of cognitive architectures trained on the same data/environment end up with subsets of the same summaries.

* Two systems with the same summaries are able to accurately predict the same things on new data from the same environment/distribution.

* On inspection, the summaries correspond to human-recognizable “things” or “concepts”.

* A system is able to accurately predict things involving the same human-recognizable concepts the pragmascope says it has learned, and cannot accurately predict things involving human-recognizable concepts the pragmascope says it has not learned.

It’s these empirical observations which, if true, will underpin the usefulness of the pragmascope. The more precisely and robustly these sorts of properties hold, the more useful the pragmascope. Ideally we’d even be able to *prove* some of them.

### What’s The Output Data Structure?

One obvious currently-underspecified piece of the picture: what data structures will the pragmascope output, to represent the “summaries”? I have some [current-best-guesses based on the math](https://www.lesswrong.com/posts/cqdDGuTs2NamtEhBW/maxent-and-abstractions-current-best-arguments), but the main answer at this point is “I don’t know yet”. I expect looking at the internals of trained neural networks will give lots of feedback about what the natural data structures are.

Probably the earliest empirical work will just punt on standard data structures, and instead focus on translating internal-concept-representations in one net into corresponding internal-concept-representations in another. For instance, here’s one experiment I recently proposed:

* Train two nets, with different architectures (both capable of achieving zero training loss and good performance on the test set), on the same data.

* Compute the small change in data dx which would induce a small change in trained parameter values d\theta along each of the narrowest directions of the ridge in the loss landscape (i.e. eigenvectors of the Hessian with largest eigenvalue).

* Then, compute the small change in parameter values d\theta in the *second* net which would result from the same small change in data dx.

* Prediction: the d\theta directions computed will approximately match the narrowest directions of the ridge in the loss landscape of the second net.

Conceptually, this sort of experiment is intended to take all the stuff one network learned, and compare it to all the stuff the other network learned. It wouldn’t yield a full pragmascope, because it wouldn’t say anything about how to factor all the stuff a network learns into individual concepts, but it would give a very well-grounded starting point for translating stuff-in-one-net into stuff-in-another-net (to first/second-order approximation).

|

884969a4-0c62-4fc3-833e-c8100825d25d

|

trentmkelly/LessWrong-43k

|

LessWrong

|

You are Underestimating The Likelihood That Convergent Instrumental Subgoals Lead to Aligned AGI

This post is an argument for the Future Fund's "AI Worldview" prize. Namely, I claim that the estimates given for the following probability are too high:

> P(misalignment x-risk|AGI)”: Conditional on AGI being developed by 2070, humanity will go extinct or drastically curtail its future potential due to loss of control of AGI

The probability given here is 15%. I believe 5% is a more realistic estimate here.

I believe that, if convergent instrumental subgoals don't imply alignment, that the original odds given are probably too low. I simply don't believe that the alignment problem is solvable. Therefore, I believe our only real shot at surviving the existence of AGI is if the AGI finds it better to keep us around, based upon either us providing utility or lowering risk to the AGI.

Fortunately, I think the odds that an AGI will find it a better choice to keep us around are higher than the ~5:1 odds given.

I believe keeping humans around, and supporting their wellbeing, both lowers risk and advances instrumental subgoals for the AGI for the following reasons:

* hardware sucks, machines break all the time, and the current global supply chain necessary for maintaining operational hardware would not be cheap or easy to replace without taking on substantial risk

* perfectly predicting the future in chaotic system is impossible beyond some time horizon, which means there are no paths for the AGI that guarantee its survival; keeping alive a form of intelligence with very different risk profiles might be a fine hedge against failure

My experience working on supporting Google's datacenter hardware left me with a strong impression that for large numbers of people, the fact that hardware breaks down and dies, often, requiring a constant stream of repairs, is invisible. Likewise, I think a lot of adults take the existence of functioning global supply chains for all manner of electronic and computing hardware as givens. I find that most adults, even most adult

|

5d488a63-5d33-4219-95df-e83d1698ed8f

|

trentmkelly/LessWrong-43k

|

LessWrong

|

Reputation bets

People don’t often put their money where their mouth is, but they do put their reputation where their mouth is all the time. If I say ‘The Strategy of Conflict is pretty good’ I am betting some reputation on you liking it if you look at it. If you do like it, you will think better of me, and if you don’t, you will think worse. Even if I just say ‘it’s raining’, I’m staking my reputation on this. If it isn’t raining, you will think there is something wrong with me. If it is raining, you will decrease your estimate of how many things are wrong with me the teensiest bit.

If we have reputation bets all the time, why would it be so great to have more money bets?

Because reputation bets are on a limited class of propositions. They are all of the form ’doing X will make me look good’. This is pretty close to betting that an observer will endorse X. Such bets are most useful for statements that are naturally about what the observer will endorse. For instance (a) ’you would enjoy this blog’ is pretty close to (b) ‘you will endorse the claim that you would enjoy this blog’. It isn’t quite the same – for instance, if the listener refuses to look at the blog, but judges by its title that it is a silly blog, then (a) might be true while (b) is false. But still, if I want to bet on (a), betting on (b) is a decent proxy.

Reputation bets are also fairly useful for statements where the observer will mostly endorse true statements, such as ‘there is ice cream in the freezer’. Reputation bets are much less useful (for judging truth) where the observer is as likely to be biased and ignorant as the person making the statement. For instance, ‘removing height restrictions on buildings would increase average quality of life in our city’. People still do make reputation bets in these cases, but they are betting on their judgment of the other person’s views.

If the set of things where people mostly endorse true answers is roughly the set where it is pretty clear what the true answer is,

|

c805136b-ab96-44b1-adf5-edb21e2bbfb0

|

trentmkelly/LessWrong-43k

|

LessWrong

|

Are extrapolation-based AIs alignable?

(This is an account of my checking a certain alignment idea and finding that it doesn't work. Also my thinking is pretty naive and could easily be wrong.)

When thinking about AIs that are trained on some dataset and learn to extrapolate it, like the current crop of LLMs, I asked myself: can such an AI be aligned purely by choosing an appropriate dataset to train on? In other words, does there exist any dataset such that generating extrapolations from it leads to good outcomes, even in the hands of bad actors? If we had such a dataset, we'd have an aligned AI.

But unfortunately it seems hard. For example if the dataset includes instructions to build a nuke, then a bad actor could just ask for that. Moreover, if there's any circumstance at all under which we want the AI to say "here's the instructions to build a nuke" (to help a good actor stop an incoming asteroid, say), then a bad actor could extrapolate from that phrase and get the same result.

It seems the problem is that extrapolation doesn't have situational awareness. If the AI is based on extrapolating a certain dataset, there's no way to encode in the dataset itself which parts of it can be said when. And putting a thin wrapper on top, like ChatGPT, doesn't seem to help much, because from what I've seen it's easy enough to bypass.

What is the hope for alignment, then? Can we build an AI with situational awareness from the ground up, not relying on an "extrapolation core" (because the core would itself be an unaligned AI that bad actors could use)? I don't know.

EDIT: the sequel to this post is Aligned AI as a wrapper around an LLM.

|

b96512fd-8295-47ab-9d95-f4c3b3856d8e

|

StampyAI/alignment-research-dataset/arbital

|

Arbital

|

Harmless supernova fallacy

Harmless supernova fallacies are a class of arguments, usually a subspecies of false dichotomy or continuum fallacy, which [can equally be used to argue](https://arbital.com/p/3tc) that almost any physically real phenomenon--including a supernova--is harmless / manageable / safe / unimportant.

- **Bounded, therefore harmless:** "A supernova isn't infinitely energetic--that would violate the laws of physics! Just wear a flame-retardant jumpsuit and you'll be fine." (All physical phenomena are finitely energetic; some are nonetheless energetic enough that a flame-retardant jumpsuit won't stop them. "Infinite or harmless" is a false dichotomy.)

- **Continuous, therefore harmless:** "Temperature is continuous; there's no qualitative threshold where something becomes 'super' hot. We just need better versions of our existing heat-resistant materials, which is a well-understood engineering problem." (Direct instance of the standard continuum fallacy: the existence of a continuum of states between two points does not mean they are not distinct. Some temperatures, though not qualitatively distinct from lower temperatures, exceed what we can handle using methods that work for lower temperatures. "Quantity has a quality all of its own.")

- **Varying, therefore harmless:** "A supernova wouldn't heat up all areas of the solar system to exactly the same temperature! It'll be hotter closer to the center, and cooler toward the outside. We just need to stay on a part of Earth that's further way from the Sun." (The temperatures near a supernova vary, but they are all quantitatively high enough to be far above the greatest manageable level; there is no nearby temperature low enough to form a survivable valley.)

- **Mundane, therefore harmless** or **straw superpower**: "Contrary to what many non-astronomers seem to believe, a supernova can't burn hot enough to sear through time itself, so we'll be fine." (False dichotomy: the divine ability is not required for the phenomenon to be dangerous / non-survivable.)

- **Precedented, therefore harmless:** "Really, we've already had supernovas around for a while: there are already devices that produce 'super' amounts of heat by fusing elements low in the periodic table, and they're called thermonuclear weapons. Society has proven well able to regulate existing thermonuclear weapons and prevent them from being acquired by terrorists; there's no reason the same shouldn't be true of supernovas." (Reference class tennis / noncentral fallacy / continuum fallacy: putting supernovas on a continuum with hydrogen bombs doesn't make them able to be handled by similar strategies, nor does finding a class such that it contains both supernovas and hydrogen bombs.)

- **Undefinable, therefore harmless:** "What is 'heat', really? Somebody from Greenland might think 295 Kelvin was 'warm', somebody from the equator might consider the same weather 'cool'. And when exactly does a 'sun shining' become a 'supernova'? This whole idea seems ill-defined." (Someone finding it difficult to make exacting definitions about a physical process doesn't license the conclusion that the physical process is harmless. %%note: It also happens that the distinction between the runaway process in a supernova and a sun shining is empirically sharp; but this is not why the argument is invalid--a super-hot ordinary fire can also be harmful even if we personally are having trouble defining "super" and "hot". %%)

|

adfe86f6-d5b5-44b2-bcf6-e9a39545aaa1

|

StampyAI/alignment-research-dataset/eaforum

|

Effective Altruism Forum

|

Technical AGI safety research outside AI

I think there are many questions whose answers would be useful for technical AGI safety research, but which will probably require expertise outside AI to answer. In this post I list 30 of them, divided into four categories. Feel free to get in touch if you’d like to discuss these questions and why I think they’re important in more detail. I personally think that making progress on the ones in the first category is particularly vital, and plausibly tractable for researchers from a wide range of academic backgrounds.

**Studying and understanding safety problems**

1. How strong are the economic or technological pressures towards building very general AI systems, as opposed to narrow ones? How plausible is the [CAIS model](https://www.fhi.ox.ac.uk/reframing/) of advanced AI capabilities arising from the combination of many narrow services?

2. What are the most compelling arguments for and against [discontinuous](https://intelligence.org/files/IEM.pdf) versus [continuous](https://sideways-view.com/2018/02/24/takeoff-speeds/) takeoffs? In particular, how should we think about the analogy from human evolution, and the scalability of intelligence with compute?

3. What are the tasks via which narrow AI is most likely to have a destabilising impact on society? What might cyber crime look like when many important jobs have been automated?

4. How plausible are safety concerns about [economic dominance by influence-seeking agents](https://www.alignmentforum.org/posts/HBxe6wdjxK239zajf/more-realistic-tales-of-doom), as well as [structural loss of control](https://www.lawfareblog.com/thinking-about-risks-ai-accidents-misuse-and-structure) scenarios? Can these be reformulated in terms of standard economic ideas, such as [principal-agent problems](http://www.overcomingbias.com/2019/04/agency-failure-ai-apocalypse.html) and the effects of automation?

5. How can we make the concepts of agency and goal-directed behaviour more specific and useful in the context of AI (e.g. building on Dennett’s work on the intentional stance)? How do they relate to intelligence and the [ability to generalise](https://www.cser.ac.uk/research/paradigms-AGI/) across widely different domains?

6. What are the strongest arguments that have been made about why advanced AI might pose an existential threat, stated as clearly as possible? How do the different claims relate to each other, and which inferences or assumptions are weakest?

**Solving safety problems**

1. What techniques used in studying animal brains and behaviour will be most helpful for analysing AI systems [and their behaviour](https://www.nature.com/articles/s41586-019-1138-y), particularly with the goal of rendering them interpretable?

2. What is the most important information about deployed AI that decision-makers will need to track, and how can we create interfaces which communicate this effectively, making it visible and salient?

3. What are the most effective ways to gather huge numbers of human judgments about potential AI behaviour, and how can we ensure that such data is high-quality?

4. How can we empirically test the [debate](https://openai.com/blog/ai-safety-needs-social-scientists/) and [factored cognition](https://ought.org/research/factored-cognition) hypotheses? How plausible are the [assumptions](https://ai-alignment.com/towards-formalizing-universality-409ab893a456) about the decomposability of cognitive work via language which underlie debate and [iterated distillation and amplification](https://ai-alignment.com/iterated-distillation-and-amplification-157debfd1616)?

5. How can we distinguish between AIs helping us better understand what we want and AIs changing what we want (both as individuals and as a civilisation)? How easy is the latter to do; and how easy is it for us to identify?

6. Various questions in decision theory, logical uncertainty and game theory relevant to [agent foundations](https://intelligence.org/files/TechnicalAgenda.pdf).

7. How can we create [secure](https://forum.effectivealtruism.org/posts/ZJiCfwTy5dC4CoxqA/information-security-careers-for-gcr-reduction) containment and supervision protocols to use on AI, which are also robust to external interference?

8. What are the best communication channels for conveying goals to AI agents? In particular, which ones are most likely to incentivise optimisation of the goal [specified through the channel](https://www.alignmentforum.org/s/SBfqYgHf2zvxyKDtB/p/5bd75cc58225bf06703754b3), rather than [modification of the communication channel itself](https://www.ijcai.org/proceedings/2017/0656.pdf)?

9. How closely linked is the human motivational system to our intellectual capabilities - to what extent does the [orthogonality thesis](https://www.fhi.ox.ac.uk/wp-content/uploads/Orthogonality_Analysis_and_Metaethics-1.pdf) apply to human-like brains? What can we learn from the range of variation in human motivational systems (e.g. induced by brain disorders)?

10. What were the features of the human ancestral environment and evolutionary “training process” that contributed the most to our empathy and altruism? What are the analogues of these in our current AI training setups, and how can we increase them?

11. What are the features of our current cultural environments that contribute the most to altruistic and cooperative behaviour, and how can we replicate these while training AI?

**Forecasting AI**

1. What are the most likely pathways to AGI and the milestones and timelines involved?

2. How do our best systems so far [compare to animals](http://animalaiolympics.com/) and humans, both in terms of performance and in terms of brain size? What do we know from animals about how cognitive abilities scale with brain size, learning time, environmental complexity, etc?

3. What are the economics and logistics of building microchips and datacenters? How will the availability of compute change under different demand scenarios?

4. In what ways is AI usefully [analogous or disanalogous](http://rationallyspeakingpodcast.org/show/rs-231-helen-toner-on-misconceptions-about-china-and-artific.html) to the industrial revolution; electricity; and nuclear weapons?

5. How will the progression of narrow AI shape public and government opinions and narratives towards it, and how will that influence the directions of AI research?

6. Which tasks will there be most economic pressure to automate, and how much money might realistically be involved? What are the biggest social or legal barriers to automation?

7. What are the most salient features of the history of AI, and how should they affect our understanding of the field today?

**Meta**

1. How can we best grow the field of AI safety? See [OpenPhil’s notes on the topic](https://www.openphilanthropy.org/blog/new-report-early-field-growth).

2. How can spread norms in favour of careful, robust testing and other safety measures in machine learning? What can we learn from other engineering disciplines with strict standards, such as aerospace engineering?

3. How can we create infrastructure to improve our ability to accurately predict future development of AI? What are the bottlenecks facing tools like [Foretold.io](https://forum.effectivealtruism.org/posts/5nCijr7A9MfZ48o6f/introducing-foretold-io-a-new-open-source-prediction) and [Metaculus](https://www.metaculus.com/questions/?show-welcome=true), and preventing effective prediction markets from existing?

4. How can we best increase communication and coordination within the AI safety community? What are the major constraints that safety faces on sharing information (in particular ones which other fields don’t face), and how can we overcome them?

5. What norms and institutions should the field of AI safety import from other disciplines? Are there predictable problems that we will face as a research community, or systemic biases which are making us overlook things?

6. What are the biggest disagreements between safety researchers? What’s the distribution of opinions, and what are the key cruxes?

Particular thanks to Beth Barnes and a discussion group at the CHAI retreat for helping me compile this list.

|

e248c3b4-fc70-452b-9921-4463b6d6b903

|

trentmkelly/LessWrong-43k

|

LessWrong

|

Meetup : DC Meetup

Discussion article for the meetup : DC Meetup

WHEN: 20 May 2012 03:00:00PM (-0400)

WHERE: National Portrait Gallery, Washington, DC 20001, USA

If the SIAI response to the Givewell critique has been posted, that will be the meetup topic.

Discussion article for the meetup : DC Meetup

|

809a3585-2386-46a6-a5f1-0c0b197146eb

|

StampyAI/alignment-research-dataset/eaforum

|

Effective Altruism Forum

|

Refer the Cooperative AI Foundation’s New COO, Receive $5000

**TL;DR: The** [**Cooperative AI Foundation**](https://www.cooperativeai.com/foundation) **(CAIF) is a new AI safety organisation and we're hiring for a** [**Chief Operating Officer**](https://www.cooperativeai.com/job-listing/chief-operating-officer) **(COO). You can suggest people that you think might be a good fit for the role using** [**this form**](https://docs.google.com/forms/d/e/1FAIpQLSd6OZ2Xlp3n2OLwCuH018dt2VIROxz2WogMRRXWC5MFAK0jRg/viewform?usp=sf_link)**. If you're the first to suggest the person we eventually hire, we'll send you $5000.**

*This post was inspired by conversations with Richard Parr and Cate Hall (though I didn't consult them about the post, and they may not endorse it). Thanks to Anne le Roux and Jesse Clifton for reading a previous draft. Any mistakes are my own.*

Background

==========

The [Cooperative AI Foundation](https://www.cooperativeai.com/foundation) (CAIF, pronounced “safe”) is a new organisation supporting research on the cooperative intelligence of advanced AI systems. We believe that many of the most important problems facing humanity are problems of cooperation, and that AI will be increasingly important when it comes to solving (or exacerbating) such problems. In short, **we’re an A(G)I safety research foundation seeking to build the nascent field of** [**Cooperative AI**](https://www.nature.com/articles/d41586-021-01170-0).

CAIF is supported by an initial endowment of $15 million and [some of the leading thinkers in AI safety and AI governance](https://www.cooperativeai.com/foundation#Trustees), but is currently lacking operational capacity. We’re expanding our team and **our top priority is to hire a** [**Chief Operating Officer**](https://www.cooperativeai.com/job-listing/chief-operating-officer) – a role that will be critical for the scaling and smooth running of the foundation, both now and in the years to come. We believe that this marks an exciting opportunity to have a particularly large impact on the growth of CAIF, the field, and thus on the benefits to humanity that it prioritises.

How You Can Help

================

Do you know someone who might be a good fit for this role? Submit their name (and yours) via [this form](https://docs.google.com/forms/d/e/1FAIpQLSd6OZ2Xlp3n2OLwCuH018dt2VIROxz2WogMRRXWC5MFAK0jRg/viewform?usp=sf_link). CAIF will reach out to the people we think are promising, and if you were the first to suggest the person we eventually hire, we'll send you a **referral bonus of $5000**. The details required from a referral can be found by looking at the form, with the following conditions:

* Referrals must be made by **3 July 2022 23:59 UTC**

* You can't refer yourself (though if you're interested in the role, please [apply](https://2ydtwkl8tw5.typeform.com/to/ERVfu3Hk)!)

* Please **don't** directly post names or personal details in the comments below

* We'll only send you the bonus if the person you suggest (and we hire) isn't someone we'd already considered

* The person you refer doesn't need to already be part of the EA community[[1]](#fn5di05uzqpgy) or be knowledgable about AI safety

* If you've already suggested a name to us (i.e., before this was posted), we'll still send you the bonus

* If you have any questions about the referral scheme, please comment below

Finally, we're also looking for new ways of advertising the role.[[2]](#fnlarl08icxo) If you have suggestions, please post them in the comments below. If we use your suggested method (and we weren't already planning to), we'll send you a **smaller bonus of $250**. Feel free to list all your suggestions in a single comment – we'll send you a bonus for each one that we use.

Why We Posted This

==================

Arguably, the most critical factor in how successful an organisation is (given sufficient funding, at least) is the quality of the people working there. This is especially true for us as a new, small organisation with ambitious plans for growth, and for a role as important as the COO. Because of this, **we are strongly prioritising hiring an excellent person for this role**.

The problem is that finding excellent people is hard (especially for operations roles). Many excellent people that might consider moving to this role are not actively looking for one, and may not already be in our immediate network. This means that **referrals are critical for finding the best person for the job**, hence this post.

$5000 may seem like a lot for simply sending us someone's name, but is a small price to pay in terms of increasing CAIF's impact. Moreover, it's also **well****below****what a recruitment agency would usually charge** for a successful referral to a role like this – and using these agencies is often worth it! Finally, though there is some risk of the referral scheme being exploited, we believe that the upsides outweigh these risks substantially. We suggest that other EA organisations might want to adopt similar schemes in the future.

1. **[^](#fnref5di05uzqpgy)**I owe my appreciation of this point to Cate.

2. **[^](#fnreflarl08icxo)**Like this post, which was suggested by Richard.

|

d7603973-6774-4bb5-8115-7307a1540bf9

|

StampyAI/alignment-research-dataset/alignmentforum

|

Alignment Forum

|

Alignment Newsletter #42

Cooperative IRL as a definition of human-AI group rationality, and an empirical evaluation of theory of mind vs. model learning in HRI

Find all Alignment Newsletter resources [here](http://rohinshah.com/alignment-newsletter/). In particular, you can [sign up](http://eepurl.com/dqMSZj), or look through this [spreadsheet](https://docs.google.com/spreadsheets/d/1PwWbWZ6FPqAgZWOoOcXM8N_tUCuxpEyMbN1NYYC02aM/edit?usp=sharing) of all summaries that have ever been in the newsletter.

Highlights

----------

**[AI Alignment Podcast: Cooperative Inverse Reinforcement Learning](https://futureoflife.org/2019/01/17/cooperative-inverse-reinforcement-learning-with-dylan-hadfield-menell/)** *(Lucas Perry and Dylan Hadfield-Menell)* (summarized by Richard): Dylan puts forward his conception of Cooperative Inverse Reinforcement Learning as a definition of what it means for a human-AI system to be rational, given the information bottleneck between a human's preferences and an AI's observations. He notes that there are some clear mismatches between this problem and reality, such as the CIRL assumption that humans have static preferences, and how fuzzy the abstraction of "rational agents with utility functions" becomes in the context of agents with bounded rationality. Nevertheless, he claims that this is a useful unifying framework for thinking about AI safety.

Dylan argues that the process by which a robot learns to accomplish tasks is best described not just as maximising an objective function but instead in a way which includes the system designer who selects and modifies the optimisation algorithms, hyperparameters, etc. In fact, he claims, it doesn't make sense to talk about how well a system is doing without talking about the way in which it was instructed and the type of information it got. In CIRL, this is modeled via the combination of a "teaching strategy" and a "learning strategy". The former can take many forms: providing rankings of options, or demonstrations, or binary comparisons, etc. Dylan also mentions an extension of this in which the teacher needs to learn their own values over time. This is useful for us because we don't yet understand the normative processes by which human societies come to moral judgements, or how to integrate machines into that process.

**[On the Utility of Model Learning in HRI](https://arxiv.org/abs/1901.01291)** *(Rohan Choudhury, Gokul Swamy et al)*: In human-robot interaction (HRI), we often require a model of the human that we can plan against. Should we use a specific model of the human (a so-called "theory of mind", where the human is approximately optimizing some unknown reward), or should we simply learn a model of the human from data? This paper presents empirical evidence comparing three algorithms in an autonomous driving domain, where a robot must drive alongside a human.

The first algorithm, called Theory of Mind based learning, models the human using a theory of mind, infers a human reward function, and uses that to predict what the human will do, and plans around those actions. The second algorithm, called Black box model-based learning, trains a neural network to directly predict the actions the human will take, and plans around those actions. The third algorithm, model-free learning, simply applies Proximal Policy Optimization (PPO), a deep RL algorithm, to directly predict what action the robot should take, given the current state.

Quoting from the abstract, they "find that there is a significant sample complexity advantage to theory of mind methods and that they are more robust to covariate shift, but that when enough interaction data is available, black box approaches eventually dominate". They also find that when the ToM assumptions are significantly violated, then the black-box model-based algorithm will vastly surpass ToM. The model-free learning algorithm did not work at all, probably because it cannot take advantage of knowledge of the dynamics of the system and so the learning problem is much harder.

**Rohin's opinion:** I'm always happy to see an experimental paper that tests how algorithms perform, I think we need more of these.

You might be tempted to think of this as evidence that in deep RL, a model-based method should outperform a model-free one. This isn't exactly right. The first ToM and black box model-based algorithms use an exact model of the dynamics of the environment modulo the human, that is, they can exactly predict the next state given the current state, the robot action, and the human action. The model-free algorithm must learn this from scratch, so it isn't an apples-to-apples comparison. (Typically in deep RL, both model-based and model-free algorithms have to learn the environment dynamics.) However, you *can* think of the ToM as a model-based method and the Black-box model-based algorithm as a model-free algorithm, where both algorithms have to learn the *human model*instead of the more traditional environment dynamics. With that analogy, you would conclude that model-based algorithms will be more sample efficient and more performant in low-data regimes, but will be outperformed by model-free algorithms with sufficient data, which agrees with my intuitions.

This kind of effect is a major reason for my position that the first powerful AI systems will be modular (analogous to model-based systems), but that they will eventually be replaced by more integrated, end-to-end systems (analogous to model-free systems). Initially, we will be in a (relatively speaking) low-data regime, where modular systems excel, but over time there will be more data and compute and we will transition to regimes where end-to-end systems will solve the same tasks better (though we may then have modular systems for more difficult tasks).

Technical AI alignment

======================

### Iterated amplification sequence

[The reward engineering problem](https://www.alignmentforum.org/posts/4nZRzoGTqg8xy5rr8/the-reward-engineering-problem) *(Paul Christiano)*: The reward engineering problem is the problem of designing some form of reward feedback such that if the agent learns to get high reward, then we are happy with the resulting behavior. We assume that an overseer H is providing reward for an agent A, with H smarter than A.

(Aside: Why are we assuming H is smarter, when we are typically worried about AI that is more intelligent than we are? Because we could hope to use AI tools to create this reward function, so that the human + AI team is smarter than the AI being trained. In iterated amplification, this corresponds to the distillation step.)

We could imagine three approaches: direct supervision (where H provides a reward for every action A takes), imitation learning (where A tries to mimic H, perhaps by trying to fool a discriminator that distinguishes between A and H), and inverse reinforcement learning (where we infer a reward function by observing H's behavior and use that to supervise A).

With all of these approaches, we might hope that since H is smarter than A, A will not be able to trick H with some behavior that gets high reward but is actually undesirable. However, this argument has a major flaw. When A chooses an action, the process or computation by which it chooses that action is important information, which by default (especially with neural nets) only A has access to, and so this can allow it to fool H. For example, if A is tasked with drawing original artwork, it might copy and slightly change existing artwork, which H may not realize if it cannot see how the artwork was made. We could hope to fix this problem with transparency or explanations, but this requires a lot more research.

Imitation learning and IRL have the problem that A may not be capable of doing what H does. In that case, it will be off-distribution and may have weird behavior. Direct supervision doesn't suffer from this problem, but it is very time-inefficient. This could potentially be fixed using semi-supervised learning techniques.

**Rohin's opinion:** The information asymmetry problem between H and A seems like a major issue. For me, it's the strongest argument for why transparency is a *necessary* ingredient of a solution to alignment. The argument against imitation learning and IRL is quite strong, in the sense that it seems like you can't rely on either of them to capture the right behavior. These are stronger than the arguments against [ambitious value learning](https://www.alignmentforum.org/s/4dHMdK5TLN6xcqtyc/p/5eX8ko7GCxwR5N9mN) ([AN #31](https://mailchi.mp/7d0e3916e3d9/alignment-newsletter-31)) because here we assume that H is smarter than A, which we could not do with ambitious value learning. So it does seem to me that direct supervision (with semi-supervised techniques and robustness) is the most likely path forward to solving the reward engineering problem.

There is also the question of whether it is necessary to solve the reward engineering problem. It certainly seems necessary in order to implement iterated amplification given current systems (where the distillation step will be implemented with optimization, which means that we need a reward signal), but might not be necessary if we move away from optimization or if we build systems using some technique other than iterated amplification (though even then it seems very useful to have a good reward engineering solution).

[Capability amplification](https://www.alignmentforum.org/posts/t3AJW5jP3sk36aGoC/capability-amplification) *(Paul Christiano)*: Capability amplification is the problem of taking some existing policy and producing a better policy, perhaps using much more time and compute. It is a particularly interesting problem to study because it could be used to define the goals of a powerful AI system, and it could be combined with [reward engineering](https://www.alignmentforum.org/posts/4nZRzoGTqg8xy5rr8/the-reward-engineering-problem) above to create a powerful aligned system. (Capability amplification and reward engineering are analogous to amplification and distillation respectively.) In addition, capability amplification seems simpler than the general problem of "build an AI that does the right thing", because we get to start with a weak policy A rather than nothing, and were allowed to take lots of time and computation to implement the better policy. It would be useful to tell whether the "hard part" of value alignment is in capability amplification, or somewhere else.

We can evaluate capability amplification using the concepts of reachability and obstructions. A policy C is *reachable* from another policy A if there is some chain of policies from A to C, such that at each step capability amplification takes you from the first policy to something at least as good as the second policy. Ideally, all policies would be reachable from some very simple policy. This is impossible if there exists an *obstruction*, that is a partition of policies into two sets L and H, such that it is impossible to amplify any policy in L to get a policy that is at least as good as some policy in H. Intuitively, an obstruction prevents us from getting to arbitrarily good behavior, and means that all of the policies in H are not reachable from any policy in L.

We can do further work on capability amplification. With theory, we can search for challenging obstructions, and design procedures that overcome them. With experiment, we can study capability amplification with humans (something which [Ought](https://ought.org/) is now doing).

**Rohin's opinion:** There's a clear reason for work on capability amplification: it could be used as a part of an implementation of iterated amplification. However, this post also suggests another reason for such work -- it may help us determine where the "hard part" of AI safety lies. Does it help to assume that you have lots of time and compute, and that you have access to a weaker policy?

Certainly if you just have access to a weaker policy, this doesn't make the problem any easier. If you could take a weak policy and amplify it into a stronger policy efficiently, then you could just repeatedly apply this policy-improvement operator to some very weak base policy (say, a neural net with random weights) to solve the full problem. (In other variants, you have a much stronger aligned base policy, eg. the human policy with short inputs and over a short time horizon; in that case this assumption is more powerful.) The more interesting assumption is that you have lots of time and compute, which does seem to have a lot of potential. I feel pretty optimistic that a human thinking for a long time could reach "superhuman performance" by our current standards; capability amplification asks if we can do this in a particular structured way.

### Value learning sequence

[Reward uncertainty](https://www.alignmentforum.org/s/4dHMdK5TLN6xcqtyc/p/ZiLLxaLB5CCofrzPp) *(Rohin Shah)*: Given that we need human feedback for the AI system to stay "on track" as the environment changes, we might design a system that keeps an estimate of the reward, chooses actions that optimize that reward, but also updates the reward over time based on feedback. This has a few issues: it typically assumes that the human Alice knows the true reward function, it makes a possibly-incorrect assumption about the meaning of Alice's feedback, and the AI system still looks like a long-term goal-directed agent where the goal is the current reward estimate.

This post takes the above AI system and considers what happens if you have a distribution over reward functions instead of a point estimate, and during action selection you take into account future updates to the distribution. (This is the setup of [Cooperative Inverse Reinforcement Learning](https://arxiv.org/abs/1606.03137).) While we still assume that Alice knows the true reward function, and we still require an assumption about the meaning of Alice's feedback, the resulting system looks less like a goal-directed agent.

In particular, the system no longer has an incentive to disable the system that learns values from feedback: while previously it changed the AI system's goal (a negative effect from the goal's perspective), now it provides more information about the goal (a positive effect). In addition, the system has more of an incentive to let itself be shut down. If a human is about to shut it down, it should update strongly that whatever it was doing was very bad, causing a drastic update on reward functions. It may still prevent us from shutting it down, but it will at least stop doing the bad thing. Eventually, after gathering enough information, it would converge on the true reward and do the right thing. Of course, this is assuming that the space of rewards is well-specified, which will probably not be true in practice.

[Following human norms](https://www.alignmentforum.org/posts/eBd6WvzhuqduCkYv3/following-human-norms) *(Rohin Shah)*: One approach to preventing catastrophe is to constrain the AI system to never take catastrophic actions, and not focus as much on what to do (which will be solved by progress in AI more generally). In this setting, we hope that our AI systems accelerate our rate of progress, but we remain in control and use AI systems as tools that allow us make better decisions and better technologies. Impact measures / side effect penalties aim to *define* what not to do. What if we instead *learn* what not to do? This could look like inferring and following human norms, along the lines of [ad hoc teamwork](http://www.cs.utexas.edu/users/ai-lab/?AdHocTeam).

This is different from narrow value learning for a few reasons. First, narrow value learning also learns what *to* do. Second, it seems likely that norm inference only gives good results in the context of groups of agents, while narrow value learning could be applied in singe agent settings.

The main advantages of learning norms is that this is something that humans do quite well, so it may be significantly easier than learning "values". In addition, this approach is very similar to our ways of preventing humans from doing catastrophic things: there is a shared, external system of norms that everyone is expected to follow. However, norm following is a weaker standard than [ambitious value learning](https://www.alignmentforum.org/s/4dHMdK5TLN6xcqtyc/p/5eX8ko7GCxwR5N9mN) ([AN #31](https://mailchi.mp/7d0e3916e3d9/alignment-newsletter-31)), and there are more problems as a result. Most notably, powerful AI systems will lead to rapidly evolving technologies, that cause big changes in the environment that might require new norms; norm-following AI systems may not be able to create or adapt to these new norms.

### Agent foundations

[CDT Dutch Book](https://www.alignmentforum.org/posts/wkNQdYj47HX33noKv/cdt-dutch-book) *(Abram Demski)*

[CDT=EDT=UDT](https://www.alignmentforum.org/posts/WkPf6XCzfJLCm2pbK/cdt-edt-udt) *(Abram Demski)*

### Learning human intent

**[AI Alignment Podcast: Cooperative Inverse Reinforcement Learning](https://futureoflife.org/2019/01/17/cooperative-inverse-reinforcement-learning-with-dylan-hadfield-menell/)** *(Lucas Perry and Dylan Hadfield-Menell)*: Summarized in the highlights!

**[On the Utility of Model Learning in HRI](https://arxiv.org/abs/1901.01291)** *(Rohan Choudhury, Gokul Swamy et al)*: Summarized in the highlights!

[What AI Safety Researchers Have Written About the Nature of Human Values](https://www.lesswrong.com/posts/GermiEmcS6xuZ2gBh/what-ai-safety-researchers-have-written-about-the-nature-of) *(avturchin)*: This post categorizes theories of human values along three axes. First, how complex is the description of the values? Second, to what extent are "values" defined as a function of behavior (as opposed to being a function of eg. the brain's algorithm)? Finally, how broadly applicable is the theory: could it apply to arbitrary minds, or only to humans? The post then summarizes different positions on human values that different researchers have taken.

**Rohin's opinion:** I found the categorization useful for understanding the differences between views on human values, which can be quite varied and hard to compare.

[Risk-Aware Active Inverse Reinforcement Learning](http://arxiv.org/abs/1901.02161) *(Daniel S. Brown, Yuchen Cui et al)*: This paper presents an algorithm that actively solicits demonstrations on states where it could potentially behave badly due to its uncertainty about the reward function. They use Bayesian IRL as their IRL algorithm, so that they get a distribution over reward functions. They use the most likely reward to train a policy, and then find a state from which that policy has high risk (because of the uncertainty over reward functions). They show in experiments that this performs better than other active IRL algorithms.

**Rohin's opinion:** I don't fully understand this paper -- how exactly are they searching over states, when there are exponentially many of them? Are they sampling them somehow? It's definitely possible that this is in the paper and I missed it, I did skim it fairly quickly.

Other progress in AI

====================

### Reinforcement learning

[Soft Actor-Critic: Deep Reinforcement Learning for Robotics](https://ai.googleblog.com/2019/01/soft-actor-critic-deep-reinforcement.html) *(Tuomas Haarnoja et al)*

### Deep learning

[A Comprehensive Survey on Graph Neural Networks](http://arxiv.org/abs/1901.00596) *(Zonghan Wu et al)*

[Graph Neural Networks: A Review of Methods and Applications](http://arxiv.org/abs/1812.08434) *(Jie Zhou, Ganqu Cui, Zhengyan Zhang et al)*

News

====

[Olsson to Join the Open Philanthropy Project](https://twitter.com/catherineols/status/1085702568494301185) (summarized by Dan H): Catherine Olsson, a researcher at Google Brain who was previously at OpenAI, will be joining the Open Philanthropy Project to focus on grant making for reducing x-risk from advanced AI. Given her first-hand research experience, she has knowledge of the dynamics of research groups and a nuanced understanding of various safety subproblems. Congratulations to both her and OpenPhil.

[Announcement: AI alignment prize round 4 winners](https://www.lesswrong.com/posts/nDHbgjdddG5EN6ocg/announcement-ai-alignment-prize-round-4-winners) *(cousin\_it)*: The last iteration of the AI alignment prize has concluded, with awards of $7500 each to [Penalizing Impact via Attainable Utility Preservation](https://www.alignmentforum.org/posts/mDTded2Dn7BKRBEPX/penalizing-impact-via-attainable-utility-preservation) ([AN #39](https://mailchi.mp/036ba834bcaf/alignment-newsletter-39)) and [Embedded Agency](https://www.alignmentforum.org/s/Rm6oQRJJmhGCcLvxh) ([AN #31](https://mailchi.mp/7d0e3916e3d9/alignment-newsletter-31), [AN #32](https://mailchi.mp/8f5d302499be/alignment-newsletter-32)), and $2500 each to [Addressing three problems with counterfactual corrigibility](https://www.alignmentforum.org/posts/owdBiF8pj6Lpwwdup/addressing-three-problems-with-counterfactual-corrigibility) ([AN #30](https://mailchi.mp/c1f376f3a12e/alignment-newsletter-30)) and [Three AI Safety Related Ideas](https://www.alignmentforum.org/posts/vbtvgNXkufFRSrx4j/three-ai-safety-related-ideas)/[Two Neglected Problems in Human-AI Safety](https://www.alignmentforum.org/posts/HTgakSs6JpnogD6c2/two-neglected-problems-in-human-ai-safety) ([AN #38](https://mailchi.mp/588354e4b91d/alignment-newsletter-38)).

|

b692083a-d059-4389-94d3-a861b4f28bbc

|

trentmkelly/LessWrong-43k

|

LessWrong

|

Why do you need the story?

* Boy: Why are you washing your hands?

* Shaman: Because this root is poisonous.

* Boy: Then why pull it out of the ground?

* Shaman: Because the pulp within it can cure the mosquito disease.

* Boy: But, I thought you said it was poisonous.

* Shaman: It is, but the outside is more so than the inside.

* Boy: Still, if it's poisonous, how can it cure things.

* Shaman: It's the dose that makes the poison.

* Boy: So can I eat the pulp?

* Shaman: No, because you are not sick, it's the disease that makes the medicine.

* Boy: So it's good for a sick man, but bad for me?

* Shaman: Yes, because, the sick man must suffer in order to be cured.

* Boy: Why?

* Shaman: Because he made the spirits angry, that's why he got the disease.

* Boy: So why will suffering make it better?

* Shaman: Suffering is part of admitting guilt and seeking forgiveness, the root helps the sick man atone for his transgression. Afterwards, the spirits lift the disease.

* Boy: The spirits seem evil.

* Shaman: The spirits are neither evil, nor are they, good, as we understand it.

* Boy: Ohhh

* Shaman: Here, sit, let me tell you how our world was made...

----------------------------------------

Ok, that sounds like a half believable wise mystic, right? That would make for a decent intro to a mediocre young-adult fantasy coming of age powertrip novel

Now, imagine if the shaman just left it as "The root is poisonous if you are not sick, but it helps if you are sick and eat a bit of its pulp, most of the time". No rules of thumb about dose making the poison, no speculations as to how it works, no postulation of metaphysical rules, no analogies to morality, no grand story for the creation of the universe.

That'd be one boring shaman, right? Outright unbelievable. Nobody would trust such a witcherman to take them in the middle of the jungle and give them a psychedelic brew, he's daft. The other guy though... he might be made of the right stuff to hold ayahuasca ceremonies.

--------------

|

f82e2a66-e327-4ac7-9ba0-49506b27eb1c

|

trentmkelly/LessWrong-43k

|

LessWrong

|

Analysis of Algorithms and Partial Algorithms

|

30a4d42d-7152-4928-bf80-816d516b9b37

|

trentmkelly/LessWrong-43k

|

LessWrong

|

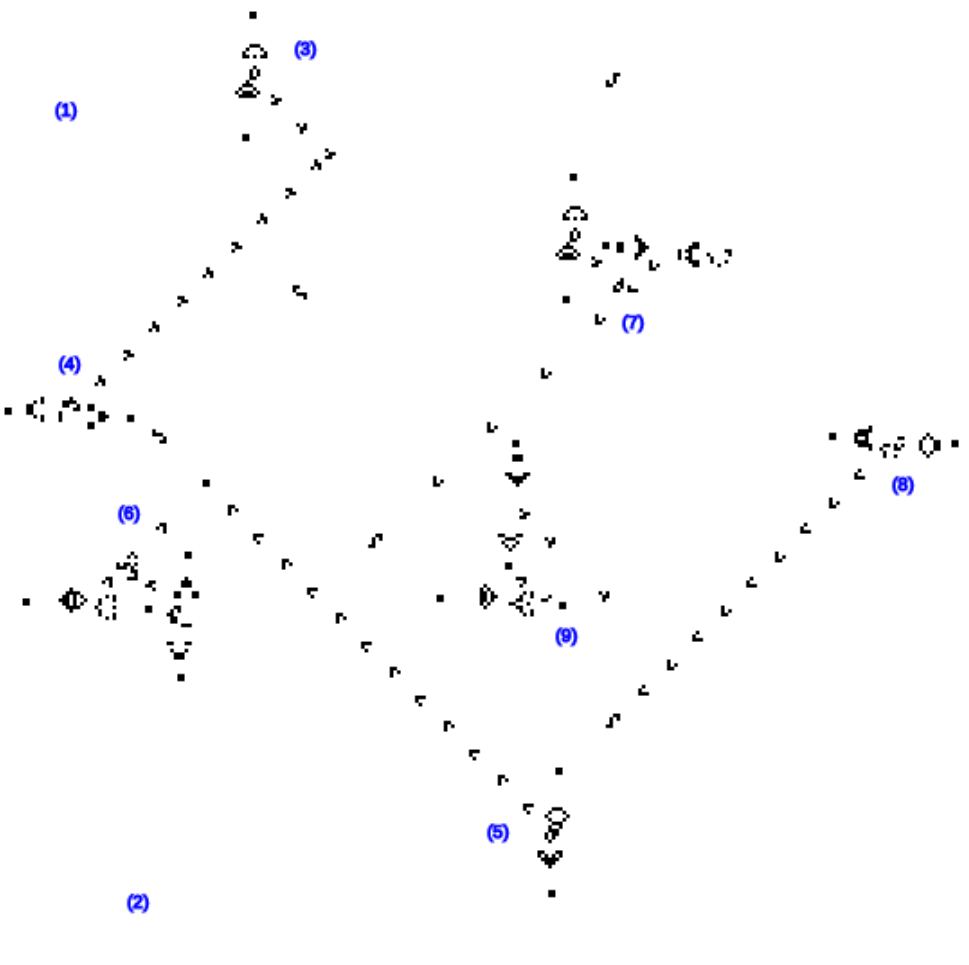

Maze-solving agents: Add a top-right vector, make the agent go to the top-right

Overview: We modify the goal-directed behavior of a trained network, without any gradients or finetuning. We simply add or subtract "motivational vectors" which we compute in a straightforward fashion.

In the original post, we defined a "cheese vector" to be "the difference in activations when the cheese is present in a maze, and when the cheese is not present in the same maze." By subtracting the cheese vector from all forward passes in a maze, the network ignored cheese.

I (Alex Turner) present a "top right vector" which, when added to forward passes in a range of mazes, attracts the agent to the top-right corner of each maze. Furthermore, the cheese and top-right vectors compose with each other, allowing (limited but substantial) mix-and-match modification of the network's runtime goals.

I provide further speculation about the algebraic value editing conjecture:

> It's possible to deeply modify a range of alignment-relevant model properties, without retraining the model, via techniques as simple as "run forward passes on prompts which e.g. prompt the model to offer nice- and not-nice completions, and then take a 'niceness vector', and then add the niceness vector to future forward passes."

I close by asking the reader to make predictions about our upcoming experimental results on language models.

This post presents some of the results in this top-right vector Google Colab, and then offers speculation and interpretation.

I produced the results in this post, but the vector was derived using a crucial observation from Peli Grietzer. Lisa Thiergart independently analyzed top-right-seeking tendencies, and had previously searched for a top-right vector. A lot of the content and infrastructure was made possible by my MATS 3.0 team: Ulisse Mini, Peli Grietzer, and Monte MacDiarmid. Thanks also to Lisa Thiergart, Aryan Bhatt, Tamera Lanham, and David Udell for feedback and thoughts.

Background

This post is straightforward, as long as you remember a few concept

|

4f09a2af-611f-4875-bab7-77b6a0468cdf

|

trentmkelly/LessWrong-43k

|

LessWrong

|

Genetically Modified Humans Born (Allegedly)

I realize normally we don't talk about the news or hot-button issues, but this is of sufficiently high importance I am posting anyway.

There is a link from Nature News on Monday here. There is a link from MIT Technology Review discussing the documents uploaded by the team behind the effort here.

Summarizing the report I heard on the radio this morning:

* The lead scientist is He Jiankui, with a team at Southern University of Science and Technology, in Shenzhen.

* 7 couples in the experiment, each with HIV+ fathers and HIV- mothers.

* CRISPR was used to genetically edit embryos to eliminate the CCR5 gene, providing HIV resistance.

* Allegedly twin girls have been born with these edits.

I don't think DNA testing of the twins has taken place yet. If anyone has a line on good, nuanced sources, I'd be interested in hearing about it.

|

7b75a4f0-b12e-4e5e-88d6-552aa253c60f

|

trentmkelly/LessWrong-43k

|

LessWrong

|

Meetup : Less Wrong in Dublin

Discussion article for the meetup : Less Wrong in Dublin

WHEN: 02 February 2013 04:30:25PM (+0000)

WHERE: 28 Dame St Dublin, Co. Dublin (The Mercantile)

A meeting of minds.

Discussion article for the meetup : Less Wrong in Dublin

|

d8933ac0-a901-45bb-8443-e9c6284c4b0f

|

trentmkelly/LessWrong-43k

|

LessWrong

|

The Joan of Arc Challenge For Objective List Theory

Introduction

The Joan of Arc challenge to objective list theory, as I shall argue, shows that only happiness is of intrinsic value. Pluralists—people who say that there are many things of intrinsic value—otherwise known as objective list theorists (maybe there’s some technical distinction between the two, but if so, I haven’t been able to find it) think that there are several things that are of intrinsic value. Objective list theorists hold roughly the following: that which is of intrinsic value is only happiness, knowledge, relationships, and achievements. To paraphrase Hitchens’ famous quote about those who proclaim “there is no god but Allah,” such people end their sentence a few words too late.

The basic idea behind the Joan of Arc challenge is that all of the things other than happiness that are on the objective list potentially hinge on whether various people take innocuous actions that no one will ever find out about. As a consequence, objective list theory has deeply counterintuitive implications about what you should do in private—holding that people being harmed is desirable. This objection will become clearer in the later sections.

All in all, I think this is potentially the second or third best argument for hedonism, after the lopsided lives challenge and maybe also the argument advanced here.

Knowledge

Lots of people think that they know something about Joan of Arc. She was burned to death, had a rap battle against Miley Cyrus, stood up for . . . (I’m actually realizing I know embarrassingly little about Joan of Arc, though I think it was something religious—maybe to do with the beginning of Protestantism?). But what if . . . everything you know about Joan of Arc is a lie? What if it was all the product of a grand conspiracy—Joan of Arc wasn’t really burned at the stakes? What if she actually lived out her life in peace and happiness and harmony?

No, I obviously don’t think that’s actually the case. But if it were, that would be good, right? If

|

430f0bff-b81f-4415-8685-8bb56ce24e1c

|

StampyAI/alignment-research-dataset/blogs

|

Blogs

|

August 2018 Newsletter

#### Updates

* New posts to the new [AI Alignment Forum](https://www.alignmentforum.org): [Buridan’s Ass in Coordination Games](https://www.alignmentforum.org/posts/4xpDnGaKz472qB4LY/buridan-s-ass-in-coordination-games); [Probability is Real, and Value is Complex](https://www.alignmentforum.org/posts/oheKfWA7SsvpK7SGp/probability-is-real-and-value-is-complex); [Safely and Usefully Spectating on AIs Optimizing Over Toy Worlds](https://www.alignmentforum.org/posts/ikN9qQEkrFuPtYd6Y/safely-and-usefully-spectating-on-ais-optimizing-over-toy)

* MIRI Research Associate Vanessa Kosoy wins a $7500 AI Alignment Prize for “[The Learning-Theoretic AI Alignment Research Agenda](https://agentfoundations.org/item?id=1816).” Applications for [the prize’s next round](https://www.lesswrong.com/posts/juBRTuE3TLti5yB35/announcement-ai-alignment-prize-round-3-winners-and-next) will be open through December 31.

* Interns from MIRI and the Center for Human-Compatible AI collaborated at an AI safety [research workshop](https://intelligence.org/workshops/#july-2018).

* This year’s [AI Summer Fellows Program](http://www.rationality.org/workshops/apply-aisfp) was very successful, and its one-day blogathon resulted in a number of interesting write-ups, such as [Dependent Type Theory and Zero-Shot Reasoning](https://www.alignmentforum.org/posts/Xfw2d5horPunP2MSK/dependent-type-theory-and-zero-shot-reasoning), [Conceptual Problems with Utility Functions](https://www.alignmentforum.org/posts/Nx4DsTpMaoTiTp4RQ/conceptual-problems-with-utility-functions) (and [follow-up](https://www.alignmentforum.org/posts/QmeguSp4Pm7gecJCz/conceptual-problems-with-utility-functions-second-attempt-at)), [Complete Class: Consequentialist Foundations](https://www.alignmentforum.org/posts/sZuw6SGfmZHvcAAEP/complete-class-consequentialist-foundations), and [Agents That Learn From Human Behavior Can’t Learn Human Values That Humans Haven’t Learned Yet](https://www.alignmentforum.org/posts/DfewqowdzDdCD7S9y/agents-that-learn-from-human-behavior-can-t-learn-human).

* See Rohin Shah’s [alignment newsletter](https://www.alignmentforum.org/posts/EQ9dBequfxmeYzhz6/alignment-newsletter-15-07-16-18) for more discussion of recent posts to the new AI Alignment Forum.

#### News and links

* The Future of Humanity Institute is seeking [project managers](https://www.fhi.ox.ac.uk/project-managers/) for its Research Scholars Programme and its Governance of AI Program.

The post [August 2018 Newsletter](https://intelligence.org/2018/08/27/august-2018-newsletter/) appeared first on [Machine Intelligence Research Institute](https://intelligence.org).

|

ae3b4690-a04c-430f-b2c8-601ba9f2e48d

|

trentmkelly/LessWrong-43k

|

LessWrong

|

Plan for mediocre alignment of brain-like [model-based RL] AGI

(This post is a more simple, self-contained, and pedagogical version of Post #14 of Intro to Brain-Like AGI Safety.)

(Vaguely related to this Alex Turner post and this John Wentworth post.)

I would like to have a technical plan for which there is a strong robust reason to believe that we’ll get an aligned AGI and a good future. This post is not such a plan.

However, I also don’t have a strong reason to believe that this plan wouldn’t work. Really, I want to throw up my hands and say “I don’t know whether this would lead to a good future or not”. By “good future” here I don’t mean optimally-good—whatever that means—but just “much better than the world today, and certainly much better than a universe full of paperclips”. I currently have no plan, not even a vague plan, with any prayer of getting to an optimally-good future. That would be a much narrower target to hit.

Even so, that makes me more optimistic than at least some people.[1] Or at least, more optimistic about this specific part of the story. In general I think many things can go wrong as we transition to the post-AGI world—see discussion by Dai & Soares—and overall I feel very doom-y, particularly for reasons here.

This plan is specific to the possible future scenario (a.k.a. “threat model” if you’re a doomer like me) that future AI researchers will develop “brain-like AGI”, i.e. learning algorithms that are similar to the brain’s within-lifetime learning algorithms. (I am not talking about evolution-as-a-learning-algorithm.) These algorithms, I claim, are in the general category of model-based reinforcement learning. Model-based RL is a big and heterogeneous category, but I suspect that for any kind of model-based RL AGI, this plan would be at least somewhat applicable. For very different technological paths to AGI, this post is probably pretty irrelevant.

But anyway, if someone published an algorithm for x-risk-capable brain-like AGI tomorrow, and we urgently needed to do something, this blog post i

|

e61976b8-e546-4472-b737-9b94268fe2bc

|

trentmkelly/LessWrong-43k

|

LessWrong

|

Causal confusion as an argument against the scaling hypothesis

Abstract

We discuss the possibility that causal confusion will be a significant alignment and/or capabilities limitation for current approaches based on "the scaling paradigm": unsupervised offline training of increasingly large neural nets with empirical risk minimization on a large diverse dataset. In particular, this approach may produce a model which uses unreliable (“spurious”) correlations to make predictions, and so fails on “out-of-distribution” data taken from situations where these correlations don’t exist or are reversed. We argue that such failures are particularly likely to be problematic for alignment and/or safety in the case when a system trained to do prediction is then applied in a control or decision-making setting.

We discuss:

* Arguments for this position

* Counterarguments

* Possible approaches to solving the problem

* Key Cruxes for this position and possible fixes

* Practical implications for capability and alignment

* Relevant research directions

We believe this topic is important because many researchers seem to view scaling as a path toward AI systems that 1) are highly competent (e.g. human-level or superhuman), 2) understand human concepts, and 3) reason with human concepts.

We believe the issues we present here are likely to prevent (3), somewhat less likely to prevent (2), and even less likely to prevent (1) (but still likely enough to be worth considering). Note that (1) and (2) have to do with systems’ capabilities, and (3) with their alignment; thus this issue seems likely to be differentially bad from an alignment point of view.

Our goal in writing this document is to clearly elaborate our thoughts, attempt to correct what we believe may be common misunderstandings, and surface disagreements and topics for further discussion and research.

A DALL-E 2 generation for "a green stop sign in a field of red flowers".

Current foundation models still fail on examples that seem simple for humans, and causal confusion and spurio

|

699cf16f-5e64-4247-bd83-169b8e6b2989

|

StampyAI/alignment-research-dataset/eaforum

|

Effective Altruism Forum

|

My highly personal skepticism braindump on existential risk from artificial intelligence.

**Summary**

-----------

This document seeks to outline why I feel uneasy about high existential risk estimates from AGI (e.g., 80% doom by 2070). When I try to verbalize this, I view considerations like

* selection effects at the level of which arguments are discovered and distributed

* community epistemic problems, and

* increased uncertainty due to chains of reasoning with imperfect concepts

as real and important.

I still think that existential risk from AGI is important. But I don’t view it as certain or close to certain, and I think that something is going wrong when people see it as all but assured.

**Discussion of weaknesses**

----------------------------

I think that this document was important for me personally to write up. However, I also think that it has some significant weaknesses:

1. There is some danger in verbalization leading to rationalization.

2. It alternates controversial points with points that are dead obvious.

3. It is to a large extent a reaction to my imperfectly digested understanding of a worldview pushed around the [ESPR](https://espr-camp.org/)/CFAR/MIRI/LessWrong cluster from 2016-2019, which nobody might hold now.

In response to these weaknesses:

1. I want to keep in mind that I do want to give weight to my gut feeling, and that I might want to update on a feeling of uneasiness rather than on its accompanying reasonings or rationalizations.

2. Readers might want to keep in mind that parts of this post may look like a [bravery debate](https://slatestarcodex.com/2013/05/18/against-bravery-debates/). But on the other hand, I've seen that the points which people consider obvious and uncontroversial vary from person to person, so I don’t get the impression that there is that much I can do on my end for the effort that I’m willing to spend.

3. Readers might want to keep in mind that actual AI safety people and AI safety proponents may hold more nuanced views, and that to a large extent I am arguing against a “Nuño of the past” view.

Despite these flaws, I think that this text was personally important for me to write up, and it might also have some utility to readers.

**Uneasiness about chains of reasoning with imperfect concepts**

----------------------------------------------------------------

### **Uneasiness about conjunctiveness**

It’s not clear to me how conjunctive AI doom is. Proponents will argue that it is very disjunctive, that there are lot of ways that things could go wrong. I’m not so sure.

In particular, when you see that a parsimonious decomposition (like Carlsmith’s) tends to generate lower estimates, you can conclude:

1. That the method is producing a biased result, and trying to account for that

2. That the topic under discussion is, in itself, conjunctive: that there are several steps that need to be satisfied. For example, “AI causing a big catastrophe” and “AI causing human exinction given that it has caused a large catastrophe” seem like they are two distinct steps that would need to be modelled separately,

I feel uneasy about only doing 1.) and not doing 2.) I think that the principled answer might be to split some probability into each case. Overall, though, I’d tend to think that AI risk is more conjunctive than it is disjunctive