---
viewer: false
tags:
- uv-script
- computer-vision
- object-detection
- sam3
- image-processing
license: apache-2.0
---

SAM3 Object Detection

Detect objects in images using Meta's SAM3 (Segment Anything Model 3) with text prompts. Run zero-shot object detection over HuggingFace datasets using natural-language descriptions.

Quick Start

Requires a GPU. Use HuggingFace Jobs for cloud execution:

```bash
hf jobs uv run --flavor a100-large \
  -s HF_TOKEN=$HF_TOKEN \
  https://huggingface.co/datasets/uv-scripts/sam3/raw/main/detect-objects.py \
  input-dataset \
  output-dataset \
  --class-name photograph
```

Example Output

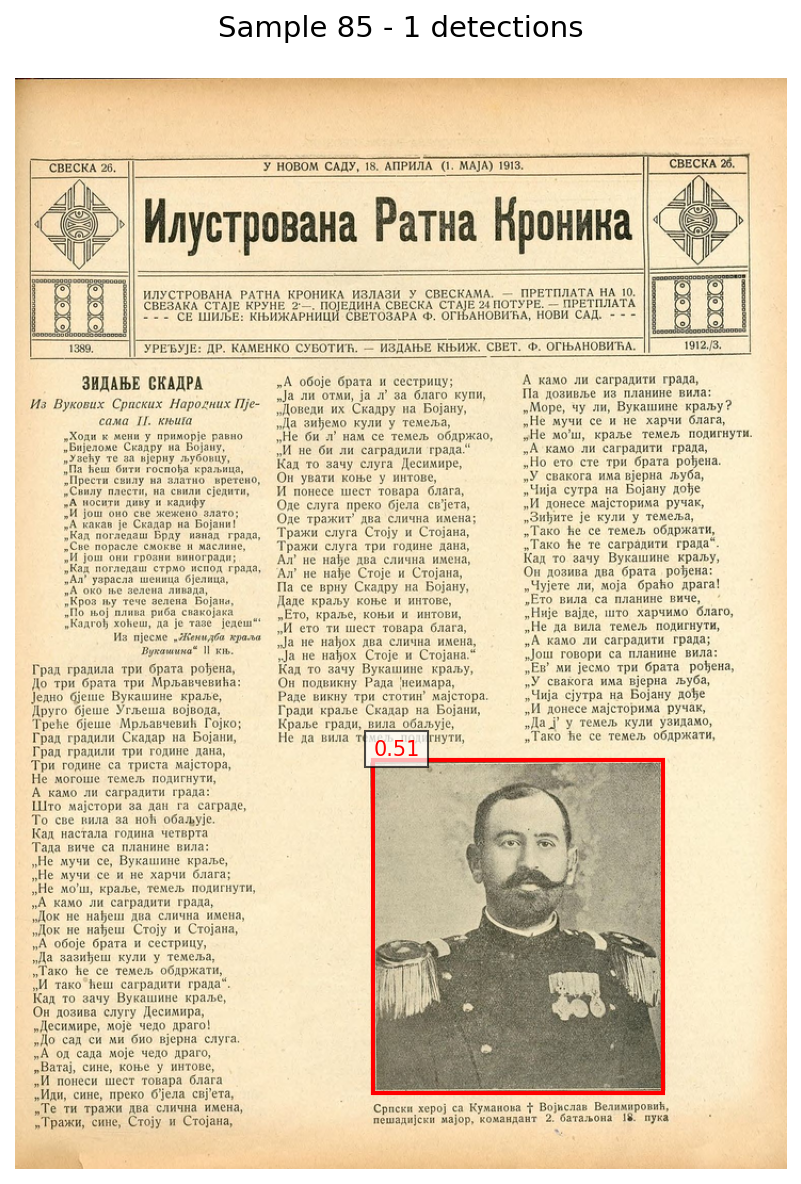

Here's an example of detected objects (photographs in historical newspapers) with bounding boxes and confidence scores:

Photograph detected in a historical newspaper with bounding box and confidence score. Generated from davanstrien/newspapers-image-predictions.

Local Execution

If you have a CUDA GPU locally:

```bash
uv run detect-objects.py INPUT OUTPUT --class-name CLASSNAME
```

Arguments

Required:

- `input_dataset` - Input HF dataset ID
- `output_dataset` - Output HF dataset ID
- `--class-name` - Object class to detect (e.g., `"photograph"`, `"animal"`, `"table"`)

Common options:

- `--confidence-threshold FLOAT` - Min confidence (default: 0.5)
- `--batch-size INT` - Batch size (default: 4)
- `--max-samples INT` - Limit samples for testing
- `--image-column STR` - Image column name (default: "image")
- `--private` - Make output private

All options

```
--mask-threshold FLOAT   Mask generation threshold (default: 0.5)
--split STR              Dataset split (default: "train")
--shuffle                Shuffle before processing
--model STR              Model ID (default: "facebook/sam3")
--dtype STR              Precision: float32|float16|bfloat16
--hf-token STR           HF token (or use HF_TOKEN env var)
```

HuggingFace Jobs Examples

Historical Newspapers

Detect photographs in historical newspaper scans:

```bash
hf jobs uv run --flavor a100-large \
  -s HF_TOKEN=$HF_TOKEN \
  https://huggingface.co/datasets/uv-scripts/sam3/raw/main/detect-objects.py \
  davanstrien/newspapers-with-images-after-photography \
  my-username/newspapers-detected \
  --class-name photograph \
  --confidence-threshold 0.6 \
  --batch-size 8
```

Document Tables

Detect tables in document scans:

```bash
hf jobs uv run --flavor a100-large \
  -s HF_TOKEN=$HF_TOKEN \
  https://huggingface.co/datasets/uv-scripts/sam3/raw/main/detect-objects.py \
  my-documents \
  documents-with-tables \
  --class-name table
```

Wildlife Camera Traps

Detect animals in camera trap images:

```bash
hf jobs uv run --flavor a100-large \
  -s HF_TOKEN=$HF_TOKEN \
  https://huggingface.co/datasets/uv-scripts/sam3/raw/main/detect-objects.py \
  wildlife-images \
  wildlife-detections \
  --class-name animal \
  --confidence-threshold 0.5
```

Quick Testing

Test on a small subset before a full run:

```bash
hf jobs uv run --flavor a100-large \
  -s HF_TOKEN=$HF_TOKEN \
  https://huggingface.co/datasets/uv-scripts/sam3/raw/main/detect-objects.py \
  large-dataset \
  test-output \
  --class-name object \
  --max-samples 20
```

Using Different GPU Flavors

```bash
# L4 (cost-effective)
--flavor l4x1

# A100 (fastest)
--flavor a100-large
```

See HF Jobs pricing.

Output Format

Adds an `objects` column with ClassLabel-based detections:

```python
{
    "objects": [
        {
            "bbox": [x, y, width, height],
            "category": 0,  # Always 0 for single class
            "score": 0.87
        }
    ]
}
```
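The `bbox` layout above is `[x, y, width, height]`, while drawing and cropping tools such as PIL usually expect corner coordinates. The following is a minimal sketch of a conversion, assuming `(x, y)` is the top-left corner in pixels (the COCO convention); `xywh_to_xyxy` and `crop_box` are hypothetical helper names, not part of the script.

```python
from typing import List, Sequence, Tuple


def xywh_to_xyxy(bbox: Sequence[float]) -> List[float]:
    """Convert [x, y, width, height] to [x_min, y_min, x_max, y_max].

    Assumption: (x, y) is the top-left corner in pixel coordinates.
    """
    x, y, w, h = bbox
    return [x, y, x + w, y + h]


def crop_box(bbox: Sequence[float], image_size: Sequence[int]) -> Tuple[int, int, int, int]:
    """Return an integer (left, upper, right, lower) box clipped to the image.

    image_size is (width, height), matching PIL's Image.size.
    """
    width, height = image_size
    x0, y0, x1, y1 = xywh_to_xyxy(bbox)
    # Round to whole pixels and clip so boxes that spill past an edge stay valid.
    left = max(0, int(round(x0)))
    upper = max(0, int(round(y0)))
    right = min(width, int(round(x1)))
    lower = min(height, int(round(y1)))
    return (left, upper, right, lower)
```

For example, `sample["image"].crop(crop_box(obj["bbox"], sample["image"].size))` should cut a single detection out of a loaded sample, since PIL's `Image.crop` takes a `(left, upper, right, lower)` box.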

Load and use:

```python
from datasets import load_dataset

ds = load_dataset("username/output", split="train")

# ClassLabel feature preserves your class name
class_name = ds.features["objects"].feature["category"].names[0]
print(f"Detected class: {class_name}")

for sample in ds:
    for obj in sample["objects"]:
        print(f"{class_name}: {obj['score']:.2f} at {obj['bbox']}")
```
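Since `--confidence-threshold` sets the minimum score at detection time, a stricter cutoff can be applied afterwards without rerunning the job. Lowering it would need a new run, assuming the script discards detections below the threshold rather than storing them. A minimal sketch, assuming `objects` is a list of dicts as in the loop above; `keep_confident` is a hypothetical helper:

```python
from typing import Any, Dict, List


def keep_confident(sample: Dict[str, Any], min_score: float) -> Dict[str, List[Dict[str, Any]]]:
    """Keep only detections whose score is at least min_score.

    Returns just the "objects" key, so a datasets map() call would update
    that column and leave the others (such as the image) untouched.
    """
    return {
        "objects": [obj for obj in sample["objects"] if obj["score"] >= min_score]
    }
```

With `datasets`, something like `ds.map(lambda s: keep_confident(s, 0.7), features=ds.features)` should apply it to every row; passing `features` is intended to keep the `ClassLabel` type on `category` rather than letting `map` re-infer it as a plain integer.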

Detecting Multiple Object Types

To detect multiple object types, run the script multiple times with different --class-name values:

```bash
# Detect photographs
hf jobs uv run ... --class-name photograph

# Detect illustrations
hf jobs uv run ... --class-name illustration

# Merge results as needed
```
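One way to merge is to line up the `objects` columns row by row. The sketch below assumes every run used the same input dataset and split, without `--shuffle` and with the same `--max-samples`, so row *i* refers to the same image in each output. `merge_runs` is a hypothetical helper, and its combined `category` index points into the sorted class names, not into any label set defined by the script.

```python
from typing import Any, Dict, List, Tuple

Detection = Dict[str, Any]


def merge_runs(
    runs: Dict[str, List[List[Detection]]],
) -> Tuple[List[str], List[List[Detection]]]:
    """Merge per-image detections from several single-class runs.

    `runs` maps a class name to that run's "objects" column (one list of
    detections per image). Returns (class_names, merged), where each merged
    detection's "category" indexes into class_names (sorted by name).
    Assumes all runs cover the same images in the same order.
    """
    class_names = sorted(runs)
    sizes = {len(column) for column in runs.values()}
    if len(sizes) > 1:
        raise ValueError(f"runs cover different numbers of images: {sorted(sizes)}")
    n_images = sizes.pop() if sizes else 0

    merged: List[List[Detection]] = []
    for i in range(n_images):
        per_image: List[Detection] = []
        for label, name in enumerate(class_names):
            for obj in runs[name][i]:
                # Each run used category 0 for its single class; relabel here.
                per_image.append(
                    {"bbox": obj["bbox"], "category": label, "score": obj["score"]}
                )
        merged.append(per_image)
    return class_names, merged
```

You might build `runs` as `{"photograph": list(photos["objects"]), "illustration": list(illus["objects"])}` from two loaded output datasets (the variable names are illustrative).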

Performance

| GPU | Batch Size | ~Images/sec |
|---|---|---|
| L4 | 4-8 | 2-4 |
| A10 | 8-16 | 4-6 |

Throughput varies with image size and detection complexity.
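For a rough runtime estimate before launching a job, divide the number of images by the throughput range in the table. A minimal sketch that ignores model loading, dataset download, and upload time; `estimate_runtime_minutes` is a hypothetical helper:

```python
from typing import Tuple


def estimate_runtime_minutes(
    n_images: int, images_per_sec: Tuple[float, float]
) -> Tuple[float, float]:
    """Return (best_case, worst_case) inference minutes for n_images.

    images_per_sec is a (low, high) throughput range, e.g. (2, 4) for the
    L4 row of the table. Excludes startup, download, and upload overhead.
    """
    low, high = images_per_sec
    if low <= 0 or high < low:
        raise ValueError("expected 0 < low <= high")
    # Fastest throughput gives the best case, slowest gives the worst case.
    return (n_images / high / 60, n_images / low / 60)
```

By the table's L4 figures of 2-4 images/sec, a 12,000-image dataset works out to roughly 50 to 100 minutes of inference.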

Common Use Cases

- Documents: `--class-name table` or `--class-name figure`
- Newspapers: `--class-name photograph` or `--class-name illustration`
- Wildlife: `--class-name animal` or `--class-name bird`
- Products: `--class-name product` or `--class-name label`

Troubleshooting

- No CUDA: Use HF Jobs (see examples above)
- OOM errors: Reduce `--batch-size`
- Few detections: Lower `--confidence-threshold` or try different class descriptions
- Wrong column: Use `--image-column your_column_name`

About SAM3

SAM3 is Meta's zero-shot vision model. Describe an object with a short natural-language prompt (for example "photograph" or "table") and it will detect matching instances, with no training required.

Note: This script installs transformers from git, because SAM3 is not yet in a stable release.

See Also

More UV scripts at huggingface.co/uv-scripts:

- dataset-creation - Create HF datasets from files

- vllm - Fast LLM inference

- ocr - Document OCR

License

Apache 2.0