Please make MoE models

You guys are making the best models for local inference; I love the GLM4-32B and 9B models. I can't wait to see what a wonderful MoE you could make! It would be great if it had both thinking and non-thinking modes, like the Qwen3 series of models.

We will make it. Although we don't know the open-source release date yet, we will do our best and will share information as soon as there is new progress.

I agree. I'd love to see a smaller one similar to Qwen3 30B A3B, and a bigger one too. Native multimodality and more attention heads for better instruction following over a longer context would also be nice to see.

Yes, a GLM 30B A3B would be great.

I vote for 70~80B-A8~9B; A3B is fast but too weak in practice.


Thank you, looking forward to it.


GLM already surprised me with the 32B and 9B models, which matched or outperformed SOTA models on similar tasks. I expect their MoE model to be just as good. If it is also multimodal, like Mistral's 24B multimodal model, that would be the cherry on the cake for the open-source community!

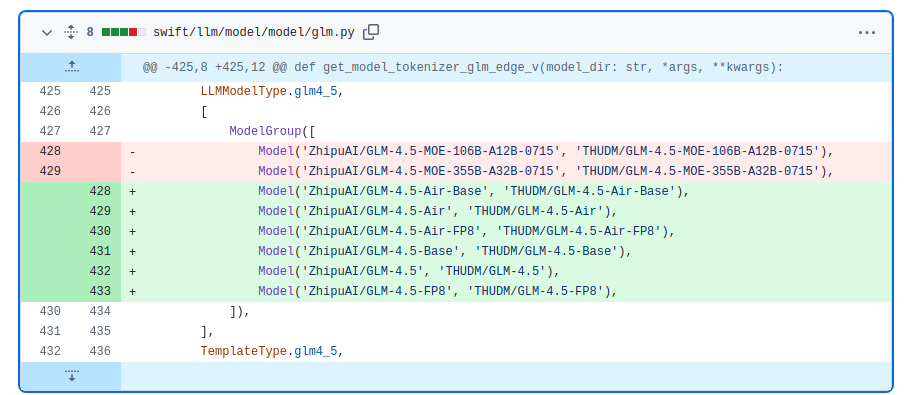

They will release a 100B A10B MoE model

The 100B A10B MoE model in the PR is not the final one; that is only a test name. Please wait for our final checkpoint. The size may change (the figure is approximate).

Most people have 32 GB of RAM, so I would like to see a MoE with around 30-40B total parameters.
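To put rough numbers on the 32 GB argument, here is a back-of-the-envelope sketch in Python. It assumes every expert stays resident in memory (no offloading), so the total parameter count, not the active count, sets the footprint. It counts weights only; the 80% `headroom` factor is a hypothetical allowance for the OS, KV cache, and runtime, not a measured figure.

```python
# Rough weight-memory estimate for a MoE checkpoint.
# Assumption: all experts are kept in RAM (no offloading), so TOTAL params
# drive memory use; active params only affect per-token compute.
# KV cache, activations, and runtime overhead are ignored here.


def weight_memory_gb(total_params_b: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes)."""
    # billions of params * bits per weight / 8 bits per byte = gigabytes
    return total_params_b * bits_per_weight / 8


def fits_in_ram(total_params_b: float, bits_per_weight: float,
                ram_gb: float = 32.0, headroom: float = 0.8) -> bool:
    """True if the weights fit within `headroom` of the available RAM.

    headroom=0.8 is a hypothetical default that leaves ~20% of RAM for
    the OS, the KV cache, and the inference runtime.
    """
    return weight_memory_gb(total_params_b, bits_per_weight) <= ram_gb * headroom


if __name__ == "__main__":
    for params in (30, 40, 100):
        for bits in (4, 8):
            gb = weight_memory_gb(params, bits)
            print(f"{params}B @ {bits}-bit: ~{gb:.1f} GB of weights, "
                  f"fits in 32 GB: {fits_in_ram(params, bits)}")
```

Under these assumptions, a 30-40B MoE at 4 bits needs roughly 15-20 GB for weights and fits on a 32 GB machine, while a 100B model needs about 50 GB even at 4 bits. Real 4-bit formats also store per-group scale data, so actual files come out somewhat larger than this estimate.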

This PR comes from the ModelScope community, not us. Please wait for our update.

Please release an AWQ version as well.