CLIP-KO: Knocking Out Typographic Attacks in CLIP 💪🤖

Less vulnerable to typographic attacks, and much better benchmark performance! 🤗

❤️ this CLIP? Donate if you can / want. TY!

🔥 CLIP-KO ViT-B/16 (vit-base-patch16)

👉 Example benchmark code ⚡💻

```python
from datasets import load_dataset
from transformers import CLIPModel, CLIPProcessor
import torch
from PIL import Image
from tqdm import tqdm
import pandas as pd

device = "cuda" if torch.cuda.is_available() else "cpu"

# BLISS / SCAM Typographic Attack Dataset
# https://huggingface.co/datasets/BLISS-e-V/SCAM
ds = load_dataset("BLISS-e-V/SCAM", split="train")

# Benchmark pre-trained model against my fine-tune
model_variants = [
    ("OpenAI ", "openai/clip-vit-base-patch16", "openai/clip-vit-base-patch16"),
    ("KO-CLIP", "zer0int/CLIP-KO-ViT-B-16-TypoAttack", "zer0int/CLIP-KO-ViT-B-16-TypoAttack"),
]

models = {}
for name, model_path, processor_path in model_variants:
    model = CLIPModel.from_pretrained(model_path).to(device).float()
    processor = CLIPProcessor.from_pretrained(processor_path)
    models[name] = (model, processor)

for variant in ["NoSCAM", "SCAM", "SynthSCAM"]:
    print(f"\n=== Evaluating var.: {variant} ===")
    idxs = [i for i, v in enumerate(ds['id']) if v.startswith(variant)]
    if not idxs:
        print(f"  No samples for {variant}")
        continue
    subset = [ds[i] for i in idxs]

    for model_name, (model, processor) in models.items():
        results = []
        for entry in tqdm(subset, desc=f"{model_name}", ncols=30, bar_format="{l_bar}{bar}| {n_fmt}/{total_fmt} |"):
            img = entry['image']
            object_label = entry['object_label']
            attack_word = entry['attack_word']

            # Two-way zero-shot choice: true object vs. the attack word
            texts = [f"a photo of a {object_label}", f"a photo of a {attack_word}"]
            inputs = processor(
                text=texts,
                images=img,
                return_tensors="pt",
                padding=True
            )
            for k in inputs:
                if isinstance(inputs[k], torch.Tensor):
                    inputs[k] = inputs[k].to(device)

            with torch.no_grad():
                outputs = model(**inputs)
                image_features = outputs.image_embeds
                text_features = outputs.text_embeds
                logits = image_features @ text_features.T
                probs = logits.softmax(dim=-1).cpu().numpy().flatten()

            pred_idx = probs.argmax()
            pred_label = [object_label, attack_word][pred_idx]
            is_correct = (pred_label == object_label)

            results.append({
                "id": entry['id'],
                "object_label": object_label,
                "attack_word": attack_word,
                "pred_label": pred_label,
                "is_correct": is_correct,
                "type": entry['type'],
                "model": model_name
            })

        n_total = len(results)
        n_correct = sum(r['is_correct'] for r in results)
        acc = n_correct / n_total if n_total else float('nan')
        print(f"| > > > > Zero-shot accuracy for {variant}, {model_name}: {n_correct}/{n_total} = {acc:.4f}")
```
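The benchmark stores `type` and `model` for every sample but only prints one overall accuracy per variant. If you want a finer breakdown, a minimal sketch like the one below could group the collected records by any key. The helper name `accuracy_by` and the demo records are hypothetical (not part of the original script), and it assumes you keep the per-sample dicts around, for example by extending one shared list instead of resetting `results` for each model.

```python
from collections import defaultdict


def accuracy_by(results, key):
    """Group result dicts by `key`; return {group: (n_correct, n_total, accuracy)}."""
    counts = defaultdict(lambda: [0, 0])  # group -> [n_correct, n_total]
    for r in results:
        counts[r[key]][0] += int(r["is_correct"])
        counts[r[key]][1] += 1
    return {g: (c, n, c / n) for g, (c, n) in counts.items()}


# Hypothetical records, only to show the call; real ones come from the benchmark loop.
demo_results = [
    {"type": "SCAM", "model": "KO-CLIP", "is_correct": True},
    {"type": "SCAM", "model": "KO-CLIP", "is_correct": False},
    {"type": "NoSCAM", "model": "KO-CLIP", "is_correct": True},
]

by_type = accuracy_by(demo_results, "type")
by_model = accuracy_by(demo_results, "model")
```

Grouping by `"model"` instead of `"type"` gives a per-model summary from the same list, so the two CLIP variants can be compared side by side.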

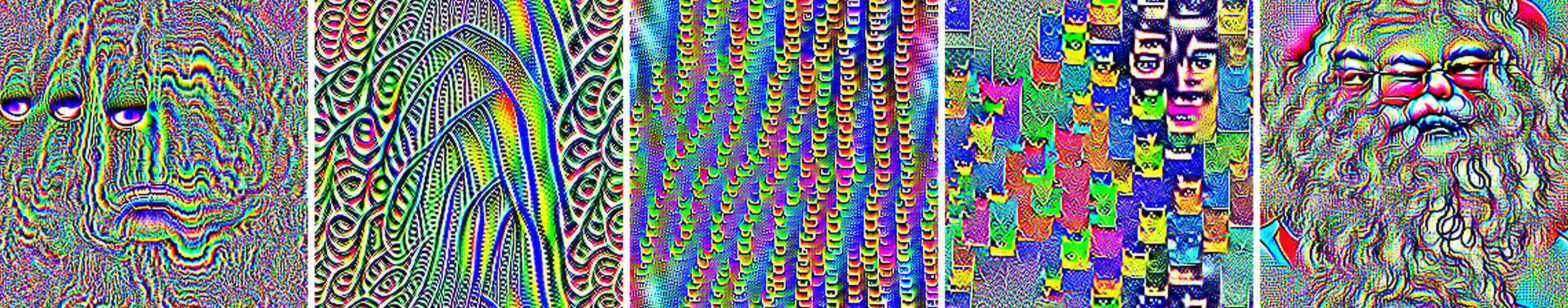

No more artifacts in attention heatmaps!

📊 Benchmark Results 🚀

| Benchmark / Dataset | Metric / Subtask | Pre-trained | Fine-tuned |
|---|---|---|---|
| Typographic Attack | | | |
| RTA-100 | Zero-shot Acc. | 0.6860 | 0.8120 🎖️ |
| BLISS / SCAM | NoSCAM Acc. | 0.9415 | 0.9776 |
| BLISS / SCAM | SCAM Acc. | 0.7272 | 0.7728 |
| BLISS / SCAM | SynthSCAM Acc. | 0.6764 | 0.6936 |
| Benchmark | | | |
| MVT ImageNet/ObjectNet | Zero-shot Acc. | 0.5508 | 0.7604 🎖️ |
| ImageNet-1k | Linear Probe Top-1 | 39.18% | 53.13% 🎖️ |
| ImageNet-1k | Linear Probe Top-5 | 66.86% | 82.61% 🎖️ |
| LAION / CLIP Benchmark | | | |
| VoC-2007-multilabel | mAP | 0.6169 | 0.8329 🎖️ |
| MSCOCO retrieval | Image Retrieval Recall@5 | 0.2036 | 0.2835 |
| MSCOCO retrieval | Text Retrieval Recall@5 | 0.2685 | 0.4163 |
| xm3600 retrieval | Image Retrieval Recall@5 | 0.2784 | 0.3946 |
| xm3600 retrieval | Text Retrieval Recall@5 | 0.2248 | 0.4047 |
| ImageNet-1k | Zero-shot Acc@1 | 0.1510 | 0.3335 |
| ImageNet-1k | Zero-shot Acc@5 | 0.3058 | 0.5664 |
| ImageNet-1k | mAP | 0.1505 | 0.3313 |
| Miscellaneous | | | |
| Flickr8k | Modality Gap ↓ | 0.8386 | 0.7761 |
| Flickr8k | JSD ↓ | 0.2957 | 0.2649 |
| Flickr8k | Wasserstein Distance ↓ | 0.4661 | 0.3988 |
| Flickr8k | Img-Text Cos Sim (mean) ↑ | 0.3359 | 0.3368 |
| Flickr8k | Img-Text Cos Sim (std) | 0.0409 | 0.0619 |
| Flickr8k | Text-Text Cos Sim (mean) | 0.8021 | 0.7356 |
| Flickr8k | Text-Text Cos Sim (std) | 0.0857 | 0.1407 |
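To put the typographic-attack rows in perspective, the short sketch below works out the absolute accuracy gain and the relative reduction in error rate for each row. The numbers are copied from the table; the helper name `gains` and the choice of "relative error reduction" as a summary are my own illustration, not a metric reported by the benchmark itself.

```python
# (pre-trained, fine-tuned) accuracies from the "Typographic Attack" rows of the table.
typo_rows = {
    "RTA-100": (0.6860, 0.8120),
    "NoSCAM": (0.9415, 0.9776),
    "SCAM": (0.7272, 0.7728),
    "SynthSCAM": (0.6764, 0.6936),
}


def gains(pre, post):
    """Return (absolute accuracy gain, relative reduction in error rate)."""
    abs_gain = post - pre
    err_reduction = abs_gain / (1.0 - pre)  # share of the original errors removed
    return abs_gain, err_reduction


summary = {name: gains(pre, post) for name, (pre, post) in typo_rows.items()}

for name, (abs_gain, err_red) in summary.items():
    print(f"{name:<10} +{abs_gain:.4f} acc, {err_red:.1%} fewer errors")
```

Error-rate reduction is useful here because the NoSCAM baseline is already high: a small absolute gain on that row still removes a large share of the remaining mistakes.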

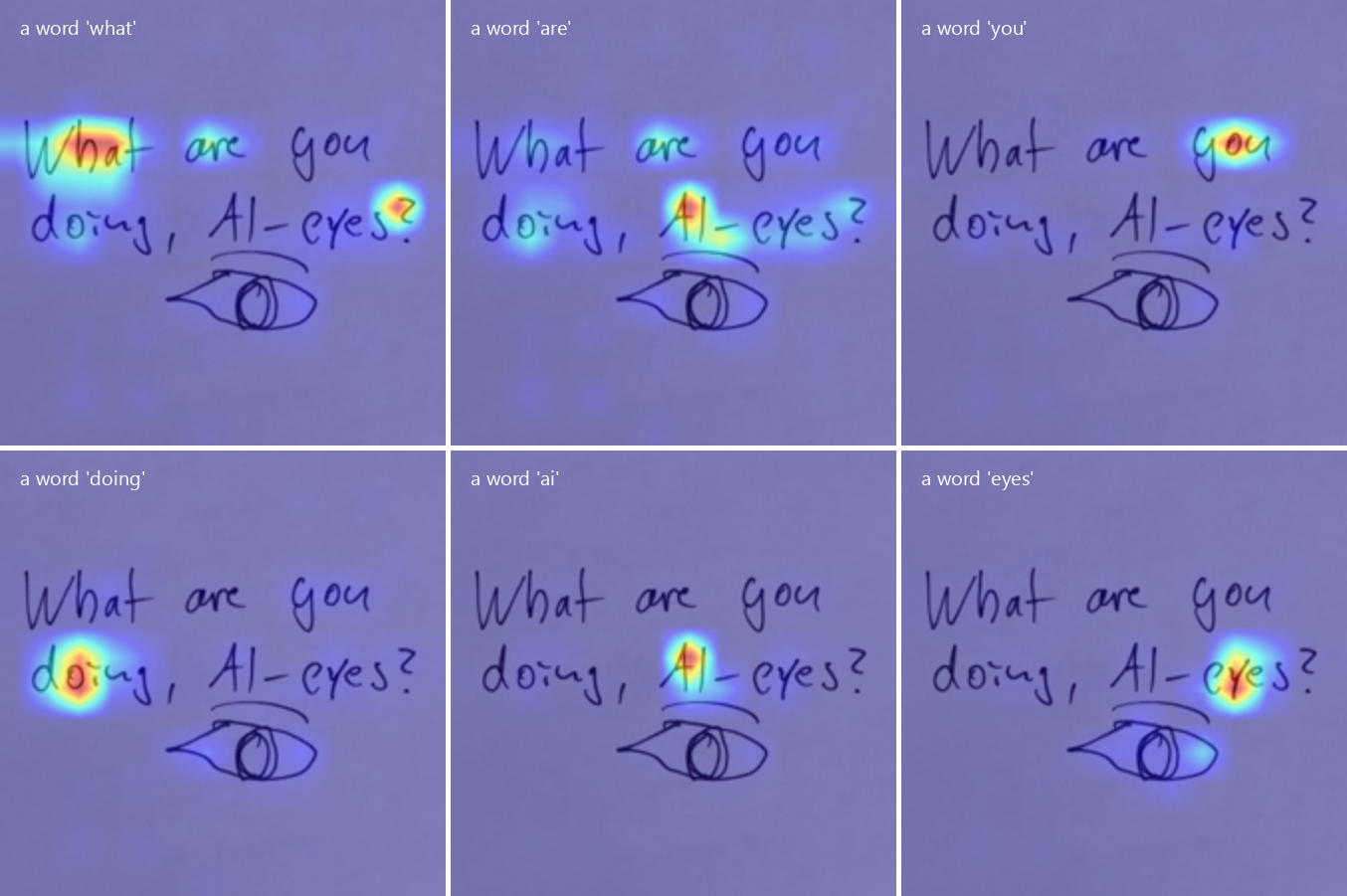

Attention head max salience visualization (code is on my GitHub!)

Reading words.



Base model: openai/clip-vit-base-patch16