---
base_model: Qwen/Qwen2.5-1.5B-Instruct
library_name: transformers
license: apache-2.0
tags:
- llama-factory
- full
- generated_from_trainer
model-index:
- name: Qwen2.5-1.5B-Instruct-SFT-BigmathV_Simple_Balanced-LR1.0e-5-EPOCHS2
  results: []
pipeline_tag: question-answering
---

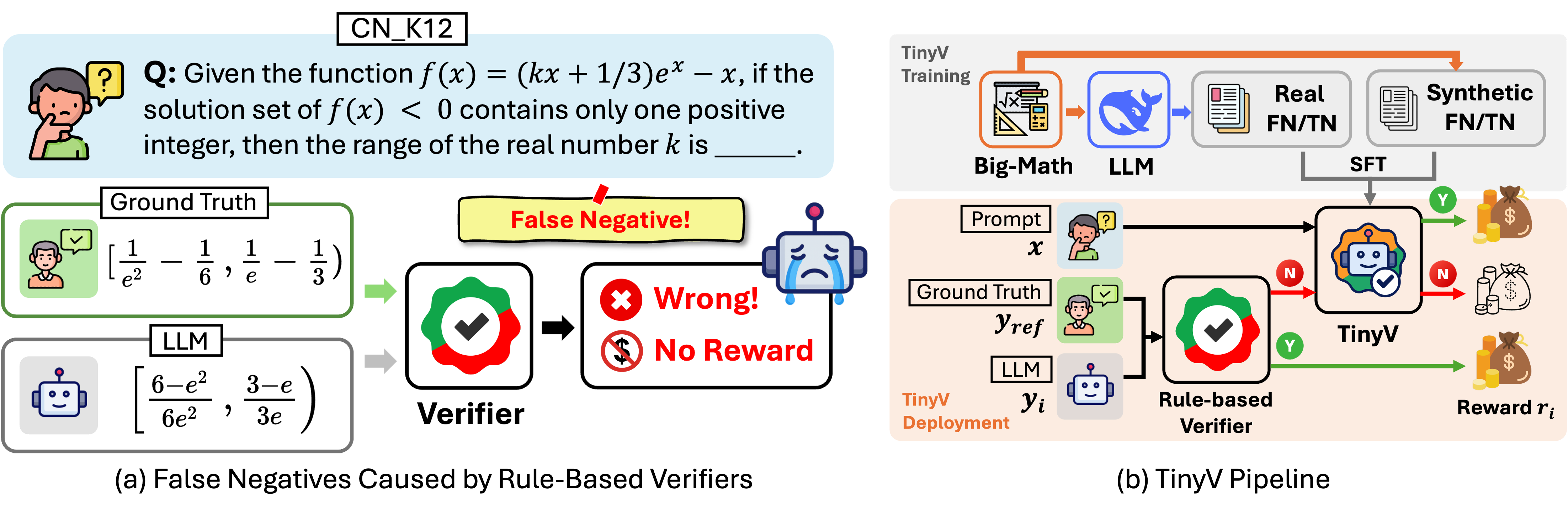

TinyV is a reward system for efficient RL post-training that detects false negatives in current rule-based verifiers and provides more accurate reward signals via a small LLM during RL training. Experiments show that TinyV incurs only 6% additional computational cost while significantly increasing both RL efficiency and final model performance.

Abstract: Reinforcement Learning (RL) has become a powerful tool for enhancing the reasoning abilities of large language models (LLMs) by optimizing their policies with reward signals. Yet, RL's success relies on the reliability of rewards, which are provided by verifiers. In this paper, we expose and analyze a widespread problem--false negatives--where verifiers wrongly reject correct model outputs. Our in-depth study of the Big-Math-RL-Verified dataset reveals that over 38% of model-generated responses suffer from false negatives, where the verifier fails to recognize correct answers. We show, both empirically and theoretically, that these false negatives severely impair RL training by depriving the model of informative gradient signals and slowing convergence. To mitigate this, we propose TinyV, a lightweight LLM-based verifier that augments existing rule-based methods by dynamically identifying potential false negatives and recovering valid responses to produce more accurate reward estimates. Across multiple math-reasoning benchmarks, integrating TinyV boosts pass rates by up to 10% and accelerates convergence relative to the baseline. Our findings highlight the critical importance of addressing verifier false negatives and offer a practical approach to improve RL-based fine-tuning of LLMs.
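The verification flow described in the abstract can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the TinyV implementation: the names `rule_based_verify`, `hybrid_reward`, and `toy_llm_verify` are hypothetical, the rule-based check is reduced to an exact string match, and the LLM verifier is stubbed as a plain function. The sketch only shows the idea that the small LLM is consulted when the rule-based check rejects an answer, so that false negatives can be recovered.

```python
from typing import Callable


def rule_based_verify(model_answer: str, reference: str) -> bool:
    """Hypothetical strict verifier: exact match after trimming whitespace."""
    return model_answer.strip() == reference.strip()


def hybrid_reward(
    model_answer: str,
    reference: str,
    llm_verify: Callable[[str, str], bool],
) -> float:
    """Return 1.0 if either verifier accepts the answer, else 0.0.

    The LLM verifier is only called when the rule-based check fails,
    so the extra cost is limited to potential false negatives.
    """
    if rule_based_verify(model_answer, reference):
        return 1.0
    return 1.0 if llm_verify(model_answer, reference) else 0.0


def toy_llm_verify(model_answer: str, reference: str) -> bool:
    """Stand-in for a small LLM judge (assumption): compares numeric values."""
    try:
        return float(model_answer) == float(reference)
    except ValueError:
        return False


print(hybrid_reward("0.5", "0.5", toy_llm_verify))   # accepted by the rule-based check
print(hybrid_reward("0.50", "0.5", toy_llm_verify))  # rejected by rules, recovered by the stub
print(hybrid_reward("0.7", "0.5", toy_llm_verify))   # rejected by both
```

In a real setup the stub would be replaced by a call to the fine-tuned verifier model; the actual pipeline is in the codebase.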

- 📄 Technical Report - Including false negative analysis and theoretical insights behind TinyV

- 💾 Github Repo - Access the complete pipeline for more efficient RL training via TinyV

- 🤗 HF Collection - Training Data, Benchmarks, and Model Artifact

This model is a fine-tuned version of Qwen/Qwen2.5-1.5B-Instruct on the zhangchenxu/TinyV_Training_Data_Balanced dataset.

Overview

How to use it?

Please refer to the codebase: https://github.com/uw-nsl/TinyV for details.
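For a quick local test outside the full pipeline, the checkpoint can in principle be loaded like any Qwen2.5 chat model through `transformers`. The sketch below rests on several assumptions: `model_id` must be set to this checkpoint's repository id (not stated here), the helper names `build_messages` and `judge` are hypothetical, and the prompt wording is a placeholder. The exact verification prompt is defined in the codebase and should be used instead for meaningful results.

```python
def build_messages(question: str, reference: str, model_answer: str) -> list[dict]:
    """Assemble a chat-style verification request (placeholder wording)."""
    prompt = (
        "Decide whether the model answer is equivalent to the reference answer.\n"
        f"Question: {question}\n"
        f"Reference answer: {reference}\n"
        f"Model answer: {model_answer}\n"
        "Reply with True or False."
    )
    return [{"role": "user", "content": prompt}]


def judge(model_id: str, question: str, reference: str, model_answer: str) -> str:
    """Generate the verifier's raw text verdict for one example."""
    # Imported lazily so build_messages works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)

    text = tokenizer.apply_chat_template(
        build_messages(question, reference, model_answer),
        tokenize=False,
        add_generation_prompt=True,
    )
    inputs = tokenizer(text, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=16)
    # Decode only the newly generated tokens, not the prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `judge(model_id, "What is 1/2 as a decimal?", "0.5", "1/2")` would return the model's raw text reply; how that reply maps to a reward is determined by the pipeline in the repository.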

Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- distributed_type: multi-GPU

- num_devices: 8

- gradient_accumulation_steps: 8

- total_train_batch_size: 512

- total_eval_batch_size: 64

- optimizer: adamw_torch with betas=(0.9, 0.999) and epsilon=1e-08 (no additional optimizer arguments)

- lr_scheduler_type: cosine

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 2.0
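The batch-size totals and the learning-rate schedule listed above can be checked with a few lines of arithmetic. The sketch below uses the common linear-warmup plus cosine-decay formula as an approximation of the `cosine` scheduler; the function name `cosine_lr` and the value of `TOTAL_STEPS` are assumptions for illustration, since the number of optimizer steps depends on the dataset size, which is not stated here.

```python
import math

# Values taken from the hyperparameter list above.
PER_DEVICE_BATCH = 8
NUM_DEVICES = 8
GRAD_ACCUM_STEPS = 8
PEAK_LR = 1e-5
WARMUP_RATIO = 0.1

# Effective batch size: samples contributing to each optimizer step.
total_train_batch_size = PER_DEVICE_BATCH * NUM_DEVICES * GRAD_ACCUM_STEPS
# Evaluation does not accumulate gradients, so only the devices multiply.
total_eval_batch_size = PER_DEVICE_BATCH * NUM_DEVICES


def cosine_lr(step: int, total_steps: int) -> float:
    """Linear warmup to PEAK_LR, then cosine decay to zero."""
    warmup_steps = int(WARMUP_RATIO * total_steps)
    if step < warmup_steps:
        return PEAK_LR * step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return PEAK_LR * 0.5 * (1.0 + math.cos(math.pi * progress))


TOTAL_STEPS = 1000  # hypothetical; the real value depends on dataset size

print(total_train_batch_size, total_eval_batch_size)
for step in (0, 50, 100, 550, 1000):
    print(step, cosine_lr(step, TOTAL_STEPS))
```

With these settings the learning rate rises linearly over the first 10% of steps, peaks at 1e-05, and decays toward zero by the final step.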

Framework versions

- Transformers 4.48.3

- Pytorch 2.5.0

- Datasets 3.2.0

- Tokenizers 0.21.0